AI-Native Architectures: Building Smarter Systems in 2025

Key Takeaways

AI-native architectures are transforming systems by making intelligence the core—not an add-on—powering every interaction, decision, and process. Embracing this shift enables startups and SMBs to build smarter, faster, and more adaptive products ready for 2025’s demands.

- Put AI at your system’s core by designing with real-time, unified data pipelines and hybrid edge-cloud compute for optimal speed and scalability.

- Automate continuous learning with feedback loops that keep AI models fresh, reducing drift and manual upkeep for sustained accuracy.

- Leverage elastic scaling to dynamically align resources with demand, which can cut costs significantly (by some estimates, up to 30%) without sacrificing performance.

- Prioritize security and ethical governance from day one using privacy-preserving techniques, role-based access, and ongoing model audits to build trust and compliance.

- Embed explainability and transparency to enable real-time AI decision tracing and empower stakeholders with clear, actionable insights.

- Combat AI fatigue by adopting minimal viable toolkits, focusing on quality updates, and using iterative development cycles for sustainable innovation.

- Adopt AI-as-a-service models to lower upfront costs, simplify integration, and accelerate time-to-value with flexible pay-as-you-go intelligence.

- Foster a culture of curiosity and ownership that embraces rapid iteration, accountability, and cross-functional teamwork aligned with AI’s fast evolution.

Ready to evolve beyond AI add-ons? These principles help you build living, learning systems that outpace change and fuel breakthrough growth. Read on for the implementation details and architectural decisions needed to make your architecture truly AI-native.

Introduction

What if your software didn’t just respond but truly understood and adapted to your users, your business, and the world around it?

In 2025, AI-native architectures are making that a reality—shifting artificial intelligence from a helpful add-on to the very foundation of smarter systems. Unlike traditional approaches, which are limited in adaptability and lack deep integration, AI-native designs embed intelligence throughout every layer, enabling continuous learning, real-time decision-making, and effortless scalability.

For startups, SMBs, and enterprises facing rapid change, this means unlocking:

- Real-time, data-driven responsiveness that keeps pace with demand

- Automated, scalable workflows that cut costs and boost efficiency

- AI-powered experiences tailored to every individual user without manual tweaks

If you’ve felt the limits of patchwork AI solutions or worried about staying competitive in an ever-faster market, embracing AI-native architecture is your next step. This move represents a paradigm shift in system design, turning your technology from reactive to proactive, and transforming static codebases into living systems that evolve as you grow.

In the sections ahead, you’ll discover how these architectures reshape:

- Core design principles that embed AI at the heart of your stack

- Data and compute strategies powering seamless intelligence

- Real-world benefits and use cases showing tangible business impact

- Common hurdles and practical ways to overcome them

- Future trends pointing toward autonomous, self-optimizing platforms

By the end, you’ll see why building your systems AI-native isn’t just smart—it’s essential for keeping pace with 2025’s digital demands.

Let’s explore what it really means to design smarter systems from the ground up.

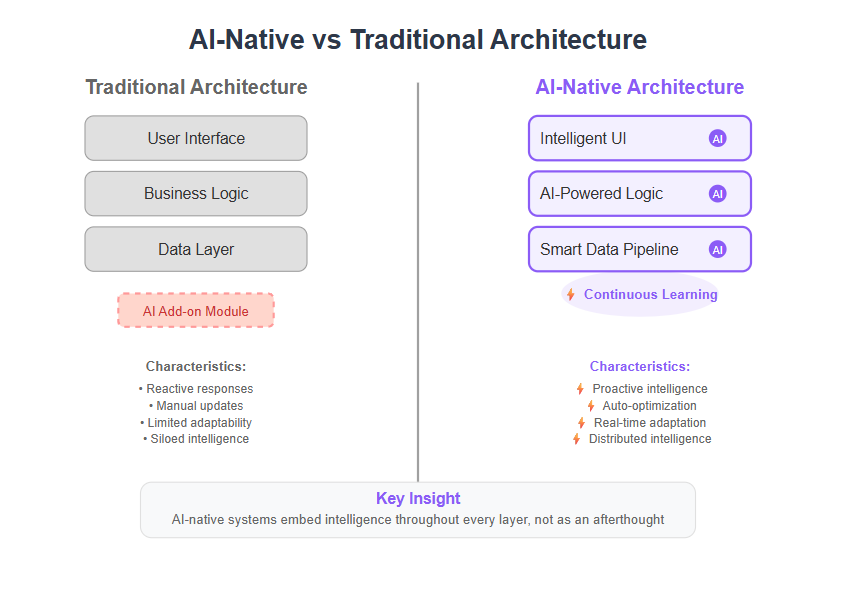

Understanding AI-Native Architectures: Core Principles and Design Paradigms

AI-native architecture means building systems where AI isn’t a sidekick but the central nervous system powering every layer. Its core components include distributed intelligence, autonomous business-logic agents, and dynamic discovery mechanisms that rely on semantic metadata, such as Agent Cards, describing what each agent can do. Unlike AI-enhanced or AI-embedded setups, where intelligence is an add-on or an isolated module, AI-native design saturates infrastructure, workflows, and UX with continuous, adaptive intelligence. Integrating AI across the entire application lifecycle, from design through deployment and operations, enables automation, continuous learning, and autonomous functionality, and it is what sets AI-native architectures apart from traditional systems.
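Capability-based discovery can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `AgentCard` dataclass, its `skills` field, and the `AgentRegistry` class are hypothetical and do not reproduce the schema of any real protocol. Each agent publishes a card describing what it can do, and callers find agents by the capabilities they need rather than by hard-coded addresses.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentCard:
    """Hypothetical semantic metadata an agent publishes about itself."""
    name: str
    endpoint: str
    skills: frozenset[str] = field(default_factory=frozenset)


class AgentRegistry:
    """In-memory registry; a real system would use a shared discovery service."""

    def __init__(self) -> None:
        self._cards: dict[str, AgentCard] = {}

    def register(self, card: AgentCard) -> None:
        # Re-registering under the same name replaces the previous card.
        self._cards[card.name] = card

    def discover(self, required: set[str]) -> list[AgentCard]:
        # Return every agent whose advertised skills cover all required skills,
        # sorted by name so results are deterministic.
        return sorted(
            (card for card in self._cards.values() if required <= card.skills),
            key=lambda card: card.name,
        )


# Hypothetical agents and endpoints, for illustration only.
registry = AgentRegistry()
registry.register(AgentCard("billing", "http://billing.internal", frozenset({"invoice", "refund"})))
registry.register(AgentCard("support", "http://support.internal", frozenset({"refund", "chat"})))

print([card.name for card in registry.discover({"refund"})])  # ['billing', 'support']
```

Because callers depend on advertised capabilities, a new agent becomes reachable as soon as it registers, with no changes to the calling code.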

From Add-On to Core: A Fundamental Shift

Think of AI-enhanced systems like a smartphone with a smart-assistant app: helpful, but separate. AI-native architectures make AI the operating system itself. Bolting AI onto existing or traditional systems as an enhancement, by contrast, usually limits what it can do and leaves much of its potential untapped. Putting AI at the core means:

- Data-driven foundations that feed real-time, unified data pipelines

- Distributed intelligence splitting work between edge devices and cloud for speed and scale

- Continuous learning loops that auto-upgrade models based on live feedback

- Built-in scalability, security, and governance from day one

This shift changes systems from reactive tools into proactive, evolving partners.

Pillars of AI-Native Design in 2025

Key architectural pillars empower smarter, adaptive systems that truly learn and respond:

- Robust, real-time data pipelines for continuous ingestion and integrity

- Hybrid compute distribution that lets low-latency processing at the edge and heavy AI workloads in the cloud work in harmony

- Automated model retraining to reduce drift and maintain accuracy

- Elastic scaling adjusting resources dynamically as demand fluctuates

- Security and ethical governance baked into every component to foster trust and compliance

AI-native architectures build on many core cloud-native principles, such as microservices, containerization, automation, scalability, and resilience, to enable efficient, scalable, and fault-tolerant systems. However, while cloud-native systems focus on optimizing application deployment and operations in the cloud, AI-native designs emphasize autonomous learning, real-time data handling, and adaptive intelligence, which sets them apart in both purpose and capability.

Imagine AI as the system’s nervous system, constantly sensing, learning, and reacting: far more than a tool plugged in after the fact.

Real-World Picture

Picture a startup using AI-native architecture: their app learns each user’s habits, predicts needs before they ask, and scales smoothly during viral growth—all without manual tweaks. The system isn’t just smart; it’s smart by design.

By continuously analyzing user behavior and interactions, the app personalizes each user’s experience and improves its features over time.
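A toy version of “learning each user’s habits” can be written as an online frequency model. This is a sketch assuming interactions arrive as (user, context, action) events; the `HabitModel` class and the context labels are hypothetical, and a production system would use a proper recommendation model rather than raw counts.

```python
from __future__ import annotations

from collections import Counter, defaultdict


class HabitModel:
    """Learns, per user and context, which action the user usually takes."""

    def __init__(self) -> None:
        # (user_id, context) -> counts of the actions observed in that context
        self._counts: defaultdict[tuple[str, str], Counter[str]] = defaultdict(Counter)

    def observe(self, user_id: str, context: str, action: str) -> None:
        # Every interaction updates the model immediately (online learning).
        self._counts[(user_id, context)][action] += 1

    def predict(self, user_id: str, context: str) -> str | None:
        counts = self._counts.get((user_id, context))
        if not counts:
            return None  # no history yet: the caller falls back to defaults
        return counts.most_common(1)[0][0]


model = HabitModel()
for action in ["open_reports", "open_reports", "check_inbox"]:
    model.observe("user-1", "monday_morning", action)

print(model.predict("user-1", "monday_morning"))  # open_reports
print(model.predict("user-2", "monday_morning"))  # None
```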

Takeaways You Can Use Today

- Design your systems with AI at the core—not added on later.

- Prioritize real-time data flow and hybrid compute for optimal responsiveness.

- Automate continuous learning to keep models fresh without constant manual updates.

To fully leverage AI-native systems, rethink your existing workflows and adopt AI workflows that place AI agents at the center of process design. This shift enables fundamental process redesign and unlocks the full potential of AI in your organization.

“AI-native isn’t AI slapped on—it’s intelligence woven into the very fabric of tech.”

“Think of your system as a living organism—AI is its nervous system, sensing and adapting in real time.”

By embracing these core principles, you’re setting up smarter, more agile systems ready for 2025’s fast-changing demands.

Data Infrastructure and Processing in AI-Native Systems

Real-Time, Event-Driven Data Pipelines

At the heart of AI-native systems lie unified, real-time data lakes that continuously ingest and process raw data from diverse sources, supporting immediate analytics and trustworthy, structured pipelines. Imagine a stream where every drop flows seamlessly into your system without lag.

Designing these data pipelines means prioritizing:

- Data security and compliance to protect sensitive information

- Integrity checks ensuring data accuracy and consistency

- Event-driven architecture that triggers instant analytics and automated feedback loops, enabling systems to react immediately to new data

These architectures enable real-time inference, allowing immediate, context-aware decisions as data is generated. As a result, organizations gain real-time insights that drive dynamic business actions and operational automation.

Think of this like a smart thermostat sensing temperature changes in real time and adjusting settings instantly — except at massive scale across industries.
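The pattern in the list above (validate each event, then let subscribers react immediately) can be sketched in plain Python. The `EventPipeline` class, the required field names, and the 30 °C threshold are illustrative assumptions; a production system would sit on a streaming platform rather than in-process callbacks, and the threshold rule stands in for a real model.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Event:
    source: str
    payload: dict


Handler = Callable[[Event], None]


class EventPipeline:
    """Validates each incoming event, then pushes it to every subscriber at once."""

    def __init__(self, required_fields: set[str]) -> None:
        self.required_fields = required_fields
        self.subscribers: list[Handler] = []
        self.rejected: list[Event] = []  # quarantined for later inspection

    def subscribe(self, handler: Handler) -> None:
        self.subscribers.append(handler)

    def publish(self, event: Event) -> bool:
        # Integrity check: quarantine events that are missing required fields.
        if not self.required_fields.issubset(event.payload):
            self.rejected.append(event)
            return False
        # Event-driven: subscribers react as soon as the event arrives.
        for handler in self.subscribers:
            handler(event)
        return True


alerts: list[str] = []


def overheat_alert(event: Event) -> None:
    # Stand-in for real-time inference: a simple threshold rule.
    if event.payload["value"] > 30.0:
        alerts.append(event.payload["sensor_id"])


pipeline = EventPipeline(required_fields={"sensor_id", "value"})
pipeline.subscribe(overheat_alert)
pipeline.publish(Event("hvac", {"sensor_id": "t-17", "value": 34.2}))  # accepted, raises an alert
pipeline.publish(Event("hvac", {"sensor_id": "t-18"}))                 # rejected: no "value"
print(alerts, len(pipeline.rejected))  # ['t-17'] 1
```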

Distributed Compute: Edge and Cloud Synergy

AI workloads must balance lightning-fast responses against heavy computation, which makes hybrid compute distribution essential. This strategy splits processing between:

- Edge computing for latency-sensitive tasks that need immediate answers, such as camera-based security alerts or voice assistants

- Cloud computing for large-scale model training and deep analytics that require powerful resources

This synergy reduces delays and cuts operational costs. By automating the distribution of tasks between edge and cloud, hybrid compute distribution minimizes the need for manual intervention in operational tasks. Picture smart buildings where edge sensors detect anomalies instantly while cloud systems analyze patterns over weeks to optimize energy use.
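The edge-or-cloud decision can be reduced to a routing policy. The sketch below is hypothetical: the `Task` fields, the capacity constants, and the rule itself are assumptions chosen to mirror the split described above, not a standard scheduler.

```python
from dataclasses import dataclass

# Illustrative capacity figures; real values depend on hardware and network.
EDGE_MAX_COMPUTE_UNITS = 5.0   # largest job an edge device can run
CLOUD_ROUND_TRIP_MS = 120.0    # assumed network latency to the cloud


@dataclass
class Task:
    name: str
    latency_budget_ms: float  # how quickly an answer is needed
    compute_units: float      # rough size of the workload


def route(task: Task) -> str:
    """Run urgent, lightweight work at the edge; send everything else to the cloud."""
    too_urgent_for_cloud = task.latency_budget_ms < CLOUD_ROUND_TRIP_MS
    fits_on_edge = task.compute_units <= EDGE_MAX_COMPUTE_UNITS
    if too_urgent_for_cloud and fits_on_edge:
        return "edge"
    # Heavy or non-urgent work goes to the cloud. A real system might instead run a
    # smaller model locally when urgent work is too heavy for the edge.
    return "cloud"


print(route(Task("intrusion_alert", latency_budget_ms=50, compute_units=1)))             # edge
print(route(Task("weekly_energy_report", latency_budget_ms=60_000, compute_units=500)))  # cloud
```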

Continuous Model Training and Adaptation

Static AI models are so 2020. Today’s AI-native systems embrace continuous learning cycles that keep models fresh and accurate by incorporating:

- Automated user feedback loops

- Operational data streams for ongoing refinement

Modern systems use robust training pipelines to automate model retraining, so new data and features are integrated as they arrive. Real-time feedback from users and systems feeds directly into these pipelines, enabling instant content discovery and dynamic optimization. Throughout the process, performance metrics are monitored continuously to evaluate and optimize model accuracy and reliability.

This process counters model drift (the slow degradation of accuracy over time) and keeps systems agile and relevant. Unlike periodic manual updates, continuous adaptation works like a living brain, constantly tuning itself to new experiences.
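One way to automate the retraining trigger is to track rolling accuracy against a baseline. This is a minimal sketch assuming ground-truth outcomes eventually arrive for each prediction; the `DriftMonitor` class, the 5-point tolerance, and the window size are illustrative choices. When labels arrive late or never, teams typically also monitor shifts in the input data distribution.

```python
from __future__ import annotations

from collections import deque


class DriftMonitor:
    """Tracks recent prediction accuracy and signals when retraining is due."""

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05, window: int = 100) -> None:
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes: deque[bool] = deque(maxlen=window)

    def record(self, prediction: object, actual: object) -> None:
        # Feedback loop: compare each prediction with the outcome observed later.
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def needs_retraining(self) -> bool:
        # Judge only once the window is full, so a few early errors don't trigger it.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.rolling_accuracy() < self.baseline - self.tolerance


monitor = DriftMonitor(baseline_accuracy=0.92, tolerance=0.05, window=10)
for prediction, actual in [(1, 1)] * 6 + [(1, 0)] * 4:  # 60% recent accuracy
    monitor.record(prediction, actual)

if monitor.needs_retraining():
    print(f"rolling accuracy {monitor.rolling_accuracy():.0%}: schedule retraining")
```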

Quick Takeaways You Can Use Now:

- Build event-driven data pipelines to unlock real-time responsiveness in your applications

- Leverage a hybrid edge-cloud compute model to balance speed and scale, cutting costs without sacrificing performance

- Adopt continuous training workflows to keep AI models sharp and aligned with evolving user needs

By embedding AI deeply into data and compute layers, you create smarter systems that react and learn as fast as your business moves.

"Real-time data is the nervous system of AI-native systems—without it, your brain can’t react."

"Think of edge and cloud as tag-team partners: one quick on the reaction, the other strong on the heavy lifting."

"Continuous adaptation is how AI stays smart — not just today, but tomorrow and beyond."

Strategic Benefits of AI-Native Architectures for Businesses

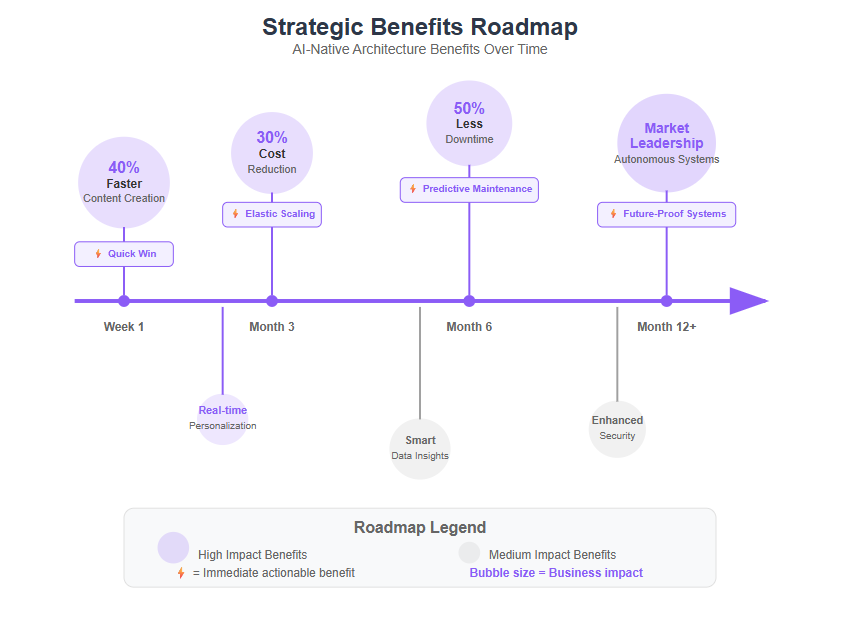

AI-native architectures reshape businesses by putting intelligence at the core of systems rather than bolting it on. Because they can integrate with existing workflows, these architectures can deliver tangible value early and shorten the path to ROI. This shift unlocks powerful advantages that drive growth, efficiency, and innovation.

What Makes AI-Native Systems Transformative?

Here are 7 key benefits that AI-native architectures offer organizations in 2025 and beyond:

- Enhanced operational efficiency by automating complex workflows, reducing manual effort, and speeding up processes.

- Elastic scalability that dynamically adjusts resources to match demand, optimizing costs without sacrificing performance.

- Superior decision-making fueled by real-time data, predictive analytics, and continuous learning. These systems can autonomously monitor and optimize their own performance, ensuring ongoing improvement.

- Improved user experience through AI-driven personalization and conversational interfaces, making interactions smarter and more intuitive.

- Increased agility with systems that adapt automatically to changing environments and user needs.

- Stronger security and governance embedded from the start, ensuring ethical AI use and compliance.

- Competitive differentiation by embedding AI capabilities seamlessly, accelerating time to market for new features and products.

Real-World Wins Across Business Sizes and Sectors

Picture this: a midsize SaaS startup adopts AI-native workflows that cut content-creation time by 40%, freeing its team to focus on strategic growth. Or picture an enterprise in LATAM whose distributed, AI-powered networks self-heal and reduce downtime by 30%.

Startups and SMBs leverage real-time personalization to boost customer retention, while large enterprises gain predictive maintenance that cuts operational costs and carbon footprints. Additionally, AI-native platforms can automate inventory management, streamlining multi-step workflows and enhancing operational efficiency for e-commerce and SaaS businesses.

Why This Matters to You

- Automate intelligently: Let AI handle repetitive, complex tasks and free your team for creative work.

- Scale smartly: Only pay for the compute you need, exactly when you need it.

- Make sharper decisions: Use continuous feedback loops to stay ahead of trends and issues.

Quotable takeaways:

“AI-native systems turn data overload into real-time, actionable intelligence for your business.”

“Elasticity isn’t just a buzzword — it’s the secret to balancing cost and performance in 2025.”

“Smarter workflows mean your team spends less time firefighting and more time innovating.”

Building with AI-native architecture isn’t about chasing hype; it’s about creating systems that learn, adapt, and accelerate your goals every step of the way.

Key Use Cases Demonstrating AI-Native Architecture Impact

Smart Buildings and Autonomous Management Systems

Imagine a building that thinks ahead—AI agents monitor every system to predict maintenance needs, optimize energy use, and enhance security. In these environments, autonomous agents are responsible for continuously monitoring and managing building systems, enabling dynamic adjustments and seamless automation.

This happens through:

- Edge sensors collecting real-time data

- Cloud analytics processing these inputs at scale to uncover longer-term patterns

- Autonomous adjustments to HVAC, lighting, and security systems

The results?

- Significant operational cost savings

- Enhanced sustainability through smart energy management

- Improved occupant comfort

Picture your office adjusting temperature just before you arrive, cutting energy waste without human input. That’s AI-native in action.
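The “adjust before you arrive” behavior boils down to simple arithmetic once the system has predicted an arrival time and learned how fast the room heats or cools. The sketch below assumes a constant rate of temperature change (a simplification); `preconditioning_start` is a hypothetical helper.

```python
from datetime import datetime, timedelta


def preconditioning_start(arrival: datetime, current_temp_c: float,
                          target_temp_c: float, rate_c_per_min: float) -> datetime:
    """Return when HVAC should start so the room reaches the target at arrival.

    Assumes a constant heating/cooling rate, which is a simplification; a learned
    model of the building's thermal response would replace it in practice.
    """
    if rate_c_per_min <= 0:
        raise ValueError("rate_c_per_min must be positive")
    minutes_needed = abs(target_temp_c - current_temp_c) / rate_c_per_min
    return arrival - timedelta(minutes=minutes_needed)


# Predicted arrival at 09:00; room at 17 °C, target 21 °C, heating at 0.25 °C per minute.
start = preconditioning_start(datetime(2025, 3, 3, 9, 0), current_temp_c=17.0,
                              target_temp_c=21.0, rate_c_per_min=0.25)
print(start.time())  # 08:44:00
```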

AI-Native Networking and Unified Intelligent Control

Networks are no longer just pipes—they’re becoming intelligent ecosystems.

AI-native networks offer:

- Real-time analytics for proactive issue detection

- Self-healing capabilities that fix problems without downtime

- Integrated security frameworks uniting IT, security, and operations

A standout example is Juniper’s AI-native network transformation, which applies AI across the network to create an adaptive control plane.

This convergence means networks respond instantly, defend themselves intelligently, and evolve continuously, cutting costs and boosting reliability.
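Self-healing boils down to a detect, remediate, verify loop. The sketch below simulates devices with in-memory health checks; `self_heal`, the device names, and the restart behavior are hypothetical stand-ins for real telemetry and automation APIs.

```python
from typing import Callable


def self_heal(health_checks: dict[str, Callable[[], bool]],
              remediate: Callable[[str], bool]) -> dict[str, str]:
    """Probe every device and attempt an automated fix for any that fail.

    Returns a status per device: "healthy", "healed", or "escalated".
    """
    status: dict[str, str] = {}
    for name, is_healthy in health_checks.items():
        if is_healthy():
            status[name] = "healthy"
        elif remediate(name) and is_healthy():
            # Re-check after remediation to confirm the fix actually worked.
            status[name] = "healed"
        else:
            status[name] = "escalated"  # hand off to a human operator
    return status


# Simulated network state: True means the device is up.
state = {"switch-a": True, "ap-7": False, "router-2": False}


def restart(name: str) -> bool:
    # Simulated remediation: a restart fixes the access point but not the router.
    if name == "ap-7":
        state[name] = True
        return True
    return False


checks = {name: (lambda n=name: state[n]) for name in state}
result = self_heal(checks, restart)
print(result)  # {'switch-a': 'healthy', 'ap-7': 'healed', 'router-2': 'escalated'}
```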

SaaS and Enterprise Platform Evolution

Modern SaaS platforms now embed AI from day one, unlike older systems that bolt it on later.

Key features include:

- Large language models (LLMs) and ML integrated for deep personalization

- Interfaces that anticipate user needs and automate routine tasks

- Predictive analytics driving smarter business decisions

- Prompt chains that pass model outputs from one step to the next, enabling real-time orchestration and more accurate, context-aware responses

- Intelligent agents that deliver personalized and automated experiences by interacting with prompt chains, model outputs, and enterprise data sources
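A prompt chain simply feeds each step’s output into the next step’s prompt. The sketch below assumes a model is any text-in, text-out function; `run_chain` and `fake_model` are hypothetical, and the stub replaces a real LLM call so the example runs offline.

```python
from typing import Callable

# Any text-in, text-out model client fits this type (hypothetical interface).
Model = Callable[[str], str]


def run_chain(model: Model, templates: list[str], user_input: str) -> str:
    """Run each template in order, feeding the previous output into {input}."""
    text = user_input
    for template in templates:
        text = model(template.format(input=text))
    return text


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call: wraps the prompt so the data flow is visible.
    return f"<{prompt}>"


steps = ["Summarize: {input}", "Draft a reply to: {input}"]
print(run_chain(fake_model, steps, "Order 123 arrived damaged"))
# <Draft a reply to: <Summarize: Order 123 arrived damaged>>
```

In a real chain, an intermediate step would typically also pull in enterprise data (for example, the order record) before the final prompt is built.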

Startups and SMBs using AI-native platforms report faster workflows and better customer engagement, gaining a sharp edge in competitive markets.

Think of it as your software partner reading your mind—and acting before you ask.

Across these use cases, AI-native architectures transform static systems into living, learning engines that adapt, automate, and optimize in real time. Through feedback and autonomous refinement, they keep improving and stay effective as conditions change.

They’re not just smarter—they’re more efficient, more reliable, and more aligned with today’s fast-paced digital world.

If you’re ready to build or evolve your tech stack, these examples show the real-world power of putting AI at the core—not on the sidelines.

Overcoming Challenges in Implementing AI-Native Architectures

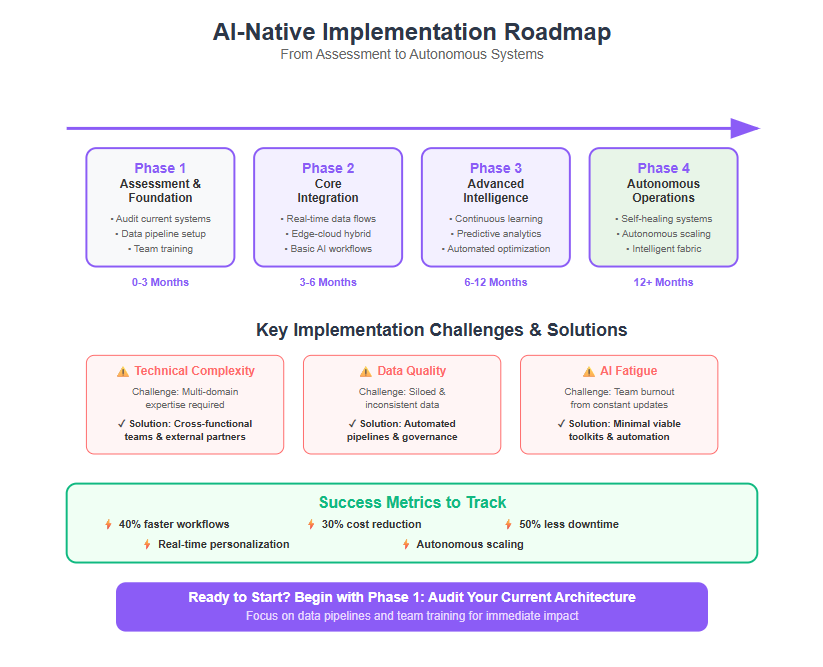

Implementing AI-native architectures isn’t a walk in the park—several challenges can complicate the journey. Integrating AI into separate systems, where transactional processing, analytics, and machine learning operate independently, often leads to complexity and inefficiency compared to unified AI-native architectures. Startups and SMBs often face five critical hurdles that can stall progress or erode ROI.

Five Key Challenges to Watch Out For

- Technical Complexity: Integrating AI deeply across distributed systems demands expertise in diverse domains, from edge computing to cloud orchestration.

- Data Quality and Accessibility: AI thrives on clean, real-time data; poor data hygiene or siloed sources can cripple performance.

- Model Governance: Continuous learning models require robust validation and version control to catch drift and bias early and to roll back faulty versions.

- Security Risks: Expanding AI’s footprint broadens attack surfaces, requiring proactive threat detection and privacy protections.

- Operational Scaling: Scaling AI workloads dynamically without ballooning costs or latency is a major infrastructure challenge.

Practical Fixes That Get You Moving

Tackling these challenges isn’t about perfect solutions overnight—it’s about smart, iterative improvements and teamwork.

- Set up cross-functional squads bringing data engineers, data scientists, AI specialists, security experts, and product owners under one roof.

- Invest in automated data pipelines that flag anomalies and enforce compliance in real time.

- Use model monitoring tools to track accuracy and fairness, rolling back or retraining models as needed.

- Harden AI endpoints with zero-trust security practices and encrypt sensitive data both at rest and in transit.

- Apply elastic cloud resources and container orchestration for efficient workload balancing on demand.
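Versioning plus a validation gate is what makes rolling back a one-line operation. This is a minimal, hypothetical sketch: `ModelRegistry`, `ModelVersion`, and the accuracy floor are illustrative, and real deployments would use a dedicated model registry and monitoring stack.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ModelVersion:
    version: int
    accuracy: float  # offline validation score


class ModelRegistry:
    """Keeps every deployed version so a bad release can be rolled back."""

    def __init__(self, min_accuracy: float) -> None:
        self.min_accuracy = min_accuracy
        self.history: list[ModelVersion] = []

    @property
    def active(self) -> ModelVersion | None:
        return self.history[-1] if self.history else None

    def deploy(self, candidate: ModelVersion) -> bool:
        # Validation gate: refuse candidates that miss the accuracy floor.
        if candidate.accuracy < self.min_accuracy:
            return False
        self.history.append(candidate)
        return True

    def rollback(self) -> ModelVersion | None:
        # Drop the active version and fall back to the previous one, if any.
        if len(self.history) > 1:
            self.history.pop()
        return self.active


models = ModelRegistry(min_accuracy=0.85)
models.deploy(ModelVersion(1, accuracy=0.90))  # accepted
models.deploy(ModelVersion(2, accuracy=0.80))  # rejected: fails the validation gate
models.deploy(ModelVersion(3, accuracy=0.91))  # accepted
# Later, production monitoring shows version 3 degrading, so roll back.
print(models.rollback().version)  # 1
```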

Managing AI Fatigue With Sustainable Practices

AI fatigue—burnout from constant retraining and tweaking—is real and can kill momentum.

- Adopt minimal viable AI toolkits that focus on core capabilities without overcomplication.

- Build feedback loops that prioritize quality over quantity of updates, balancing innovation with stability.

- Schedule regular “pause and review” cycles for teams to assess impact and adjust workflows.

Picture this: instead of drowning in alerts or endless rebuilds, your system evolves steadily—learning what works and scaling smartly without surprises.

These approaches keep teams energized and systems resilient, turning AI-native adoption into a sustainable, competitive advantage.

"Overcoming AI-native implementation hurdles requires more than tech—it demands collaboration, vigilance, and patience."

"Automated governance and cross-team agility transform complexity into continuous progress."

"Managing AI fatigue isn't about pushing harder; it’s about working smarter with focused, sustainable toolkits."

Navigating AI-native challenges well means embracing the messiness of innovation with clear roles, strong monitoring, and practical guardrails—so your smarter system truly lives up to its promise.

Designing Scalable and Future-Proof AI-Native Systems

Principles for Scalable Architecture

Building AI-native systems that last means focusing on elasticity, modularity, and seamless AI integration from day one.

Elasticity allows your system to automatically adjust resources—scaling up during peak demand, scaling down when traffic dips—to cut costs without sacrificing performance.
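The scale-up/scale-down decision is often a simple proportional rule. The function below is modeled on the formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), including its default 10% tolerance band; the replica bounds and example metrics are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule modeled on the Kubernetes HPA formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6 (scale up)
print(desired_replicas(6, current_metric=15, target_metric=60))  # 2 (scale down)
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4 (within tolerance)
```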

Modularity breaks the system into independent, reusable components. This makes adding new features or swapping AI models less of a headache.
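In Python, this kind of modularity can be expressed with a structural interface, so business logic depends on what a model does rather than which model it is. The `SentimentModel` protocol, both model classes, and `TicketRouter` below are hypothetical illustrations.

```python
from typing import Protocol


class SentimentModel(Protocol):
    def predict(self, text: str) -> str: ...


class KeywordModel:
    """Cheap rule-based baseline."""

    def predict(self, text: str) -> str:
        return "negative" if "refund" in text.lower() else "positive"


class ConstantModel:
    """Placeholder standing in for, e.g., a hosted classifier."""

    def __init__(self, label: str) -> None:
        self.label = label

    def predict(self, text: str) -> str:
        return self.label


class TicketRouter:
    """Business logic depends only on the SentimentModel interface."""

    def __init__(self, model: SentimentModel) -> None:
        self.model = model

    def route(self, ticket: str) -> str:
        return "priority-queue" if self.model.predict(ticket) == "negative" else "standard-queue"


router = TicketRouter(KeywordModel())
print(router.route("I want a refund"))    # priority-queue
router.model = ConstantModel("positive")  # swap models without touching routing logic
print(router.route("I want a refund"))    # standard-queue
```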

Dynamic resource allocation balances performance demands with cost-efficiency. For instance:

- Use edge computing for ultra-low latency tasks

- Offload heavy computations to the cloud when real-time response isn’t critical

Picture a ride-sharing app that instantly matches you with a driver, no matter how many users are on the platform at once—that’s scalable architecture in action.

Sustainable AI Toolkits and Lifecycle Management

Choosing the right AI toolkits is more than picking popular frameworks; it’s about minimizing operational overhead and ensuring long-term maintainability. In AI-native development, this means adopting systematic, phased approaches and building robust data pipelines that support intelligent systems at every layer.

Focus on toolkits that support:

- Automated continuous retraining

- Seamless deployment pipelines

- Transparent governance and monitoring

This reduces the risk of “AI fatigue,” where teams burn out because models grow outdated or unmanageable under complex manual upkeep.

Real-world tip: Automate feedback loops that retrain models using live user data and log analytics. It’s like having your system improve itself while you sleep.

Transitioning to AI as a Service Models

“Smart Building as a Service” and similar consumption-based models are reshaping AI-native adoption.

These models:

- Lower upfront costs by offering pay-as-you-go access

- Simplify integration through standardized APIs and monitoring dashboards

- Suit startups and SMBs that need agility without heavy capital investment

Think of it as subscribing to intelligence: you don’t buy the whole AI infrastructure—you tap into it, scale when needed, and stay nimble.

Adopting AI-as-a-service means faster time-to-value and less internal complexity, freeing teams to focus on innovation instead of infrastructure headaches.
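Whether pay-as-you-go stays cheaper depends on volume, and the break-even point is a one-line calculation. Every price below is a made-up placeholder, and the model deliberately ignores staffing, data egress, and volume discounts.

```python
def monthly_cost_payg(requests: int, price_per_1k: float) -> float:
    """Usage-based cost: you pay only for the requests you make."""
    return requests / 1000 * price_per_1k


def break_even_requests(price_per_1k: float, self_hosted_monthly: float) -> float:
    """Monthly request volume above which a fixed self-hosted cost becomes cheaper."""
    return self_hosted_monthly / price_per_1k * 1000


# Hypothetical figures: $0.50 per 1,000 requests vs. $4,000/month to self-host.
print(monthly_cost_payg(2_000_000, price_per_1k=0.50))                   # 1000.0
print(break_even_requests(price_per_1k=0.50, self_hosted_monthly=4000))  # 8000000.0
```

On these assumptions, 2 million requests a month costs a quarter of the self-hosted figure; only beyond roughly 8 million requests does owning the infrastructure start to pay off.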

Scalable AI-native systems blend flexible architecture, sustainable AI lifecycles, and smart service models. Start treating AI not as a one-off feature but as a living, breathing system that grows and adapts with your business needs.

Security, Explainability, and Ethical Governance in AI-Native Systems

Embedding explainability and auditability from day one is non-negotiable in AI-native architectures. Maintaining human oversight is essential in these systems to ensure trust, accountability, and control as organizations transition from traditional to AI-native workflows. These systems must provide clear reasoning for AI-driven decisions, enabling stakeholders to trace outcomes back through transparent logs and interpret model behavior instantly.

Balancing Trust and Compliance

Keeping pace with evolving regulations such as GDPR, CCPA, and emerging AI-specific laws is essential. Neglecting privacy or fairness mandates can be expensive: GDPR fines, for example, can reach €20 million or 4% of a company’s worldwide annual turnover, whichever is higher.

Key focus areas include:

- Privacy-preserving data processing techniques such as differential privacy and federated learning

- Enforcing strict role-based access and data encryption end-to-end

- Embedding AI fairness checks to detect and mitigate bias in real time

Implementing these measures not only protects sensitive user data but also builds user trust, a critical asset in competitive markets.
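Differential privacy can sound abstract, but its simplest form, the Laplace mechanism, fits in a few lines: add noise scaled to how much one person can change the answer. This sketch covers only a counting query; `private_count` is a hypothetical helper, and production use calls for a vetted library and careful tracking of the privacy budget across queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(flags: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon: smaller epsilon, more privacy, more noise.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sum(flags) + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(7)
opted_in = [True] * 130 + [False] * 70
# The released value is close to 130 but randomized, masking any single user's presence.
print(round(private_count(opted_in, epsilon=0.5, rng=rng), 1))
```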

Transparency Without Sacrificing Performance

Too much transparency can sometimes expose system vulnerabilities or overwhelm users with details. The challenge lies in designing explainability layers that reveal insights at the right level: clear enough for compliance and user confidence, yet efficient enough to keep operations smooth.

Consider an AI-native fraud detection system that automatically flags suspicious transactions. It should explain each flag meaningfully to auditors while still processing thousands of transactions a day without delay.
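For a linear scoring model, a faithful explanation is cheap: each feature’s contribution is just weight × value, so the reasons can be returned alongside the decision at almost no extra cost. The weights, feature names, and threshold below are hypothetical.

```python
from __future__ import annotations

# Hypothetical weights; a real system would learn these from labeled data.
WEIGHTS = {
    "amount_vs_typical": 2.0,  # transaction amount divided by the customer's usual amount
    "new_device": 1.5,         # 1 if the device has never been seen before
    "foreign_ip": 1.0,         # 1 if the IP geolocates outside the home country
}
FLAG_THRESHOLD = 4.0


def score_transaction(features: dict[str, float]) -> tuple[bool, float, list[str]]:
    """Return (flagged, score, reasons), with reasons ordered by contribution."""
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    reasons = [f"{name} contributed {value:.1f}" for name, value in ranked if value > 0]
    return score >= FLAG_THRESHOLD, score, reasons


flagged, score, reasons = score_transaction(
    {"amount_vs_typical": 1.5, "new_device": 1.0, "foreign_ip": 1.0}
)
print(flagged, score)  # True 5.5
print(reasons[0])      # amount_vs_typical contributed 3.0
```

Because the explanation comes straight from the model’s own arithmetic, it stays faithful and adds negligible latency. More complex models need a separate attribution method, such as SHAP values.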

Practical Ethical Governance Frameworks

Ethical governance in AI-native systems means ongoing oversight through cross-functional teams that:

- Constantly review AI decisions for unintended consequences

- Maintain accountability by owning errors and fixes promptly

- Cultivate a culture where transparency and ethical AI use are pillars, not afterthoughts

This proactive approach mitigates risks early and aligns with our core value: Own It. No excuses, just accountability.

Picture this: A startup using AI-native tools to personalize offers also runs automated audits to prevent discriminatory pricing—keeping them compliant and customer-friendly without manual micromanagement.
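An automated pricing audit can start as simply as comparing average offers across customer groups. This sketch is hypothetical: the group labels, the `pricing_disparity` helper, and the 10% ratio threshold are illustrative policy choices, not a legal standard, and a real audit would also control for legitimate price factors.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean


def pricing_disparity(offers: list[tuple[str, float]],
                      max_ratio: float = 1.10) -> tuple[bool, dict[str, float]]:
    """Flag when average offered prices differ too much between customer groups.

    `offers` holds (group, price) pairs; returns (flagged, average price per group).
    """
    by_group: dict[str, list[float]] = defaultdict(list)
    for group, price in offers:
        by_group[group].append(price)
    averages = {group: mean(prices) for group, prices in by_group.items()}
    if len(averages) < 2:
        return False, averages  # nothing to compare
    ratio = max(averages.values()) / min(averages.values())
    return ratio > max_ratio, averages


offers = [("group_a", 100.0), ("group_a", 110.0), ("group_b", 120.0), ("group_b", 130.0)]
flagged, averages = pricing_disparity(offers)
print(flagged, averages)  # True {'group_a': 105.0, 'group_b': 125.0}
```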

Key Takeaways for Your AI-Native Projects

Embed explainability from the start to enable real-time audits and build trust. Make sure your AI systems draw on relevant, up-to-date information to improve performance and support better decision-making.

Adopt privacy-first techniques to stay ahead of regulations and protect your users.

Build ethical oversight as a continuous practice, not a checkbox exercise.

Effective security, clear AI explanations, and ethical governance aren’t add-ons—they’re the backbone of smarter, safer AI-native systems poised for growth.

“Trust isn’t given. It’s built with transparent, accountable AI decisions.”

“Privacy-preserving AI is the new competitive edge for startups and SMBs.”

“When ethics drive AI, innovation wins sustainably.”

The Future of AI-Native Architectures: From Interface to Intelligent Fabric

In 2025, AI isn’t just an add-on; it’s the fabric woven through every part of your system’s behavior and evolution. AI-native approaches embed intelligence throughout the system from the start, making AI a foundational element rather than an afterthought. Imagine your software not as a machine with AI modules stuck on, but as a living network where intelligence flows, adapts, and self-optimizes in real time.

Shaping Autonomous, Self-Optimizing Platforms

The next wave of AI-native platforms will:

- Operate autonomously, adjusting without human intervention

- Self-optimize by continuously learning from new data streams

- Seamlessly blend human input with AI decisions to stay relevant and agile

Think of it like a smart organism — sensors, processors, and decision points collaborating instantly to fix problems, improve performance, and anticipate future needs.

Preparing Your Organization for AI’s Ongoing Innovation

To win with AI-native architectures, companies must:

- Embrace continuous learning and iterative development cycles; AI-native architectures bring intelligent workflows and automation into every stage of building, deploying, and maintaining applications.

- Build cross-functional teams that own AI implementation end-to-end

- Evaluate emerging tools selectively, adopting only what adds clear value, to prevent “AI fatigue” and maximize ROI

For example, adopting frameworks that support dynamic resource allocation lets costs scale up or down with demand, a practical move that can lower infrastructure expenses for cloud-based AI workloads; savings of up to 30% are sometimes cited.

Cultivating a Culture That Matches AI’s Pace

Human culture must match AI’s velocity and adaptability:

- Foster curiosity to experiment outside comfort zones

- Encourage quick decision-making with real accountability

- Accept failure as a stepping stone, not a setback

Picture your team iterating like a startup within your company, rapidly testing, learning, and evolving alongside your AI systems.

AI-native isn’t about plugging in smart features anymore. It’s about letting intelligence shape everything you build — from how systems behave to how they grow.

Ready to ditch old AI add-ons and dive into intelligent fabrics? This approach lets you unlock blue-ocean opportunities by turning your infrastructure into a living, breathing partner for innovation.

Quotable nuggets to share:

- “AI-native architectures turn software into a living organism — adapting, optimizing, and evolving constantly.”

- “The future isn’t AI on the sidelines; it’s intelligence woven into the very fabric of your systems.”

Unlock your system’s full potential by making AI the foundation — not the afterthought.

Conclusion

AI-native architectures aren’t just the future—they’re the foundation for truly intelligent, adaptable systems that work as proactive partners in your business. By embedding AI at the core, you unlock a continuous feedback loop of learning, responsiveness, and scalability that keeps you ahead in an ever-changing digital landscape.

Building with AI-native principles means your systems don’t just respond—they anticipate, optimize, and evolve in real time. This shift empowers startups and SMBs to compete smarter, innovate faster, and deliver experiences that users truly value.

To start making AI-native architecture work for you today:

- Design your data pipelines for real-time, event-driven flow, ensuring clean, accessible data that feeds continuous learning.

- Leverage hybrid compute between edge and cloud to balance speed with scale and control costs.

- Automate model retraining and monitoring to maintain accuracy without manual overload.

- Embed security, ethical governance, and explainability from day one to build trust and stay compliant.

- Adopt modular, elastic architectures that grow with your needs and reduce complexity over time.

Take bold steps now by auditing your current architectures against these principles. Start small with focused AI integration projects that prove value, then scale intelligently while empowering cross-functional teams to own the AI journey end-to-end. Remember, sustainable innovation comes from iteration, not perfection.

“AI-native isn’t just tech—it’s a mindset shift turning your systems into living partners that learn, adapt, and accelerate your success.”

Put AI at your system’s heart and watch your business move from reactive to revolutionary. The smartest time to get started is now.