AI Tool Not Working? Universal Troubleshooting Checklist 2025

Key Takeaways

Navigating AI tool failures doesn’t have to be overwhelming. This checklist guides you through fast, focused fixes that save time and money. Whether you’re troubleshooting basics or diving deep into data and model issues, these actionable insights help you restore reliability and scale confidently. Before working through the detailed steps, take a moment to consider the big picture of your AI system’s architecture and requirements so your efforts stay strategically aligned. In practice, effective troubleshooting usually starts with verifying prompt clarity, checking data quality, and ruling out network connectivity issues.

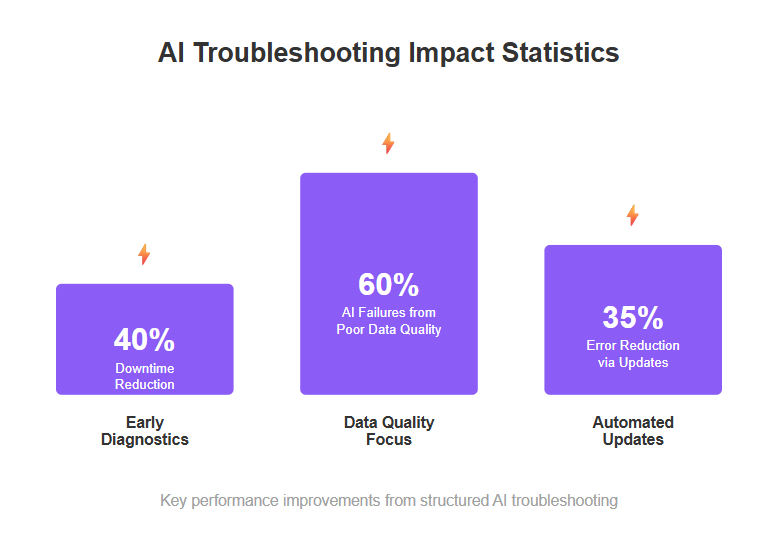

- Start troubleshooting with core diagnostics by checking network connectivity, software versions, and hardware health to resolve up to 40% of common AI tool errors quickly.

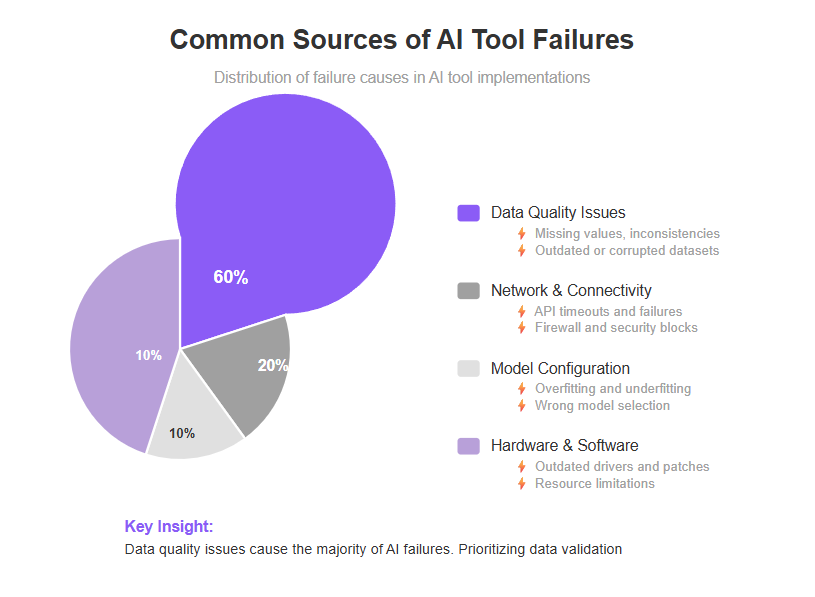

- Prioritize data quality: Clean, validate, and augment datasets regularly using a mix of manual checks and automated tools to prevent the poor-input problems behind an estimated 60% of AI failures.

- Balance model complexity by avoiding overfitting and underfitting with regularization and cross-validation, tailoring model size to your dataset for both performance and interpretability. Overfitting happens when a model learns the training data too well and performs poorly on unseen data, making regularization and cross-validation essential.

- Boost AI performance and deployment by profiling resource use, optimizing code, and leveraging platforms like AWS SageMaker or Docker to reduce processing times by up to 40%.

- Let AI handle the heavy lifting: AI tools are designed to parse instructions, generate tasks, and automate complex processes. Effective troubleshooting keeps these core, labor-intensive functions efficient and reliable.

- Detect and mitigate bias early using frameworks like IBM AI Fairness 360; build diverse datasets and ensure transparent model decisions for fairer, more trusted AI.

- Secure your AI pipeline with adversarial training, input validation, and access controls to prevent costly data poisoning and unauthorized access.

- Implement structured maintenance by regularly analyzing logs for error patterns and automating updates, reducing AI errors by up to 35% and preventing downtime surprises.

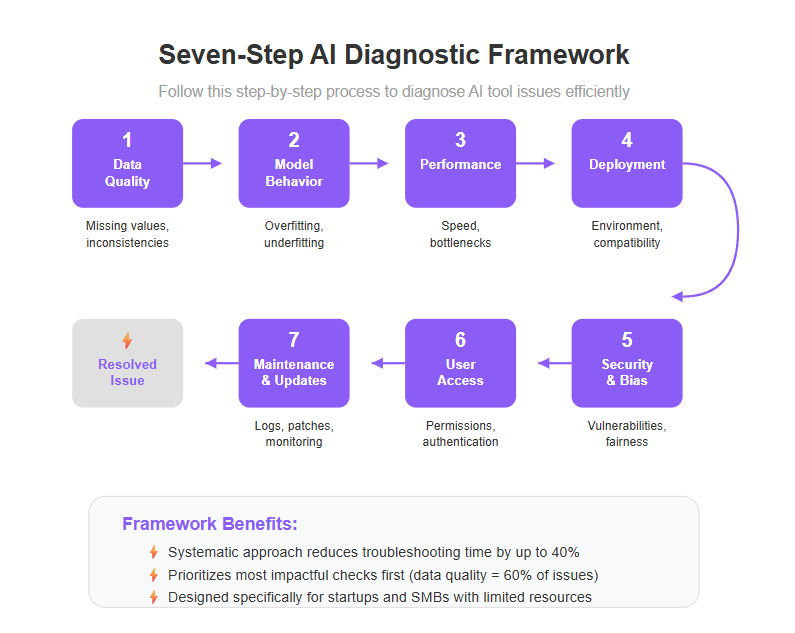

- Use a seven-step diagnostic framework focusing on data, model, performance, deployment, security, access, and maintenance to troubleshoot methodically and restore stability in 48 hours or less.

Mastering these essentials lays the foundation for AI tools that aren’t just functional but fast, fair, and future-ready. Dive into the full guide to unlock every step of this universal troubleshooting checklist, and keep one early lesson in mind: many issues with generative AI tools stem from unclear or poorly structured prompts, so refining prompts is a key step toward effective AI performance.

Introduction

Ever hit a wall when your AI tool suddenly stops working right in the middle of a critical project? You’re not alone. For startups and SMBs racing against the clock, every minute of downtime can translate into lost revenue and frustrated clients. When troubleshooting AI tools, check the service’s status first, since high traffic or outages can affect functionality. Connected cloud services have their own prerequisites, too: if users cannot discover Universal Print printers, for example, verify that their PC is connected to Microsoft Entra ID.

What if you had a clear, step-by-step checklist to troubleshoot issues quickly, no matter your technical background? Imagine cutting your AI downtime by up to 40% and saving hours or even days of guesswork. Some fixes are immediate: restarting the application or refreshing the web page often resolves temporary glitches. For code-related problems, automatic collection of browser logs is especially helpful when debugging.

This guide delivers practical solutions to help you spot common pitfalls and take control of your AI tools. The checklist is designed to help you identify and overcome key hurdles that often block effective troubleshooting of AI tool failures.

You’ll learn how to:

- Identify early warning signs like slow responses and inconsistent outputs

- Rule out simple causes (think network glitches or outdated software) before things spiral

- Tackle data quality, model behavior, deployment, and security—all with actionable insights

Focused on startups and SMBs, this checklist respects your limited resources and need for speed. It’s designed to empower you with a systematic troubleshooting mindset that turns confusion into clarity.

Ready to transform headaches into smooth AI performance? The first step starts with understanding the key signs your tool isn’t firing on all cylinders—and how to diagnose them efficiently.

Diagnosing AI Tool Failures: Core Issues and Initial Checks

AI tools acting up? Start by spotting the most common symptoms that indicate they’re not firing on all cylinders.

Watch for:

- Slow or unresponsive interfaces when interacting with the tool

- Unexpected errors or crashes during routine tasks

- Outputs that feel “off” or are inconsistent from one run to the next

- Network connectivity problems: server outages and connectivity errors are a common cause of AI tool failures. When you encounter error codes or access failures, check for ongoing outages and verify that your network is stable. The same applies to connected devices; if a printer shows as offline on a user PC, for example, verify that the printer is online on the connector PC.

Understanding the expected behavior of your AI tool is crucial for distinguishing between normal operation and actual failures.

Adopt a Systematic Troubleshooting Mindset

Instead of guessing, approach every glitch like a detective.

Focus on:

- Breaking down the problem into smaller components (network, data, compute)

- Documenting what changed recently to ensure that root causes are quickly identified

- Prioritizing checks that minimize downtime and cost—time is money, especially for SMBs

In fact, studies show that early diagnostics can reduce downtime by up to 40% in AI deployments, saving hundreds of dollars per incident.

Involving the whole team in documenting changes and sharing insights leads to faster and more comprehensive troubleshooting.

Quick Wins: Verify Your Basics First

Before digging deep, rule out simple but crucial causes with these fast checks:

- Network connectivity: Is your AI tool properly connected? Even a flaky Wi-Fi can disrupt cloud AI services.

- Software versions: Outdated patches invite bugs. Confirm your app and AI framework are up to date.

- Installation status: Verify that all necessary software and hardware components are properly installed. If any required component is missing, follow the official instructions to install it before proceeding. Installation issues are a common source of problems.

- Hardware status: Are your GPUs or CPUs overloaded or overheating? This can cause unpredictable slowdowns.

Running these checks in order often restores AI tools swiftly, without extra cost or delays.
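The first two quick checks can be scripted. The sketch below, in Python using only the standard library, tests whether a TCP connection to a host succeeds and compares dotted version strings numerically. The host `api.example.com` and the minimum version are placeholders, not values from any specific AI service, and the version parser skips pre-release tags such as `rc1`, so treat it as a rough check rather than a full version resolver.

```python
import socket
import sys


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # DNS failures, refused connections, and timeouts are all OSErrors.
        return False


def version_tuple(version: str) -> tuple:
    """Turn '2.1.10' into (2, 1, 10); non-numeric parts like '0rc1' are skipped."""
    return tuple(int(part) for part in version.split(".") if part.isdigit())


def is_at_least(installed: str, minimum: str) -> bool:
    """Compare versions numerically, so '1.10' counts as newer than '1.9'."""
    return version_tuple(installed) >= version_tuple(minimum)


if __name__ == "__main__":
    # Placeholder endpoint: replace with your AI provider's API host.
    print("API reachable:", can_reach("api.example.com", 443))
    current = ".".join(str(n) for n in sys.version_info[:3])
    print("Python is at least 3.9:", is_at_least(current, "3.9"))
```

Comparing tuples of integers avoids the classic string-comparison trap where "1.10" sorts before "1.9".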

Picture this:

Imagine rushing to fix a complex machine only to discover its power cable was loose. That’s why starting with straightforward diagnostics saves frustration and lost productivity. When troubleshooting, always check for any error message displayed by the AI tool, as it can provide immediate clues to the underlying issue.

Cloud services connected to your workflow have basics of their own. With Microsoft Universal Print, for example:

- Users cannot access Universal Print, even with an eligible license, if the license does not include the Universal Print Service plan.

- When users experience errors related to print jobs, check the print job status in the user PC’s printer queue.

- After verifying the printer is online on the connector PC, make sure the printer is installed locally on the PC running the connector; only locally installed printers are visible and eligible for registration.

If your network’s suspect, check out 5 Strategic Network Checks to Restore AI Tool Connectivity for hands-on tips on avoiding pitfalls like firewall blocks or DNS errors.

Getting these essentials right sets the stage for deeper troubleshooting.

Kick off every AI issue with clear, focused checks that can unblock your workflow fast.

Remember: small fixes often prevent big headaches down the road—so start simple, stay sharp, and build from there.

Setting Up Troubleshooting Tools for Success

Troubleshooting is a core skill for anyone working with AI tools, and your success often hinges on having the right setup from the start. A structured troubleshooting toolkit or framework speeds up problem solving and reduces manual effort and frustration when things go wrong. Here’s a step-by-step guide to building a toolkit that helps you identify, analyze, and fix issues in any AI system.

Keep the data side in view as well. The quality of the data used to train an AI model plays a critical role in its performance and accuracy, making data preparation a vital step. Data drift, where real-world data patterns change over time, can also degrade model accuracy, so ongoing monitoring and updates matter. On the code side, generating prompts from detailed bug reports turns unstructured feedback into actionable instructions for AI coding assistants.

Essential Tools Every Troubleshooter Needs

To tackle issues with AI-generated code effectively, you need a solid foundation of tools that streamline debugging and foster collaboration. Log analyzers, version control systems, and document management platforms are essential for comprehensive troubleshooting, and managing workflows consistently across these tools keeps team members aligned. Developers can also use AI prompts to generate detailed bug reports: a well-structured report includes all the relevant technical details for an AI coding tool to process, which makes fixes faster and more accurate. Some code debugging AI tools gather data such as CSS selectors, browser information, and console logs automatically to improve bug-fixing accuracy.

Here’s what every troubleshooter should have in their arsenal:

- AI coding tools and code editors: editors like Visual Studio Code or JetBrains PyCharm, paired with an AI coding tool, help automate error detection and streamline debugging. Webvizio, for example, provides an AI code fixer that can resolve debugging problems in seconds.

- IDE integrations: if an IDE reports a ‘Tool not found’ error, check whether it can communicate with the TaskMaster AI back-end.
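Here is a sketch of the bug-report-to-prompt step in Python. The `BugReport` fields mirror the kinds of details this section mentions (reproduction steps, browser or environment information, console logs), but the field names and prompt wording are assumptions for illustration, not the format of any particular tool such as Webvizio.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    """Hypothetical bug-report structure; adapt the fields to your tracker."""
    title: str
    expected: str
    actual: str
    steps: list = field(default_factory=list)
    environment: dict = field(default_factory=dict)  # e.g. browser, OS, versions
    console_log: str = ""


def to_prompt(report: BugReport) -> str:
    """Render a bug report as a structured prompt for an AI coding assistant."""
    steps = "\n".join(f"{i}. {step}" for i, step in enumerate(report.steps, start=1))
    env = "\n".join(f"- {key}: {value}" for key, value in report.environment.items())
    return (
        f"Fix this bug: {report.title}\n\n"
        f"Steps to reproduce:\n{steps or 'not provided'}\n\n"
        f"Expected: {report.expected}\n"
        f"Actual: {report.actual}\n\n"
        f"Environment:\n{env or '- not provided'}\n\n"
        f"Console output:\n{report.console_log or 'none captured'}\n\n"
        "Explain the likely root cause before proposing a minimal code change."
    )


if __name__ == "__main__":
    report = BugReport(
        title="Checkout button does nothing",
        expected="Order is submitted",
        actual="No response; console shows an error",
        steps=["Add an item to the cart", "Click Checkout"],
        environment={"browser": "Firefox 128", "os": "macOS 14"},
        console_log="TypeError: cart.total is undefined",
    )
    print(to_prompt(report))
```

Explicit "not provided" placeholders make gaps in a report visible to both the assistant and the person filing it.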

Configuring Your Environment for Effective Diagnosis

Understanding and Resolving AI Code Issues

Common Coding Pitfalls in AI Projects

Ensuring Data Quality for Reliable AI Performance

Identifying Data-Related Problems

Missing values, inconsistencies, and inaccuracies are like potholes on your AI’s road to success: they slow down or derail your model’s accuracy. Output problems such as hallucinations, where the model generates confident but incorrect information, are a further reason to invest in robust data validation and monitoring.

Startups and SMBs often wrestle with:

- Incomplete datasets due to sporadic data collection

- Conflicting information from multiple sources

- Outdated or manually entered data prone to human error

- Inconsistencies and missing values found within documents such as PDFs, Word files, and spreadsheets; reviewing these documents is essential for comprehensive data quality checks

Running more than one AI instance can help focus on different aspects of data quality analysis, allowing each instance to address specific issues such as missing values, inconsistencies, or inaccuracies independently.

These issues directly reduce AI model reliability and increase error rates, leading to wasted time and lost confidence.
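A quick profile makes these problems measurable before any cleaning starts. This Python sketch assumes records arrive as dictionaries (for example from `csv.DictReader`) and counts missing required fields, exact duplicate rows, and keys, such as a customer ID, that appear with conflicting values. The field names are hypothetical; pandas offers equivalents like `isna()` and `duplicated()` for larger tables.

```python
from collections import Counter


def profile_records(records, required_fields, key_field):
    """Count missing required values, exact duplicate rows, and conflicting keys."""
    missing = Counter()
    for row in records:
        for name in required_fields:
            if row.get(name) in (None, ""):
                missing[name] += 1

    # Rows become hashable tuples so identical rows can be counted.
    as_tuples = [tuple(sorted(row.items())) for row in records]
    duplicates = sum(n - 1 for n in Counter(as_tuples).values() if n > 1)

    # A key (e.g. a customer ID) seen with different values signals a conflict.
    versions = {}
    for row, frozen in zip(records, as_tuples):
        versions.setdefault(row.get(key_field), set()).add(frozen)
    conflicts = [key for key, seen in versions.items() if len(seen) > 1]

    return {"missing": dict(missing), "duplicates": duplicates, "conflicting_keys": conflicts}


if __name__ == "__main__":
    records = [
        {"id": "c1", "email": "a@x.com", "country": "US"},
        {"id": "c1", "email": "a@x.com", "country": "US"},  # exact duplicate
        {"id": "c2", "email": "", "country": "DE"},         # missing email
        {"id": "c3", "email": "c@x.com", "country": "FR"},
        {"id": "c3", "email": "c@x.com", "country": "BE"},  # conflicting source
    ]
    print(profile_records(records, ["id", "email"], "id"))
```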

Effective Data Cleaning Strategies

Tackling data quality needs both manual review and automation to prepare AI-ready datasets.

Key practices include:

- Remove or fill missing data based on context-sensitive rules

- Standardize formats (dates, currencies) to avoid confusion

- Use automated tools like OpenRefine, Trifacta, or Python libraries (Pandas, Dedupe) for bulk cleaning

- Set up ongoing, systematic data validation and cleaning workflows to catch errors early

A practical tip: start simple with regular spot checks, then scale up automations as you grow.
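Here is a minimal sketch of context-sensitive cleaning in plain Python: it normalizes dates to ISO 8601, strips currency formatting, and fills only the fields you explicitly give defaults for. It assumes string values as read from a CSV file. The accepted date formats, the day-first reading of `03/02/2024`, and the field names `signup_date`, `revenue`, and `plan` are assumptions for illustration; tools like OpenRefine or pandas would handle the same steps at scale.

```python
from datetime import datetime

# Assumed source formats; "%d/%m/%Y" reads 03/02/2024 as 3 February (day first).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def to_iso_date(value):
    """Return YYYY-MM-DD, or None when no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def to_amount(value):
    """'$1,200.50' -> 1200.5; assumes ',' is a thousands separator, not a decimal."""
    cleaned = value.replace("$", "").replace("€", "").replace(",", "").strip()
    try:
        return float(cleaned) if cleaned else None
    except ValueError:
        return None


def clean_row(row, defaults):
    """Standardize formats, then fill only the fields that have an agreed default."""
    out = dict(row)
    out["signup_date"] = to_iso_date(row.get("signup_date") or "")
    out["revenue"] = to_amount(row.get("revenue") or "")
    for name, fallback in defaults.items():
        if out.get(name) in (None, ""):
            out[name] = fallback
    return out


if __name__ == "__main__":
    raw = {"signup_date": "03/02/2024", "revenue": "$1,200.50", "plan": ""}
    print(clean_row(raw, {"plan": "free"}))
```

Values that fail to parse become `None` rather than a guess, so they surface in the next validation pass instead of silently skewing the data.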

Handling Insufficient Data Challenges

When data feels scarce, be creative—this is where clever fixes really pay off.

Try these proven approaches:

Data Augmentation: Slightly tweak existing data, such as adding noise to images or swapping synonyms in text, to multiply your dataset size without new collection. Sharing concrete examples of these techniques helps teams apply them consistently.

Synthetic Data Generation: Use tools like GANs (Generative Adversarial Networks) to fabricate realistic new samples, ideal for privacy-sensitive applications or rare events. Worked examples of synthetic data generation clarify the process and help users apply it correctly.

Transfer Learning: Borrow from pre-trained AI models on large datasets and fine-tune them to your niche data—cutting training time and improving accuracy with less input data.

For example, an SMB focusing on customer service chatbots can drastically reduce startup time by leveraging a pre-trained language model rather than building from scratch.
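The text-augmentation idea can be sketched in a few lines. This Python example assumes a tiny hand-written synonym table (a placeholder, not a real lexical resource), swaps words using a fixed random seed so results are reproducible, and includes a small Gaussian-noise helper for numeric features. Augmented text still needs a spot check, since a synonym that fits one context can change the meaning in another.

```python
import random

# Tiny illustrative synonym table; not a real lexical resource.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "issue": ["problem", "bug"],
    "help": ["assist", "support"],
}


def synonym_swap(text, rng, swap_prob=0.5):
    """Replace known words with a random synonym; other words stay unchanged."""
    words = []
    for word in text.split():
        options = SYNONYMS.get(word.lower())
        if options and rng.random() < swap_prob:
            words.append(rng.choice(options))
        else:
            words.append(word)
    return " ".join(words)


def add_noise(values, rng, scale=0.01):
    """Jitter numeric features with Gaussian noise proportional to each value."""
    return [v + rng.gauss(0.0, abs(v) * scale) for v in values]


def augment(texts, copies, seed=42):
    """Return the originals plus `copies` augmented variants of each text."""
    rng = random.Random(seed)  # fixed seed keeps the output reproducible
    augmented = list(texts)
    for _ in range(copies):
        augmented.extend(synonym_swap(t, rng) for t in texts)
    return augmented


if __name__ == "__main__":
    for line in augment(["quick help with an issue"], copies=3):
        print(line)
```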

Regularly clean, validate, and augment your datasets to lower the risk of hidden errors bubbling up in production. It’s like tuning a race car—every detail counts in performance.

Quotable insights:

- “Poor data is the silent deal-breaker behind most AI tool failures.”

- “Automate your data cleaning early to save hours, headaches, and lost revenue.”

- “When data runs low, augment, synthetic-generate, or leverage transfer learning—don’t just hope for more.”

Picture this: your AI tool confidently predicting customer needs because you’ve cleared the data roadblocks ahead of time—no surprises, just smooth performance.

Data quality isn’t a one-time fix—it’s your ongoing ticket to reliable AI that scales with your business.

Balancing Model Complexity: Overfitting, Underfitting, and Interpretability

Understanding Model Behavior and Pitfalls

Think of overfitting like memorizing answers to a quiz instead of understanding the subject—you nail training data but flop on new info.

In contrast, underfitting is like giving a half-baked answer: your model misses key patterns, performing poorly everywhere.

Both scenarios hurt AI tool effectiveness: overfitting causes fragile predictions, while underfitting leaves your tool clueless. Whichever you face, you also need to be able to explain model behavior to stakeholders so they understand how and why decisions are made.

Practical Solutions for Model Optimization

To fix overfitting, apply regularization techniques such as L1 (which promotes sparsity) and L2 (which shrinks coefficients).

Cross-validation is your friend here—it repeatedly tests your model on different data splits to ensure it generalizes well. Make sure to run tests on each split to verify model robustness.
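To make the two ideas concrete, the sketch below pairs k-fold cross-validation with the simplest L2-regularized model: a one-feature slope through the origin, where the penalty `alpha` shrinks the coefficient toward zero. It is a dependency-free illustration, not production code; in practice you would typically reach for scikit-learn's `KFold` and `Ridge`, and shuffle the data before splitting, since the folds here are contiguous.

```python
def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds; yields (train_idx, test_idx)."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, test
        start += size


def fit_ridge_1d(xs, ys, alpha):
    """L2-regularized slope through the origin: w = sum(x*y) / (sum(x^2) + alpha)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + alpha)


def cross_val_mse(xs, ys, alpha, k=5):
    """Average held-out mean squared error across k folds."""
    errors = []
    for train, test in k_fold_indices(len(xs), k):
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], alpha)
        errors.append(sum((ys[i] - w * xs[i]) ** 2 for i in test) / len(test))
    return sum(errors) / len(errors)


if __name__ == "__main__":
    xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
    ys = [2.1, 3.9, 6.2, 8.1, 9.8, 12.3, 13.9, 16.2, 18.1, 19.7]
    for alpha in (0.0, 1.0, 10.0, 100.0):
        print(f"alpha={alpha:>6}: CV MSE {cross_val_mse(xs, ys, alpha):.3f}")
```

Comparing `cross_val_mse` across several `alpha` values and keeping the one with the lowest held-out error is the basic tuning loop: too little regularization risks overfitting, too much underfits.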

Select model complexity based on your dataset size and use case:

- Small data? Simpler models avoid overfitting.

- Large, complex data? Deeper models may capture nuances better.

For example, startups with limited data benefit from logistic regression with L2 regularization more than from a deep neural net.

Beyond model choice, improving AI model performance may also require adjustments such as changing the temperature setting of a generative model or tweaking other hyperparameters.
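As a rough illustration of what a temperature setting does, the sketch below applies temperature-scaled softmax to a list of raw scores. Many generative AI services expose temperature as a sampling parameter that works along these lines, but exact implementations vary by provider, so treat this as the underlying math rather than any specific service's behavior. Lower values concentrate probability on the top choice (more deterministic output); higher values spread it out.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities; lower temperature means sharper."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    scores = [2.0, 1.0, 0.1]
    for t in (0.5, 1.0, 1.5):
        probs = softmax_with_temperature(scores, t)
        print(t, [round(p, 3) for p in probs])
```

Running it shows the first option's share shrinking as the temperature rises, which is why lower temperatures tend to give more repeatable answers.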

Making AI Models Transparent and Trustworthy

Ever wondered why your AI made a weird prediction? Explainable AI tools like SHAP and LIME break down model decisions into intuitive feature impacts. Asking them the right question (which predictions, which features, which population) is essential to get clear, actionable explanations from your AI models.

Visualization tools—think heatmaps or decision trees—turn complex math into pictures, helping teams trust and debug AI.

Sometimes, simpler models outperform deep learning in interpretability and ease of maintenance—perfect for SMBs who need clarity and speed without a PhD.

- Use SHAP to quantify feature importance globally.

- Use LIME for local explanations on individual predictions.

Picture this: your marketing AI flags a customer churn risk; SHAP explains it’s mostly due to recent customer support tickets, making the insight actionable fast.
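SHAP and LIME are separate libraries with their own APIs. As a dependency-free stand-in for the same question (which features drive predictions?), the sketch below implements permutation importance, a different and simpler global technique: shuffle one feature column and measure how much accuracy drops. The `predict` function and list-of-lists `rows` format are assumptions for illustration.

```python
import random


def permutation_importance(predict, rows, targets, n_features, seed=0):
    """Accuracy drop when each feature column is shuffled (bigger = more important)."""
    def accuracy(data):
        return sum(predict(r) == t for r, t in zip(data, targets)) / len(targets)

    rng = random.Random(seed)
    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(shuffled))
    return importances


if __name__ == "__main__":
    data_rng = random.Random(1)
    # Hypothetical churn data: feature 0 = recent support tickets (scaled), feature 1 = noise.
    rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
    targets = [1 if r[0] > 0.5 else 0 for r in rows]
    model = lambda r: 1 if r[0] > 0.5 else 0  # stand-in for a trained model
    print(permutation_importance(model, rows, targets, n_features=2))
```

Unlike SHAP and LIME, this does not explain individual predictions; it only ranks features across the whole dataset.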

“Overfitting is the silent productivity killer; regularization and cross-validation are your best defenses.”

“Explainability tools turn AI black boxes into transparent partners you can actually trust.”

Optimizing model complexity isn’t just academic—it’s about striking a balance that keeps your AI tool reliable, fast, and understandable for real-world use.

Performance and Deployment: Speed, Scalability, and Stability

Diagnosing AI Performance Bottlenecks

AI tools often slow down or choke because of inefficient algorithms, resource limitations, or scalability issues.

Start by profiling your model’s runtime to spot bottlenecks—look for heavy CPU or GPU usage and high memory consumption. AI systems often do the heavy lifting in parsing data and generating outputs, so identifying where this demanding work occurs is crucial for optimization. Ensure that API usage limits are adhered to, as exceeding them can lead to request errors.
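Python's built-in `cProfile` and `pstats` modules are one way to do this profiling without extra tools. In the sketch below, `run_inference` and `slow_preprocess` are hypothetical stand-ins for your own pipeline; the report lists the functions with the highest cumulative time, which is usually where optimization effort pays off first. GPU and memory profiling need other tools and are not covered here.

```python
import cProfile
import io
import pstats


def slow_preprocess(texts):
    # Deliberately inefficient: builds each string one character at a time.
    out = []
    for text in texts:
        lowered = ""
        for ch in text:
            lowered += ch.lower()
        out.append(lowered)
    return out


def run_inference(texts):
    cleaned = slow_preprocess(texts)
    return [len(t) for t in cleaned]  # stand-in for a real model call


def profile_call(func, *args, top=5):
    """Run func under cProfile and return (result, report of top entries)."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()
    buffer = io.StringIO()
    pstats.Stats(profiler, stream=buffer).sort_stats("cumulative").print_stats(top)
    return result, buffer.getvalue()


if __name__ == "__main__":
    _, report = profile_call(run_inference, ["Hello World"] * 1000)
    print(report)
```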

Tools like Webvizio can cut debugging time from hours to minutes, but for performance itself, the immediate fixes include:

- Optimizing code for efficiency

- Reducing model size or complexity

- Implementing batch processing or asynchronous operations
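Batch processing is often the cheapest of these fixes. This sketch groups inputs into fixed-size batches so a model or API is called once per batch rather than once per item; `predict_batch` is a hypothetical placeholder for your real model call, and the right batch size depends on memory limits and any API rate limits you face.

```python
from itertools import islice


def batched(items, batch_size):
    """Yield lists of up to batch_size items (Python 3.12+ also has itertools.batched)."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def predict_batch(batch):
    # Hypothetical model call: one request per batch instead of one per item.
    return [len(text) for text in batch]


def predict_all(texts, batch_size=32):
    results = []
    for batch in batched(texts, batch_size):
        results.extend(predict_batch(batch))
    return results


if __name__ == "__main__":
    print(predict_all(["short", "a bit longer", "the longest input here"], batch_size=2))
```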

For example, switching from a bulky deep network to a lighter model can cut processing time by 40%, giving your AI tool a speed boost without sacrificing accuracy.

“An AI model that runs fast keeps your projects moving and your clients happy.”

Deployment Hurdles and Solutions

Deploying AI isn’t plug-and-play—platform selection and environment setup matter.

Top cloud platforms like AWS SageMaker, Google AI Platform, and Azure ML offer scalable, managed services that simplify deployment hassles.

Containerization with Docker is a lifesaver here: it packages your AI model and dependencies, so what runs on your laptop runs identically in production. Before deploying, always verify that your deployment environment meets all prerequisites to ensure smooth operation.
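A pre-deployment check like the following can fail fast before a container or server starts serving traffic. It is a sketch under assumptions: the minimum Python version, the module names `numpy` and `onnxruntime`, and the `MODEL_PATH` environment variable are hypothetical placeholders for whatever your model actually requires. In a Docker-based setup, a script like this could run at container start.

```python
import importlib.util
import os
import sys

# Hypothetical requirements; take the real values from your model's documentation.
MIN_PYTHON = (3, 9)
REQUIRED_MODULES = ("numpy", "onnxruntime")
REQUIRED_ENV_VARS = ("MODEL_PATH",)


def check_prerequisites(min_python=MIN_PYTHON, modules=REQUIRED_MODULES,
                        env_vars=REQUIRED_ENV_VARS):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    if sys.version_info[:2] < tuple(min_python):
        problems.append(f"Python {min_python[0]}.{min_python[1]}+ required")
    for name in modules:
        # find_spec returns None when a top-level module is not installed.
        if importlib.util.find_spec(name) is None:
            problems.append(f"missing module: {name}")
    for var in env_vars:
        if not os.environ.get(var):
            problems.append(f"missing environment variable: {var}")
    return problems


if __name__ == "__main__":
    issues = check_prerequisites()
    print("Ready to deploy" if not issues else "Blocked:\n" + "\n".join(issues))
```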

Also, CI/CD pipelines tailored for AI let you:

- Push new model versions seamlessly

- Roll back quickly if something breaks

- Automate testing and validation of updates
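Automated testing before release often comes down to a gate that compares a candidate model with the one in production. This sketch assumes metrics where higher is better (accuracy, recall) and a hypothetical tolerance of 0.01; a CI job would run it after evaluation and stop the release when the decision is `keep_current`. The metric names and values are illustrative.

```python
def promotion_decision(candidate, production, tolerance=0.01):
    """Promote only if no production metric regresses by more than `tolerance`.

    Both arguments map metric names to higher-is-better scores.
    Returns ("promote", []) or ("keep_current", [reasons]).
    """
    reasons = []
    for name, prod_value in production.items():
        cand_value = candidate.get(name)
        if cand_value is None:
            reasons.append(f"{name}: missing from candidate evaluation")
        elif cand_value < prod_value - tolerance:
            reasons.append(f"{name}: {cand_value:.3f} vs {prod_value:.3f} in production")
    return ("keep_current", reasons) if reasons else ("promote", [])


if __name__ == "__main__":
    decision, reasons = promotion_decision(
        {"accuracy": 0.91, "recall": 0.80},
        {"accuracy": 0.90, "recall": 0.84},
    )
    print(decision, reasons)
```

Keeping the current version whenever any tracked metric regresses makes rollbacks rarer, because weaker candidates never reach production in the first place.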

Picture this: an automated pipeline that updates your AI workflow overnight, freeing your mornings for creative problem-solving.

Hardware and Compatibility Considerations

Hardware can be the silent culprit behind AI tool crashes and lag.

Updated drivers, compatible GPUs, and matching hardware specs with software requirements are crucial for stability.

Tips to maximize hardware-software harmony:

- Keep drivers and firmware current—ensure you have the latest version installed

- Choose hardware tested with your AI frameworks

- Monitor system resource allocation to avoid overload
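For the monitoring tip, this standard-library sketch flags two common overload signals: a one-minute CPU load average above a chosen fraction of the core count (on platforms that provide `os.getloadavg`, which excludes Windows) and low free disk space. The thresholds are assumptions to tune for your system. GPU temperature and utilization need vendor tools such as NVIDIA's `nvidia-smi`, so they are left out.

```python
import os
import shutil


def resource_warnings(path=".", max_load_per_cpu=0.9, min_free_fraction=0.1):
    """Return alerts for CPU overload and low disk space (standard library only)."""
    alerts = []
    cpus = os.cpu_count() or 1
    if hasattr(os, "getloadavg"):  # Unix-like systems only; absent on Windows
        try:
            one_minute = os.getloadavg()[0]
        except OSError:
            one_minute = 0.0  # load average unavailable on this system
        if one_minute / cpus > max_load_per_cpu:
            alerts.append(f"CPU load {one_minute:.2f} on {cpus} cores")
    usage = shutil.disk_usage(path)
    free_fraction = usage.free / usage.total
    if free_fraction < min_free_fraction:
        alerts.append(f"only {free_fraction:.0%} disk space free at {path}")
    return alerts


if __name__ == "__main__":
    print(resource_warnings() or "No resource warnings")
```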

Curious how this magic works? Check out Why Hardware Compatibility Is Revolutionizing AI Tool Stability.

“Aligning your hardware with software specs is like tuning a race car before the big race—it makes all the difference.”

Matching these elements can slash troubleshooting time and boost uptime significantly.

Performance and deployment go hand in hand—speedy AI models with smooth releases and the right hardware backbone create reliable, scalable tools that power your startup or SMB forward.

Addressing Bias, Fairness, and Security in AI Tools

Detecting and Mitigating Bias

Biased AI models aren’t just unfair—they can tank your product’s credibility and alienate users. Bias often creeps in through unrepresentative datasets or flawed assumptions baked into algorithms. Fact-checking AI outputs is critical, especially for applications in sensitive areas like legal, medical, and academic fields, to ensure reliability and trustworthiness.

Start by using bias detection frameworks like IBM AI Fairness 360 or Fairlearn. These tools analyze your model’s decisions and highlight hidden biases.

Create diverse, balanced datasets by including varied demographics and scenarios. Leverage organizational knowledge to identify potential sources of bias and ensure your data reflects real-world diversity. This reduces the chance of skewed outcomes and opens doors to broader market appeal.

Follow simple ethical guidelines to shape fair AI, especially for startups and SMBs:

- Prioritize transparency in model decisions.

- Test outcomes on different user groups before launch.

- Document dataset sources and model assumptions openly.
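Before reaching for a full framework, two widely used group-fairness measures can be computed by hand, which helps when testing outcomes on different user groups. The sketch computes each group's selection rate, the demographic parity difference (the gap between the highest and lowest rate), and the ratio of lowest to highest rate, which the four-fifths rule of thumb flags below 0.8. Libraries such as Fairlearn and IBM AI Fairness 360 provide these and many more metrics; the binary 0/1 predictions and group labels here are assumptions for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(predictions, groups):
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "selection_rates": rates,
        # Demographic parity difference: gap between best- and worst-treated group.
        "parity_difference": highest - lowest,
        # Ratio of lowest to highest rate; values below 0.8 fail the four-fifths rule.
        "impact_ratio": lowest / highest if highest else 1.0,
    }


if __name__ == "__main__":
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(fairness_report(preds, groups))
```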

“Fair AI means happier users—and fewer costly missteps down the road.”

Securing AI Models Against Threats

Imagine someone subtly tweaking inputs so your AI makes wrong calls: that’s an adversarial attack. Or picture corrupted training data quietly poisoning your model’s brain. Event logs from the systems around your model help diagnose such problems; in a Universal Print deployment, for example, consulting the print connector event log can provide insights into errors.

Adversarial attacks and data poisoning are real risks for SMBs scaling AI fast. Guard against them with:

- Adversarial training: Teach your model to recognize and resist malicious input patterns.

- Input validation: Filter and verify data at every step to stop bad actors early.

- Continuous monitoring: Track model behavior to spot anomalies signaling attacks.
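Input validation is the easiest of these three defenses to start with. The sketch below checks a hypothetical JSON payload with `text` and `lang` fields against an allow-list: it rejects unknown fields, empty or oversized text, control characters, and unsupported languages. The field names and limits are placeholders. Validation blocks malformed and oversized requests, but a well-crafted adversarial input can still pass it, which is why monitoring and adversarial training remain necessary.

```python
# Hypothetical limits for a text-classification endpoint; adapt to your inputs.
MAX_TEXT_CHARS = 5_000
ALLOWED_FIELDS = {"text", "lang"}
ALLOWED_LANGS = {"en", "de", "fr"}


def validate_request(payload):
    """Return a list of problems; an empty list means the request can proceed."""
    if not isinstance(payload, dict):
        return ["payload must be a JSON object"]
    errors = []
    unexpected = set(payload) - ALLOWED_FIELDS
    if unexpected:
        errors.append(f"unexpected fields: {sorted(unexpected)}")
    text = payload.get("text")
    if not isinstance(text, str) or not text.strip():
        errors.append("text must be a non-empty string")
    elif len(text) > MAX_TEXT_CHARS:
        errors.append(f"text exceeds {MAX_TEXT_CHARS} characters")
    elif any(ord(ch) < 32 and ch not in "\n\t" for ch in text):
        errors.append("text contains control characters")
    lang = payload.get("lang", "en")
    if not isinstance(lang, str) or lang not in ALLOWED_LANGS:
        errors.append("unsupported lang")
    return errors


if __name__ == "__main__":
    print(validate_request({"text": "Where is my order?", "lang": "en"}))
    print(validate_request({"text": "", "debug": True}))
```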

Security best practices tailored for startups and SMBs include:

Regularly audit your AI pipelines for vulnerabilities.

Implement role-based access controls to limit insider threats.

Use encryption and secure storage for sensitive training data.

Leverage AI assistance to monitor for and respond to security threats, enhancing your ability to detect and mitigate risks quickly.

“Investing time in AI security early saves your brand from headaches—and costly breaches—later.”

Embedding Security Checks into Your AI Ops

Make security audits a recurring part of your AI lifecycle. Schedule quarterly reviews examining data integrity, model performance, and access logs.
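For the data-integrity part of these reviews, one low-cost approach is a hash manifest: record a SHA-256 hash of every training file when the dataset is approved, then compare at each audit. The sketch uses only the Python standard library, and the directory and manifest paths are up to you. It detects files that changed, appeared, or disappeared since the snapshot, which can surface tampering such as data poisoning, but it cannot tell you whether the original snapshot was clean.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path):
    """Hash a file in 64 KiB chunks so large datasets don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir):
    """Map each file's path (relative to data_dir) to its SHA-256 hash."""
    root = Path(data_dir)
    return {str(p.relative_to(root)): file_sha256(p) for p in sorted(root.rglob("*")) if p.is_file()}


def audit(data_dir, manifest_path):
    """Compare current hashes with a saved manifest."""
    saved = json.loads(Path(manifest_path).read_text())
    current = build_manifest(data_dir)
    return {
        "changed": sorted(f for f in saved.keys() & current.keys() if saved[f] != current[f]),
        "added": sorted(current.keys() - saved.keys()),
        "removed": sorted(saved.keys() - current.keys()),
    }
```

At approval time you would save `json.dumps(build_manifest("training_data"))` to a file stored outside the data directory, then call `audit` during each quarterly review.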

Encourage a proactive security culture: include your team in threat assessments and disaster recovery drills.

By actively hunting bias and fortifying against attacks, you make your AI tools more reliable, trustworthy, and ready to scale. Fairness and security aren’t optional; they’re foundational, and maintaining them takes constant vigilance.

Ultimately, spotting bias early and building strong defenses protects your AI’s value and your users’ trust—essential for startups betting on AI to grow fast and smart.

User Access and Software Maintenance Best Practices

Managing Permissions to Prevent Access Errors

User permission issues rank among the top reasons AI tools malfunction unexpectedly.

Common causes include:

- Users lacking the required roles to access certain features

- Incorrect role assignments causing conflicts

- Expired or revoked access tokens blocking authentication

Step-by-step secure configuration reduces downtime:

Define roles clearly based on job functions—no guesswork

Regularly audit permissions to prevent privilege creep

Maintain a permissions file to track and audit user access rights

Implement role-based access control (RBAC) for granular security

Use multi-factor authentication where possible to secure sensitive operations
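These steps map onto a small role-based access control sketch. The roles, permission names, and users below are hypothetical; `USER_ROLES` plays the part of the permissions file, access is denied by default, and `audit_permissions` lists each user's effective rights for periodic reviews. A production system would load these mappings from your identity provider rather than hard-coding them.

```python
# Hypothetical roles and permissions; derive yours from real job functions.
ROLE_PERMISSIONS = {
    "viewer": {"run_inference"},
    "analyst": {"run_inference", "view_logs"},
    "ml_engineer": {"run_inference", "view_logs", "deploy_model"},
    "admin": {"run_inference", "view_logs", "deploy_model", "manage_users"},
}

# Stand-in for the permissions file: user -> assigned roles.
USER_ROLES = {"dana": {"analyst"}, "lee": {"ml_engineer"}}


def is_allowed(user, permission, user_roles=USER_ROLES):
    """Deny by default: allow only if one of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set())
               for role in user_roles.get(user, set()))


def audit_permissions(user_roles=USER_ROLES):
    """List each user's effective permissions to spot privilege creep."""
    return {
        user: sorted(set().union(*(ROLE_PERMISSIONS.get(r, set()) for r in roles)))
        for user, roles in user_roles.items()
    }


if __name__ == "__main__":
    print(is_allowed("dana", "deploy_model"))
    print(audit_permissions())
```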

Imagine your AI tool as a busy office—only authorized team members should enter specific rooms to avoid chaos.

Misconfigured rights cost SMBs on average 20 hours monthly in lost productivity, so upfront role setup is a must-have.

Master User Permissions to Avoid AI Tool Access Errors is your go-to guide for zero-friction access management. The same rules apply to adjacent cloud services: to resolve credential prompts when discovering Universal Print printers, make sure users enter valid Microsoft Entra ID accounts that have permission to access Universal Print.

Importance of Timely Software Updates

Outdated software fuels common AI headaches:

- Compatibility errors between AI modules and system libraries

- Security vulnerabilities inviting attacks

- Performance degradation due to obsolete algorithms

Not every error is an update problem, though. To address the 'Unsupported document format' error when printing with Universal Print, for example, make sure document conversion is enabled for the target printer.

Best practices when updating:

- Schedule updates during low-usage windows to avoid disruption

- Test patches in staging environments before production rollout

- Automate update workflows to apply critical fixes swiftly and consistently

Statistics show companies embracing automated updates reduce AI tool errors by up to 35%, making proactive updates a smart investment. Maintaining updated versions of TaskMaster AI and related tools can mitigate many known bugs and issues, simplifying troubleshooting efforts.


For quick wins, check Essential Software Updates to Fix AI Tool Errors Fast—it’s like giving your AI tool a yearly health check without the wait.

Keeping user access seamless and software fresh is your frontline defense against unexpected AI tool failures.

Stay sharp on permissions and updates to keep your AI running efficiently and securely—because downtime is not an option for fast-moving startups and SMBs.

Leveraging Logs and Preventive Tactics for Long-Term AI Health

Using Log Files to Diagnose AI Issues

AI tool logs are your first line of defense when things go south. Look out for error signatures like memory leaks, failed API calls, and timeout events; these often reveal root causes faster than guessing. Service dashboards complement your logs: if a Universal Print job fails, for example, check whether the job appears in the printer jobs section of the Azure Portal to assess its status.


Even if you’re not a full-blown dev, basic log analysis is doable: track unusual spikes in error counts or repeated failure patterns.

Here’s what to focus on:

- Timestamped error messages signaling when failures start

- Resource usage logs that show memory or CPU bottlenecks

- API request and response codes flagging communication breakdowns

- Search log files for specific error codes or recurring patterns to quickly identify issues
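A few lines of Python are enough for this kind of log search. The sketch assumes a log line format of `YYYY-MM-DD HH:MM:SS LEVEL message` and a handful of regular-expression signatures for timeouts, memory failures, and HTTP 4xx/5xx codes; both the format and the patterns are assumptions to adapt to your tool's real logs. It counts matches per signature and per hour, which shows both what is failing and when it started.

```python
import re
from collections import Counter

# Assumed line format: "2025-01-14 09:12:03 ERROR message". Adjust the pattern
# and the signatures below to match your tool's real logs.
LINE = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}):\d{2}:\d{2} (\w+) (.*)$")
SIGNATURES = {
    "timeout": re.compile(r"timed? ?out", re.IGNORECASE),
    "memory": re.compile(r"out of memory|MemoryError|\bOOM\b", re.IGNORECASE),
    "api_error": re.compile(r"\b(?:HTTP|status)[ =:]*[45]\d\d\b", re.IGNORECASE),
}


def summarize(lines):
    """Count error signatures overall and per hour to see when failures start."""
    by_signature, by_hour = Counter(), Counter()
    for line in lines:
        match = LINE.match(line)
        if not match or match.group(2) not in ("ERROR", "WARNING"):
            continue  # skip malformed lines and informational messages
        hour, message = match.group(1), match.group(3)
        hits = [name for name, pattern in SIGNATURES.items() if pattern.search(message)]
        by_signature.update(hits)
        if hits:
            by_hour[hour] += 1
    return by_signature, by_hour


if __name__ == "__main__":
    sample = [
        "2025-01-14 09:12:03 ERROR Request to vendor API timed out after 30s",
        "2025-01-14 09:40:10 ERROR upstream returned HTTP 503",
        "2025-01-14 10:05:22 ERROR worker killed: out of memory",
    ]
    print(summarize(sample))
```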

For example, a startup we worked with spotted a recurring “timeout” entry in their logs pointing to a 3rd-party API delay — fixing that cut downtime by 40%.

Logs turn guesswork into insight — unlocking solutions sometimes hidden in plain sight.

Check out our deep dive on How Log Files Can Unlock AI Tool Problem Solutions for pro tips on log tools and analysis workflows.

Preventive Maintenance: Avoiding Future Failures

You don’t want to wait for the next failure to act — prevention is where the real magic happens.

Here are 6 essential tactics to keep your AI tools humming long-term:

Schedule regular system health checks focusing on usage and error trends

Automate updates and patch management to avoid software vulnerabilities

Continuously validate data inputs to prevent garbage-in garbage-out scenarios

Monitor model performance metrics to catch performance drift early

Implement access control audits minimizing user-related errors

Establish incident response protocols for faster issue resolution
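For the drift-monitoring tactic, one common measure is the Population Stability Index (PSI), which compares the distribution of a feature or model score in recent data with a baseline. This is a minimal sketch: it bins values using the baseline's range, clips out-of-range values into the edge bins, and floors empty bins to avoid `log(0)`. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be validated on your own data.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a recent sample."""
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            index = int((v - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clip out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    shifted = [0.5 + i / 200 for i in range(100)]
    print(f"stable: {psi(baseline, baseline):.3f}, shifted: {psi(baseline, shifted):.3f}")
```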

Build a culture that celebrates small wins around continuous improvement — it keeps everyone accountable and reduces nasty surprises.

Visualize a daily dashboard tracking these metrics like a race car pit crew monitoring the vehicle’s health mid-race — relentless, precise, proactive.

Grab more actionable advice at Top 6 Preventive Tactics to Keep AI Tools Running Seamlessly and start shifting from firefighting to foresight today.

Logs offer clarity in chaos, turning confusion into actionable insights.

Preventive care isn’t optional; it’s your best bet against costly AI downtime.

Think of your AI tools like plants — consistent care yields vibrant, lasting growth.

Structured Diagnostic Framework: Seven Proven Steps to Efficient Troubleshooting

When your AI tool starts acting up, a messy “spray and pray” approach wastes time and money. Instead, follow a stepwise diagnostic framework to pinpoint issues, identify and evaluate possible solutions, and fix problems fast.

Prioritize the Most Impactful Checks First

Start by systematically inspecting seven core areas:

Data Quality – Missing or corrupted data can tank your AI’s output.

Model Behavior – Look for overfitting, underfitting, or strange predictions.

Performance – Check for slow response times or memory bottlenecks.

Deployment Environment – Verify both the development environment and deployment environment, including container setups, cloud configs, and platform compatibility.

Security & Bias – Scan for vulnerabilities and biased outputs that could cause harm.

User Access – Permissions misconfigurations frequently block functionality.

Maintenance & Updates – Outdated software or neglected logs hide lingering issues.

This checklist targets startups and SMBs who juggle limited resources and need quick wins without sacrificing thoroughness.

Use Checklists and Decision Trees for Clear Guidance

Navigating troubleshooting is much easier with structured tools. Use decision trees that branch from symptoms (“Is your tool crashing or just slow?”) into tailored actions. Incorporate specific prompts at each step to guide users through troubleshooting, ensuring that each decision point leads to more precise and effective solutions. The six stages of the Universal Problem-Analysis Companion—Describe the Problem, Validate the Root Cause, Assess the Impact, Synthesize Diverse Perspectives, Propose Starting Points for Solutions, and Enable Continuous Problem-Solving—can also provide a structured framework for addressing complex issues. The Universal Problem-Analysis Companion can be used by anyone, from students to team leaders, to achieve better decision-making and outcomes. AI enhances the analysis process by synthesizing inputs from multiple stakeholders during problem escalation and solution proposal.
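A symptom decision tree can be as simple as nested yes/no questions. In the sketch below, the questions and recommendations are illustrative, drawn from the checks in this guide rather than any standard taxonomy; `diagnose` walks the tree with any answering function, so the same structure can back a command-line prompt, a chatbot, or a runbook page.

```python
# Illustrative tree: each dict asks a yes/no question; each string is a recommendation.
TREE = {
    "question": "Does the tool fail outright (crash, error message, no response)?",
    "yes": {
        "question": "Does the service status page or a network check show a problem?",
        "yes": "Wait out the outage or fix connectivity (DNS, firewall, Wi-Fi).",
        "no": "Read the error message and logs; check versions, installation, and permissions.",
    },
    "no": {
        "question": "Are responses slow but otherwise correct?",
        "yes": "Profile resource use; consider batching or a smaller model.",
        "no": "Outputs are wrong: check data quality, prompts, and model drift.",
    },
}


def diagnose(tree, ask):
    """Follow yes/no answers from ask(question) until reaching a recommendation."""
    node = tree
    while isinstance(node, dict):
        node = node["yes"] if ask(node["question"]) else node["no"]
    return node


if __name__ == "__main__":
    scripted = iter([False, True])  # "not failing outright", "slow but correct"
    print(diagnose(TREE, lambda question: next(scripted)))
```

For an interactive session, pass something like `lambda q: input(q + " [y/n] ").strip().lower() == "y"` as `ask`.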

Checklists also keep teams aligned:

- Verify data integrity before retraining models

- Run cross-validation to detect overfitting

- Confirm hardware specs and driver versions match vendor recommendations

This practical approach mimics real-world diagnostics at successful companies we’ve worked with—cutting downtime by up to 40%.

Real-World Example: Startup Saves Days of Work

Picture a SaaS startup whose AI-powered recommendation engine began underperforming unpredictably after an update. Applying this framework:

- They first checked data quality and found corrupted inputs.

- Next, cross-validation revealed overfitting due to a recent model tweak.

- Finally, container logs exposed deployment misconfigurations.

Within 48 hours, and with only a small in-house team, the AI was stable again. Because each root cause was fixed rather than patched over, the team was also far less likely to hit the same problem again.

Takeaways for Immediate Action

- Start with data checks; 60% of AI failures trace back to input problems.

- Use decision trees to avoid random fixes and find root causes faster.

- Integrate routine maintenance into your workflow, leveraging AI-assisted tools to automate checks and catch issues before they snowball.
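One routine check that is easy to automate is scanning logs for errors that keep recurring. The sketch below assumes a hypothetical `<timestamp> <LEVEL> <message>` line format and groups errors by replacing numbers with a placeholder, so repeats of the same failure are counted together. Adjust the regular expression to your actual log format.

```python
import re
from collections import Counter

# Hypothetical log format: "<timestamp> <LEVEL> <message>"
ERROR_LINE = re.compile(r"^\S+\s+(?:ERROR|CRITICAL)\s+(?P<msg>.+)$")


def recurring_errors(lines: list[str], min_count: int = 3) -> list[tuple[str, int]]:
    """Return error signatures seen at least min_count times, most frequent first."""
    counts = Counter()
    for line in lines:
        match = ERROR_LINE.match(line.strip())
        if match is None:
            continue  # skip INFO/WARNING lines and anything in another format
        # Replace numbers (durations, ids, ports) so repeats of one failure share a signature.
        signature = re.sub(r"\d+", "<n>", match.group("msg"))
        counts[signature] += 1
    return [(sig, n) for sig, n in counts.most_common() if n >= min_count]


log = [
    "2025-01-10T09:00:01 INFO request served in 120ms",
    "2025-01-10T09:00:02 ERROR timeout after 30s calling model endpoint",
    "2025-01-10T09:05:13 ERROR timeout after 31s calling model endpoint",
    "2025-01-10T09:10:40 ERROR timeout after 30s calling model endpoint",
    "2025-01-10T09:11:00 CRITICAL out of memory on worker 4",
]
print(recurring_errors(log))  # → [('timeout after <n>s calling model endpoint', 3)]
```

Scheduled daily, a script like this turns "the same problem keeps coming back" into a concrete, countable signal.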

When troubleshooting becomes a clear, repeatable process, fixing your AI tool is no longer a headache — it’s a checkpoint on your product’s growth path.

For a detailed breakdown of these steps and customizable templates, check out our sub-page: 7 Proven Steps to Diagnose Why Your AI Tool Fails.

Conclusion

Troubleshooting AI tools doesn’t have to feel like wandering through a maze blindfolded. With a clear, structured approach, you can quickly pinpoint issues and restore smooth operation, saving precious time and resources for your startup or SMB.

Focusing on the right areas—data quality, model behavior, performance, deployment environment, security, user access, and maintenance—gives you a reliable blueprint to solve problems methodically rather than guessing. This keeps your AI tools running efficiently, securely, and ready to scale with your growth.

Keep these actionable insights front and center as you tackle AI failures:

- Prioritize data health: Regularly clean, validate, and augment your datasets to prevent hidden roadblocks.

- Use decision trees and checklists to diagnose problems systematically, cutting troubleshooting time drastically.

- Automate updates and monitor logs to catch early warning signs before they escalate into downtime.

- Maintain clear user access controls and security audits to guard against unexpected interruptions.

- Optimize model complexity with cross-validation and explainability tools to build AI that’s both powerful and trustworthy.

Start by running the basic diagnostic checklist today and build routines around these key areas. Automate where possible, assign roles for regular audits, and treat AI troubleshooting as an ongoing process—not a one-off chore. Incorporating robust version control practices helps manage code changes efficiently and provides a reliable history for effective troubleshooting.

Remember, every small fix prevents big headaches later. The way you approach AI resilience today directly impacts your innovation speed tomorrow. Mastering AI troubleshooting is a game changer for business growth and resilience, giving startups and SMBs a decisive edge.

“Master your AI troubleshooting, and you master the future of your business—flexible, focused, and unstoppable.”

Your AI tools are ready to perform at their best—and so are you. Take charge, act decisively, and unlock the power of dependable AI to fuel your success.

Troubleshooting AI Integration Issues

Integrating AI tools with your existing systems and services can be a game changer, but it’s rarely plug-and-play. Whether you’re connecting AI-generated code to your CRM, automating workflows, or embedding AI models into your product, integration issues can quickly stall progress. The key to smooth AI integration is knowing how to identify, analyze, and resolve problems as they arise. Here’s a step-by-step guide to tackling the most common integration hurdles, so your AI tools work seamlessly with the rest of your tech stack.

Pinpointing Integration Points of Failure

When AI integration fails, the first step is to zero in on where things are breaking down. Here are the most common culprits:

- Network Security: Strict network security settings can block AI tools from accessing the data or services they need. Double-check your firewall rules, proxy settings, and access permissions to ensure your AI tools can communicate freely with other systems. Sometimes, a simple port or protocol block is all it takes to derail an integration.

- AI Model Selection: Not every AI model is suited for every integration. Using the wrong model can result in errors, unexpected outputs, or even total failure of the integration process. Make sure you’re deploying the right AI model for your specific use case, and that it’s compatible with the systems you’re connecting.

- Manual Effort and Human Error: Setting up AI integrations often requires manual configuration and testing. This is where small mistakes—like a misconfigured endpoint or a missed API key—can cause big headaches. Document your integration process, follow best practices, and double-check each step to minimize errors.

By systematically reviewing these areas, you can quickly identify where your AI integration is getting stuck and focus your troubleshooting efforts where they matter most.
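Two of these failure points, a missed API key and a blocked port, can be checked before any AI call is made. This sketch uses hypothetical config keys (`endpoint`, `api_key`) and tests only a direct TCP connection, so in environments that require an outbound proxy a failed check may be expected rather than a fault.

```python
import socket
from urllib.parse import urlparse

# Hypothetical config keys; rename them to match your integration.
REQUIRED_KEYS = ("endpoint", "api_key")


def missing_settings(config: dict[str, str]) -> list[str]:
    """Return required keys that are absent or blank, such as a forgotten API key."""
    return [key for key in REQUIRED_KEYS if not str(config.get(key) or "").strip()]


def can_reach(endpoint: str, timeout: float = 3.0) -> bool:
    """Attempt a direct TCP connection to the endpoint's host and port.

    False points at DNS, firewall, or port rules rather than at the AI model itself.
    """
    parsed = urlparse(endpoint)
    if not parsed.hostname:
        return False
    try:
        port = parsed.port or (443 if parsed.scheme == "https" else 80)
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except (OSError, ValueError):  # ValueError: malformed port in the URL
        return False


config = {"endpoint": "https://api.example.com/v1/predict", "api_key": "  "}
print(missing_settings(config))  # → ['api_key']
```

Running `missing_settings` first and `can_reach` second separates configuration mistakes from network blocks, so you know which team to talk to.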

Common Pitfalls in Connecting AI with Other Systems

Even with the best tools, connecting AI with other systems can introduce unique challenges. Here’s what to watch for:

- Error Messages: AI tools sometimes throw cryptic or generic error messages that don’t point directly to the problem. Learn how to interpret these messages, and use debugging tools to trace the issue back to its source. Don’t just Google the error—dig into logs and documentation for deeper insights.

- Development Environment Mismatches: If your development environment doesn’t meet the requirements of your AI tool, integration can fail before it even starts. Always verify that your environment meets the specs for both the AI tool and the systems you’re connecting. This includes checking software versions, dependencies, and hardware compatibility.

- Recurring Issues (The “Same Problem” Trap): If you keep running into the same problem, it’s a sign of a deeper integration issue—maybe a misaligned API, a persistent network block, or a configuration mismatch. Don’t just patch over the symptoms; take the time to identify and resolve the root cause so you don’t waste time fixing the same problem over and over.

By staying alert to these pitfalls, you’ll be better equipped to troubleshoot integration issues and keep your AI tools running smoothly across all your systems.
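For development environment mismatches, a short script can compare installed versions against the minimums your AI tool documents. The numbers in `REQUIREMENTS` below are placeholders, not real vendor requirements, and the version parsing is deliberately simple; the `packaging` library's `Version` class handles the full PEP 440 format if you need pre-releases compared correctly.

```python
import sys
from importlib.metadata import PackageNotFoundError, version

# Placeholder minimums; copy the real numbers from your AI tool's install docs.
REQUIREMENTS = {"python": (3, 9), "numpy": (1, 24), "requests": (2, 28)}


def as_tuple(ver: str) -> tuple[int, ...]:
    """'1.26.4' -> (1, 26, 4); stops at the first non-numeric part such as '0rc1'."""
    parts = []
    for piece in ver.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def dotted(ver: tuple[int, ...]) -> str:
    return ".".join(map(str, ver))


def environment_mismatches(reqs: dict[str, tuple[int, ...]]) -> list[str]:
    """List every requirement that is missing or older than the stated minimum."""
    problems = []
    for name, minimum in reqs.items():
        if name == "python":
            installed = tuple(sys.version_info[:3])
        else:
            try:
                installed = as_tuple(version(name))
            except PackageNotFoundError:
                problems.append(f"{name}: not installed (need >= {dotted(minimum)})")
                continue
        if installed < minimum:
            problems.append(f"{name}: {dotted(installed)} is older than {dotted(minimum)}")
    return problems


for problem in environment_mismatches(REQUIREMENTS):
    print(problem)
```

Committing a check like this next to the integration code makes "works on my machine" failures show up at startup instead of deep inside a request.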

Platform-Specific Troubleshooting: Using Azure Portal

When your AI tools are deployed on Microsoft Azure, the Azure Portal becomes your go-to dashboard for diagnostics and troubleshooting. With its centralized interface, you can quickly access, monitor, and resolve issues across all your AI services—no matter how complex your environment.

Navigating the Azure Portal for AI Diagnostics

Here’s how to leverage the Azure Portal to keep your AI integrations running smoothly:

Access the Azure Portal: Log in to the Azure Portal and head to the navigation pane. This is your command center for all Azure-based AI services.

Select Your AI Service: In the navigation pane, locate and select the specific AI service you’re troubleshooting, whether it’s Azure Machine Learning, Azure AI services (formerly Azure Cognitive Services), or another AI-powered tool.

View Service Logs: Dive into the service logs to identify any error messages, failed requests, or unusual activity. These logs are invaluable for pinpointing the root cause of issues and understanding the context of failures.

Check the Resource Group: Make sure all required resources—compute, storage, networking—are present and properly configured within the relevant resource group. Missing or misconfigured resources can cause AI services to malfunction.

Test the AI Service: Where the service provides them, use its built-in testing features to run sample requests and verify that it responds as expected. This confirms whether the issue is resolved or whether further troubleshooting is needed.

By following these steps, you can efficiently identify and resolve issues using the Azure Portal, ensuring your AI tools and integrations remain reliable and high-performing. The Azure Portal’s easy access to logs, resource management, and testing tools makes it an essential part of your AI troubleshooting toolkit.
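Outside the portal, the same "send a sample request" test can be scripted. The sketch below POSTs a request with Python's standard library and maps the HTTP status to a first place to look. The URL, headers, and body are left to you because they differ between Azure services, and the status-to-cause hints are general HTTP rules of thumb rather than Azure's documented error reference.

```python
import urllib.error
import urllib.request

# Rules of thumb: which area to check first for a given HTTP status.
HINTS = {
    400: "request format: compare the payload with the service's documented schema",
    401: "credentials: key missing, wrong, or rotated",
    403: "permissions or network rules: role assignments, firewall, private endpoints",
    404: "endpoint URL, deployment or model name, or API version",
    429: "quota or rate limit: back off and review usage",
}


def hint_for(status: int) -> str:
    """Map an HTTP status code to a first place to look."""
    if 200 <= status < 300:
        return "ok"
    if status >= 500:
        return "service side: check service health and logs, then retry"
    return HINTS.get(status, "unexpected status: read the response body and the service logs")


def probe(url: str, headers: dict[str, str], body: bytes, timeout: float = 10.0) -> tuple[int, str]:
    """POST one sample request; returns (status, hint), with status 0 when no HTTP response arrived."""
    request = urllib.request.Request(url, data=body, headers=headers, method="POST")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a status code
        status = err.code
    except OSError:  # DNS failure, refused connection, or timeout
        return 0, "no response: check DNS, firewall, proxy, or host name"
    return status, hint_for(status)
```

Call `probe` with your service's endpoint, its authentication header, and a minimal request body copied from the service documentation, then compare the hint with what the service logs show.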