Claude API & Alexa: How to Create Unbeatable Voice Experiences

Meta Description: Learn how to combine the Claude API with Amazon Alexa to create unbeatable voice experiences. This guide covers the steps and tips for integrating Anthropic’s Claude AI with Amazon Alexa to build powerful, user-friendly voice apps that delight users.

Outline

- Introduction to Voice AI and Article Goals

- What is the Claude API? (Anthropic’s AI Platform)

- What is Amazon Alexa and the Alexa Skills Kit?

- Why Combine Claude API & Alexa? (Synergy and Benefits)

- Key Features of Claude API for Voice Apps

- Core Aspects of Alexa and Voice Platforms

- Setting Up Development Accounts (Anthropic & Amazon)

- Creating Your First Alexa Skill

- Integrating Claude API into an Alexa Skill (Technical Steps)

- Designing Natural Voice Interactions (Best Practices)

- Multi-Turn Conversations and Context Retention

- Use Cases & Examples (e.g., Customer Support, Education)

- Testing, Analytics, and Optimization of Skills

- Privacy, Security, and Trust (E-E-A-T Principles)

- Performance Limits, Cost, and Reliability Considerations

- Voice Development Tools & Frameworks (Voiceflow, AWS, etc.)

- The Future of Voice AI: Alexa+, Generative AI Trends

- Frequently Asked Questions (FAQs)

- Conclusion

Introduction to Voice AI and Article Goals

Voice technology is rapidly transforming how we interact with devices. Voice assistants such as Amazon Alexa, Siri, and Google Assistant are now part of everyday life, and AI platforms like Anthropic’s Claude are making conversations with machines more natural than ever. In this guide, we explore how developers can harness Anthropic’s Claude API with Amazon Alexa to build powerful voice applications. We cover the what, why, and how of voice AI integration, with best practices, real examples, and tips for success. Imagine asking your smart speaker a question and getting a helpful, human-like reply: that is the promise of combining the Claude API with Alexa.

Smart speakers like the Amazon Echo show how voice AI has entered daily life: users talk to Alexa to play music, control lights, and more. By powering an Alexa skill with Claude’s API, developers can make those voice interactions smarter and more natural. For example, instead of a one-line answer, the skill can explain a task step by step or draw on earlier requests in the conversation to give personalized replies. In short, this article gives you a roadmap for using the Claude API and Alexa to create unbeatable voice experiences in your own projects.

What is the Claude API? (Anthropic’s AI Platform)

Anthropic’s Claude API gives developers programmatic access to Claude, Anthropic’s family of large language models. Claude is designed to be “helpful, honest, and harmless,” and it excels at language tasks. Through the API, your code sends user messages and receives rich, text-based responses. Claude is trained on vast amounts of text and can answer questions, summarize content, write creatively, and even write code. In practical terms, Claude is a very capable AI assistant similar to ChatGPT; one Alexa skill description even calls it “comparable to the more familiar ChatGPT.” Claude is also trained with an approach Anthropic calls Constitutional AI, which uses a written set of principles to steer its outputs toward responses that are both safe and useful.

Using Claude in a project means your app can understand context and handle complex queries. For example, you could prompt Claude to play the role of a helpful tutor, a travel guide, or a recipe expert. The API itself is stateless, but when your code sends the earlier turns of a conversation along with each new message, Claude uses them as context and can follow up on previous questions. Models like Claude can power voice assistants, customer engagement, and decision-making automation. In short, the Claude API provides a powerful language engine that you can plug into your applications, including voice assistants. Its quality and safety focus make it a strong choice for “unbeatable” voice experiences. Claude also offers a large context window (200K tokens for the Claude 3 model family), which leaves room for detailed prompts and long conversation histories.

What is Amazon Alexa and the Alexa Skills Kit?

Amazon Alexa is a popular voice assistant that runs on devices like the Echo smart speaker, Fire TV, and more. Think of Alexa as a platform that enables voice conversations for various tasks: checking news, playing music, controlling smart home devices, and other voice-driven services. Alexa users can add skills, which are like voice-enabled apps. In the Alexa Skills Store, organizations and individuals publish skills to reach “hundreds of millions of Alexa devices” worldwide.

The Alexa Skills Kit (ASK) provides tools and APIs to build these skills. According to Amazon, “Voice is the most natural user interface. Enable customers to use their voices to easily access your content, devices, and services—wherever they are and whatever they’re doing.” In practice, this means you design a voice interaction model (intents, utterances, slots) and link it to a backend service that handles the logic. Alexa handles the wake word and speech-to-text; your skill does the rest. Amazon’s own next-generation assistant, Alexa+, is powered in part by Anthropic’s Claude models, a significant shift in Amazon’s strategy toward generative AI. The new Alexa is also expected to reach more than 500 million devices worldwide, significantly expanding its potential user base.

Skills can cover many categories: games, music, food ordering, productivity, and more. For example, a custom skill could let users order pizza by saying “Alexa, ask PizzaPal to order my usual,” or check flight status by saying “Alexa, ask TravelGuru for my flight info.” The possibilities are broad. However, traditionally, Alexa’s built-in responses and simple intent handlers could feel limited. That’s where adding a powerful LLM like Claude can take the experience to the next level.

Why Combine Claude API & Alexa? (Synergy and Benefits)

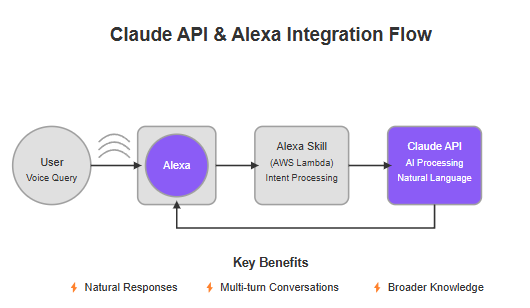

Combining Claude’s AI capabilities with Alexa’s voice platform unlocks unbeatable experiences. Alexa provides the voice interface and distribution (hardware, devices, millions of users), while Claude supplies natural language understanding and generation. Together, they let you build conversational agents that are more helpful and engaging than either alone. In particular, Claude adds capabilities that a traditional intent-based skill lacks:

- Smarter, more natural responses: By default, Alexa’s responses might be scripted or brief. Claude can generate detailed, nuanced replies in real time. For example, a voice skill could use Claude to explain complex topics simply or to keep a fun, flowing chat.

- Multi-turn conversations: Alexa retains limited context by default. If your skill sends the earlier turns along with each request, Claude can take the whole conversation into account, so users can ask follow-ups naturally. For instance, you could first ask for dinner ideas, then ask “What about a vegetarian option?” and Claude will adapt its suggestion.

- Broader knowledge base: Claude has been trained on extensive data and can draw on knowledge well beyond Alexa’s built-in responses (its knowledge comes from training data up to a cutoff date, unless you supply fresh information in the prompt). It can answer questions, summarize articles, or solve problems that Alexa alone might not handle.

- Customized persona and branding: With Claude, you can give your voice skill a unique “personality” or domain expertise. For example, set a system prompt so Claude speaks like an enthusiastic teacher or a friendly tour guide. This can make interactions more engaging.

These benefits translate to better user experiences and higher satisfaction. Customers get relevant, helpful answers rather than dead ends. For example, the “Claude Assistant” Alexa skill lists features like multi-turn chat and “Claude AI (3.0 Haiku)” that “answers all of your questions,” from cooking advice to creative brainstorming. In one demo, a user asked Claude how to plan a healthy dinner; Claude suggested a balanced meal (“grilled salmon with roasted vegetables and quinoa”) and then gave a recipe. That kind of fluid, intelligent conversation is exactly what you want for unbeatable voice experiences.

Additionally, on the business side, integrating an LLM can differentiate your skill in a crowded market. Amazon itself is moving in this direction: its new Alexa+ (announced in February 2025) uses large language models from Anthropic and Amazon to deliver a more conversational assistant. In short, combining the Claude API with Alexa puts you at the leading edge of this shift. Users get a richer experience, and you stand out as a developer with expertise in the latest AI.

Key Features of Claude API for Voice Apps

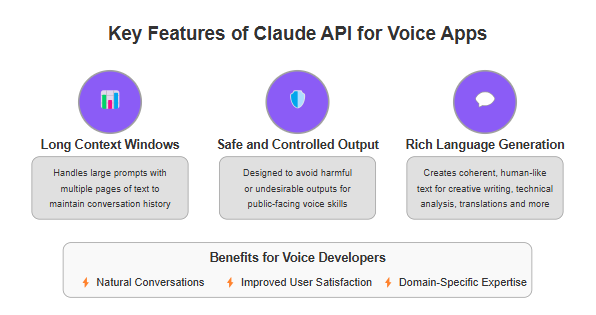

When using the Claude API in voice apps, some features stand out:

- Multimodal Understanding (Text & Images): Claude 3.7 Sonnet accepts both text and images as input. In an Alexa skill this matters less, because user input arrives as speech that Alexa converts to text; image analysis is only relevant if your backend obtains images from another source.

- Long Context Windows: Claude can handle large prompts (multiple pages of text). This enables it to remember lengthy conversation history and complex instructions, useful for maintaining context in multi-turn voice dialogs.

- Safe and Controlled Output: Claude is designed to be helpful and safe. It aims to avoid harmful or undesirable outputs, which is important for public-facing voice skills.

- Few-Shot Learning: You can give Claude a system prompt or examples to steer its behavior. For example, instruct Claude to respond as an empathetic customer service agent or provide clear step-by-step answers.

- Rich Language Generation: Claude excels at crafting coherent, human-like text. It can do creative writing (stories, recipes, jokes), technical analysis, translations, and more. This richness makes voice responses more engaging.

Because of these features, the Claude API turns your Alexa skill into a capable and intelligent assistant. Users won’t notice a script or canned answer; they’ll feel like they’re talking to a knowledgeable expert or friendly guide. For instance, in the Amazon Skills Store, the “Claude Assistant” skill notes Claude can assist with “analysis and problem-solving” and explain complex topics simply. With Claude behind the scenes, your Alexa skill can handle requests ranging from “Explain how photosynthesis works” to “Brainstorm gift ideas,” all by voice.

Core Aspects of Alexa and Voice Platforms

Understanding Alexa’s platform is essential. Key points include:

- Skill Models (Intents & Utterances): You define an interaction model with intents (user goals) and sample utterances (phrases). For example, a “GetWeather” intent might trigger on “What’s the weather today?” Alexa’s NLU maps spoken input to intents.

- Invocation and Dialog: Users invoke skills by name (e.g., “Alexa, open MySkill”). Once the skill is open, the skill’s code (often on AWS Lambda) handles the rest. If integrated with Claude, the skill sends user utterances to Claude API and returns Claude’s text as Alexa’s spoken response.

- Devices and Modes: Alexa runs on devices with or without screens. On Echo Show devices (screens), you can display text or images. But for basic skills, you treat output as speech.

- Built-in Intents: Alexa provides built-in intents (like Stop, Help, Cancel) to handle common situations. Your skill should use them to keep the flow smooth; voice UX best practice is to handle errors and help requests gracefully.

- Multi-modal Features: If using devices with displays, you can enrich Claude’s answers with visuals. For example, if Claude mentions “sunset over the mountains,” you could show an image on screen. (This combines Claude’s output with Alexa’s display templates.)

Amazon Bedrock, AWS’s managed service for foundation models (including Anthropic’s Claude models), can also be used to build conversational agents that work alongside voice interfaces like Alexa. Bedrock gives developers API access to pre-trained models for natural language understanding, which they can use to create responsive AI assistants that process voice commands and maintain context across multi-turn conversations.

One advantage of Alexa is reach. By building a skill, you publish it to the Alexa Skills Store, potentially reaching millions of users. You’re also leveraging Alexa’s robust infrastructure: speech recognition, natural language understanding, and global device availability are all handled by Amazon. Your focus is on the conversational logic (now powered by Claude) and voice design.

Voice UX Tip: Voice design is unlike web or app design. You must “write for the ear, not the eye.” Phrases should be short and conversational. Amazon advises that prompts and answers should flow naturally, be concise, and fit what users expect to hear. For example, instead of a long list, Alexa might say “Here are today’s top news headlines. First: [headline]. Next: [headline]…” Also, plan for mishearing: if Alexa misunderstands, have a fallback such as “I’m sorry, can you repeat that?” or offer choices.

Setting Up Development Accounts (Anthropic & Amazon)

Before building anything, set up your accounts:

- Anthropic API Key: Visit Anthropic’s developer site and sign up for Claude API access. You may need to request access or select a plan. After approval, you’ll get an API key. Keep this secret (don’t embed it in client code).

- Amazon Developer Account: Go to the Alexa Developer Console and register for free. This lets you create skills. You will also need an AWS account (you can use a free tier) if you host your skill’s logic on AWS Lambda.

- AWS Lambda & Permissions (optional): Many Alexa skills use AWS Lambda as the backend. In the AWS Console, create a new Lambda function (Node.js or Python are common) for handling Alexa requests. In its configuration, store your Claude API key (typically in an environment variable) and add the logic that calls the Claude API. A Lambda function that is not attached to a VPC can reach the internet by default, so it can call the Claude API without extra networking setup. Also add the Alexa Skills Kit as a trigger so Alexa is allowed to invoke the function.

- Environment Setup: Decide on your development setup. You can write code in the Alexa developer console (for Alexa-hosted skills) or develop locally with the Alexa Skills Kit SDK and then upload. For a project using Claude, you will write the request/response logic so that your chat intent’s handler calls Claude’s API.

Once accounts are ready, you can start on the skill itself.

Creating Your First Alexa Skill

Now, build an Alexa skill:

New Skill in Alexa Console: In the Alexa Developer Console, click Create Skill. Give it a name (e.g., “ClaudeChat” or anything you like). Choose the default language and a custom model. Choose the Provision your own backend option (to link to AWS Lambda).

Invocation Name: Specify the skill’s invocation name (how users will launch it, e.g. “claude chat” or “smart assistant”). This name should be easy to say.

Intents and Utterances: Define at least one intent like ChatIntent that will handle general queries. Under sample utterances for ChatIntent, put a placeholder like {phrase}, so the user’s entire query is captured. Also include built-in intents (AMAZON.HelpIntent, AMAZON.CancelIntent, etc.).

Slot Types: Create a custom slot type if needed (e.g., a slot for topics), but for a general chat skill, you might just use a single slot to capture everything the user says. Alternatively, Alexa’s built-in AMAZON.SearchQuery slot type is designed to capture less predictable, free-form phrases.

Save and Build: Save the model and let Alexa build it. The console shows status. Once built, Alexa will know your invocation and intents.
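As a rough illustration of the steps above, the sketch below builds a minimal interaction model as a JavaScript object and prints it as JSON that could be pasted into the console’s JSON Editor. The invocation name, the ChatIntent name, the phrase slot, and the sample carrier phrases are hypothetical choices. It assumes the built-in AMAZON.SearchQuery slot type for free-form input, whose sample utterances need some words around the slot, so check Amazon’s interaction-model documentation before relying on it.

```javascript
// Hypothetical interaction model for a general-purpose chat skill.
// Print it and paste the JSON into the Alexa console's JSON Editor.
const interactionModel = {
  interactionModel: {
    languageModel: {
      invocationName: "claude chat", // what users say after "Alexa, open ..."
      intents: [
        {
          name: "ChatIntent", // catch-all intent whose text is forwarded to Claude
          slots: [{ name: "phrase", type: "AMAZON.SearchQuery" }],
          // AMAZON.SearchQuery samples need carrier words around the slot
          samples: [
            "tell me about {phrase}",
            "explain {phrase}",
            "what is {phrase}",
            "how do i {phrase}",
          ],
        },
        // Built-in intents come with Amazon's own utterances; no samples needed
        { name: "AMAZON.HelpIntent", samples: [] },
        { name: "AMAZON.CancelIntent", samples: [] },
        { name: "AMAZON.StopIntent", samples: [] },
        { name: "AMAZON.FallbackIntent", samples: [] },
      ],
      types: [], // no custom slot types in this sketch
    },
  },
};

console.log(JSON.stringify(interactionModel, null, 2));
```

Carrier phrases limit what the slot captures, so add samples that match how your users actually phrase their questions.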

At this point, you’ve defined what the skill can do. Next, you connect it to your code that does the how (using Claude).

Integrating Claude API into an Alexa Skill

The core of integration is connecting Alexa’s voice requests to the Claude API and returning the response. A high-level flow:

- Receive Alexa Request: When a user says something, Alexa processes it, matches an intent (e.g., ChatIntent), and sends a JSON request to your service (e.g., AWS Lambda function). This request includes the spoken text in request.intent.slots.phrase.value (if you used a slot for user input).

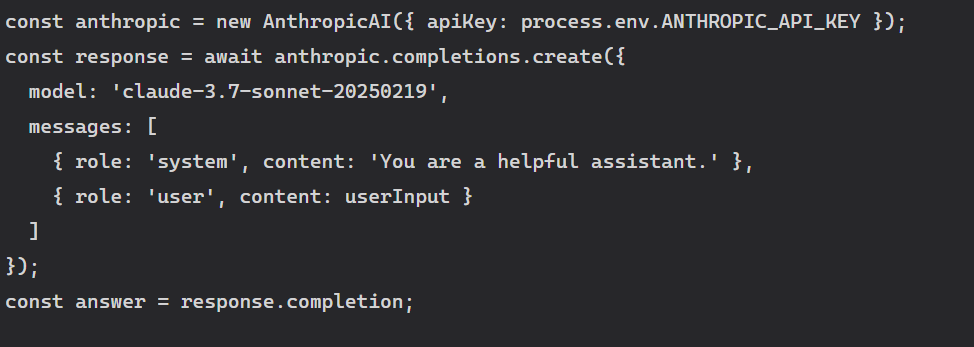

- Prepare Claude Prompt: In your code, extract the user’s utterance and build a prompt for Claude. It often helps to add a system prompt to guide the model, for example: “You are a helpful voice assistant. Answer briefly but informatively.” Then add the user message. (If you prefer to stay inside AWS, Amazon Bedrock also offers Claude and other foundation models through its own API.)

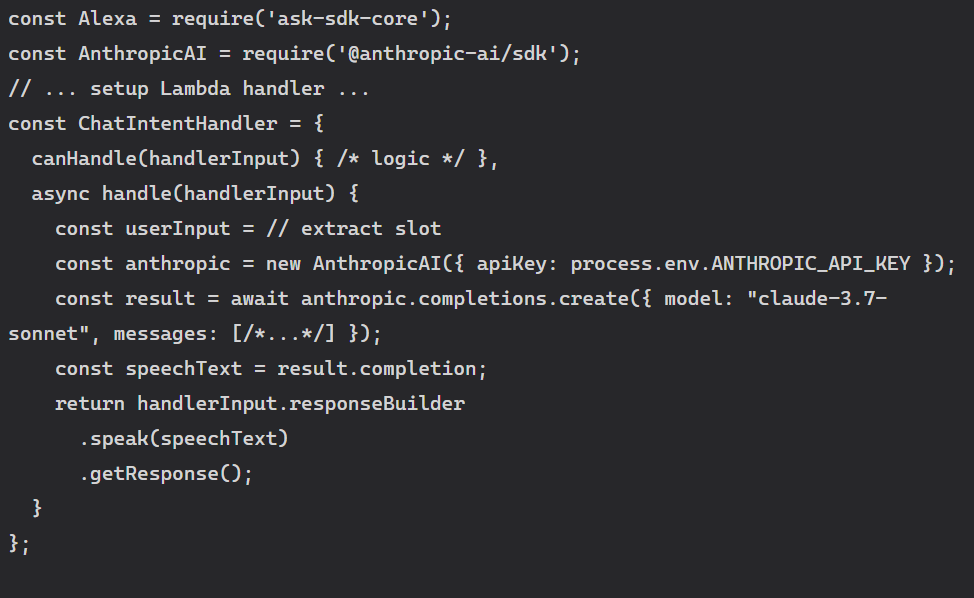

- Call Claude API: Use Anthropic’s SDK or a simple HTTP POST to send the messages to Claude’s Messages API endpoint. Include your API key in the x-api-key header, along with the required anthropic-version header. For example, a Node.js snippet might be:

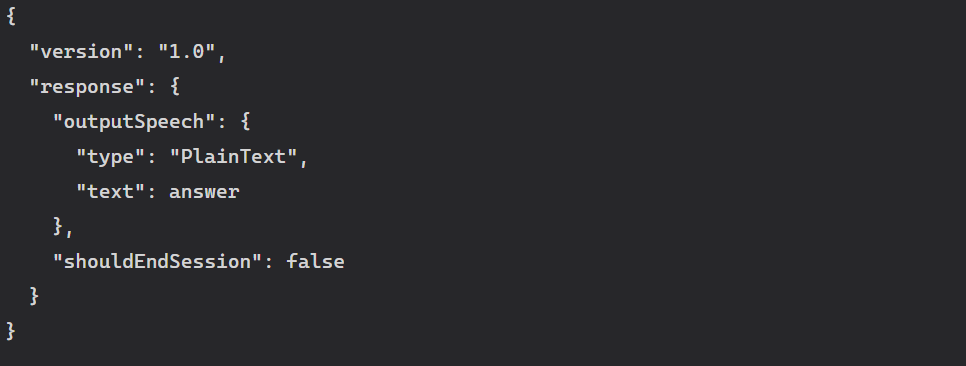

- Return Alexa Response: Take Claude’s answer text and package it into Alexa’s response format. Typically, you send a JSON response whose outputSpeech contains an SSML string, with Claude’s answer wrapped in <speak> tags. Alexa then speaks the text aloud.

For a real implementation, check Amazon’s Alexa Skills Kit SDK documentation or use its Node.js or Python SDK to simplify this; the SDK handles the boilerplate JSON for you. The key takeaway: your skill code acts as a middleman, sending user requests to Claude and sending Claude’s reply back to the user.
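The middleman pattern can be sketched without any SDKs, using only Node.js 18+ for the global fetch. This is a minimal, hypothetical handler, not Amazon’s or Anthropic’s official sample. It assumes a ChatIntent with a phrase slot, an ANTHROPIC_API_KEY environment variable, and a placeholder model ID that you should replace with a current one. It calls the Messages API, joins the returned text blocks, escapes characters that would break SSML, and falls back to a spoken apology if the call fails.

```javascript
// Minimal Alexa -> Claude -> Alexa middleman (sketch; no SDKs, Node.js 18+).
const CLAUDE_URL = "https://api.anthropic.com/v1/messages";
const MODEL = "claude-3-haiku-20240307"; // placeholder: use a current model ID
const SYSTEM_PROMPT =
  "You are a helpful voice assistant. Answer briefly but informatively, in plain spoken sentences.";

// Escape characters that would otherwise break the SSML markup.
function escapeSsml(text) {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Wrap text in Alexa's response envelope; keep the session open unless told otherwise.
function toAlexaResponse(text, endSession = false) {
  const response = {
    outputSpeech: { type: "SSML", ssml: `<speak>${escapeSsml(text)}</speak>` },
    shouldEndSession: endSession,
  };
  if (!endSession) {
    response.reprompt = { outputSpeech: { type: "PlainText", text: "Anything else?" } };
  }
  return { version: "1.0", response };
}

// Send one user message to the Messages API and return the reply text.
async function callClaude(userText, { apiKey, fetchImpl = globalThis.fetch }) {
  const res = await fetchImpl(CLAUDE_URL, {
    method: "POST",
    headers: {
      "x-api-key": apiKey,
      "anthropic-version": "2023-06-01",
      "content-type": "application/json",
    },
    body: JSON.stringify({
      model: MODEL,
      max_tokens: 300, // keep spoken answers short
      system: SYSTEM_PROMPT,
      messages: [{ role: "user", content: userText }],
    }),
  });
  if (!res.ok) throw new Error(`Claude API returned HTTP ${res.status}`);
  const data = await res.json();
  // The reply is a list of content blocks; keep only the text ones.
  return data.content
    .filter((block) => block.type === "text")
    .map((block) => block.text)
    .join(" ")
    .trim();
}

async function handleAlexaRequest(event, options) {
  const { request } = event;
  if (request.type === "SessionEndedRequest") {
    return { version: "1.0", response: {} }; // no speech is allowed in reply to this request
  }
  if (request.type === "LaunchRequest") {
    return toAlexaResponse("Hi! What would you like to ask?");
  }
  const intent = request.type === "IntentRequest" ? request.intent.name : "";
  if (intent === "AMAZON.HelpIntent") {
    return toAlexaResponse("Ask me anything, for example: explain photosynthesis.");
  }
  if (intent === "ChatIntent") {
    const phrase = request.intent.slots?.phrase?.value;
    if (!phrase) return toAlexaResponse("Sorry, I didn't catch that. Could you say it again?");
    try {
      const answer = await callClaude(phrase, options);
      return toAlexaResponse(answer || "Sorry, I don't have an answer for that.");
    } catch (err) {
      console.error(err); // goes to CloudWatch logs, not to the user
      return toAlexaResponse("Sorry, I'm having trouble reaching my brain right now.");
    }
  }
  if (intent === "AMAZON.FallbackIntent") {
    return toAlexaResponse("Sorry, I didn't catch that. Could you say it again?");
  }
  // Stop, Cancel, and anything unexpected: say goodbye and end the session.
  return toAlexaResponse("Goodbye!", true);
}

// Lambda entry point; exported only when running as a CommonJS module.
const handler = (event) =>
  handleAlexaRequest(event, { apiKey: process.env.ANTHROPIC_API_KEY });
if (typeof module !== "undefined") module.exports = { handler };
```

Passing fetchImpl as an option keeps the handler testable offline with a stub; in production the default global fetch is used.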

Example: Several developers have done this. A GitHub tutorial “Building a Claude Skill for Alexa” guides through creating a skill that calls Claude’s API. The result is a voice app that lets you “ask it questions or have conversations” much like ChatGPT. Similarly, Twilio’s blog shows how to create a Claude-powered voice assistant via phone calls. The pattern is the same for Alexa: hook up the voice input to Claude’s large language model.

Designing Natural Voice Interactions (Best Practices)

A key to unbeatable voice experiences is how you design the conversation. Here are best practices:

- Write for the Ear: Keep prompts and responses concise. Long paragraphs are hard to follow. Use a friendly tone and speak in normal sentences. For example, say “Alright, here’s how to clean a wine stain. First, blot the stain…” instead of a formal written tone. Designlab’s guidelines remind us to “write for the ear, not the eye”.

- Understand User Context: Anticipate where and why the user might be speaking. For instance, a user might be cooking, driving, or multitasking. Amazon’s Alexa is built to understand context: e.g., if you ask for a timer in the kitchen vs. music in the living room, Alexa gives context-relevant answers. Try to make your skill similarly aware. If your skill is a cooking helper, assume the user’s hands might be busy.

- Simplify Interactions: Reduce user effort. Users shouldn’t have to memorize commands, so support natural language as much as possible. A best practice is to accept loosely phrased commands as long as their meaning is clear. For example, Domino’s voice ordering lets users say “Order my usual” or “Ask Domino’s to deliver a pepperoni pizza.” Your skill could similarly accept variations of questions instead of a rigid format.

- Guide the Conversation: Use friendly prompts and confirmations. If the user says something unclear, ask a clarifying question (“I didn’t catch that. Did you mean X or Y?”). Always handle errors gracefully: if Claude’s response is empty or unclear, have a fallback response. For instance, “I’m sorry, could you repeat that?” or a brief help message.

- Maintain Consistency: Give Claude a clear “persona” or style. If your skill is casual, instruct Claude to keep replies casual. If it’s professional, use a formal tone. This consistency makes the conversation feel natural.

- Test Prompts: Try out different phrasings with Claude to see how it responds. Because Claude’s answers can be verbose, you may want to cap their length with the max_tokens parameter; since that limit simply cuts the text off, also ask for brevity in the prompt, e.g. “Answer succinctly, as if speaking to a user.” Experiment in the Workbench in Anthropic’s Console or directly via the API.

By following these guidelines, your skill will be more engaging and easier to use. Remember, voice design is different – users expect quick answers and may not like hearing long-winded responses. Encourage Claude to give clear, step-by-step replies rather than dumping huge paragraphs. For example, when asked “How do I remove a wine stain?”, Claude might reply: “Red wine stains can be tricky, but here’s a method: 1. Blot the stain with a clean cloth; 2. Sprinkle salt on the stain; 3. Pour boiling water over it from a height; 4. If any stain remains, apply a mix of dish soap and hydrogen peroxide and let it sit a few minutes.” This structured answer is both helpful and easy to follow by ear.
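To make a numbered answer like the wine-stain example easier to follow by ear, a skill could split it into steps and add short SSML pauses between them. The helper below is a hypothetical sketch. It assumes Claude writes steps inline as “1. …; 2. …”, and it uses a 400 ms pause by default; you would tune both for your own prompts.

```javascript
// Hypothetical helper: speak an inline numbered answer ("1. ...; 2. ...")
// as separate steps with short SSML pauses between them.
function escapeSsml(text) {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

function stepsToSsml(answer, pause = "400ms") {
  // Split before each "N. " marker; the first piece is any introduction.
  const [intro, ...rawSteps] = answer.trim().split(/\s*\b\d+\.\s+/);
  const steps = rawSteps
    .map((step) => step.trim().replace(/[;.]+$/, "")) // drop trailing ; or .
    .filter((step) => step.length > 0);
  if (steps.length === 0) {
    return `<speak>${escapeSsml(answer.trim())}</speak>`; // nothing to split
  }
  const spoken = steps.map((step, i) => `Step ${i + 1}: ${escapeSsml(step)}.`);
  const parts = intro.trim() ? [escapeSsml(intro.trim()), ...spoken] : spoken;
  return `<speak>${parts.join(` <break time="${pause}"/> `)}</speak>`;
}

console.log(stepsToSsml("Here's a method: 1. Blot the stain; 2. Sprinkle salt on it."));
```

The regular expression is deliberately simple; if your prompts produce other list styles, adjust the split pattern rather than the spoken template.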

Multi-Turn Conversations and Context Retention

One big advantage of the Claude API is handling multi-turn chats. By default, Alexa can remember session attributes, but its built-in context handling is limited. Claude itself does not store conversations: your skill includes the conversation history in each request, and Claude uses it to give coherent, context-aware replies. Claude has also reportedly lowered customer service resolution times significantly in deployments such as Lyft’s, showing how streamlined multi-turn interactions can improve efficiency in real-world scenarios. Here’s how to use it:

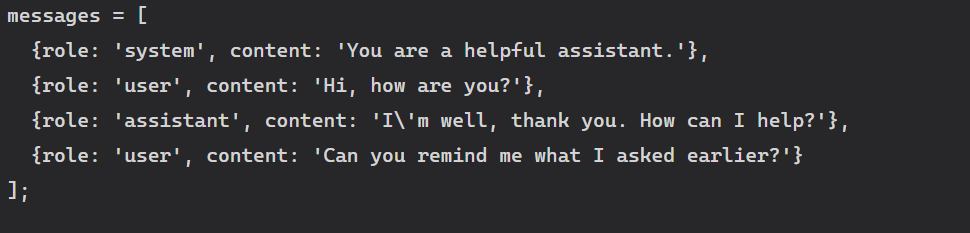

- Pass the Dialogue History: Each time the user says something, append the new message to the previous turns and send the whole list of messages to Claude, so Claude sees the context of the entire conversation.

- Maintain State Carefully: Track context in your code. Session attributes last only for the current Alexa session, so store the conversation log in a database (such as DynamoDB) if it needs to survive across sessions. Send Claude only as much history as it needs, to avoid exceeding token limits.

- Context Window: Claude 3.7 Sonnet has a large context window (200K tokens), so it can follow long conversation threads. Even so, summarize or drop older turns when needed to keep latency and cost down.
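One way to implement the bullets above is to keep the message list in Alexa’s session attributes, which Alexa sends back with the next request in the same session. The sketch below is illustrative only. The history attribute name, the 12-message cap, and the askClaude callback (any function that sends a messages array to the Messages API and returns text) are assumptions. Trimming always restarts the list at a user message, since the Messages API expects the conversation to begin with a user turn.

```javascript
// Sketch: keep the conversation in Alexa session attributes and resend it to
// Claude on every turn. MAX_MESSAGES and the "history" name are assumptions.
const MAX_MESSAGES = 12; // roughly six user/assistant exchanges

// Append the user's message and drop the oldest turns beyond the cap,
// making sure the trimmed list still starts with a user message.
function addUserTurn(history, userText) {
  const next = [...history, { role: "user", content: userText }];
  let start = Math.max(0, next.length - MAX_MESSAGES);
  if (next[start].role !== "user") start += 1;
  return next.slice(start);
}

function addAssistantTurn(history, replyText) {
  return [...history, { role: "assistant", content: replyText }];
}

// askClaude(messages) is a hypothetical function that posts the messages array
// to the Messages API and resolves to the reply text.
async function chatTurn(sessionAttributes, userText, askClaude) {
  const history = addUserTurn(sessionAttributes.history ?? [], userText);
  const reply = await askClaude(history);
  return {
    reply,
    // Return these as sessionAttributes in the Alexa response so they come back next turn.
    sessionAttributes: { ...sessionAttributes, history: addAssistantTurn(history, reply) },
  };
}
```

On the first turn of a session, pass event.session.attributes ?? {} as sessionAttributes, because Alexa may omit the attributes object entirely.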

With context, users can treat your skill like a real chat partner. For instance, a user might say: “What’s a healthy dinner option?” Claude replies with a suggestion. The user then says: “Make it vegetarian.” Because you include the previous suggestion in the prompt, Claude can modify it accordingly. It feels natural and fluid. The Amazon Alexa team highlighted that their Alexa+ will be able to remember user preferences (“dietary preferences or allergies — the more it’s used, the more it learns”). You can mimic that by tracking user details (with consent) and feeding them to Claude to personalize answers.

Example: A use case could be a travel planning skill. The user says “Plan a weekend trip to Paris.” Claude suggests an itinerary. The user then says “Make it kid-friendly.” The skill passes both turns to Claude, and it revises the plan with children’s activities. This seamless context handling is what sets AI voice experiences apart from static Q&A skills.

Use Cases & Examples

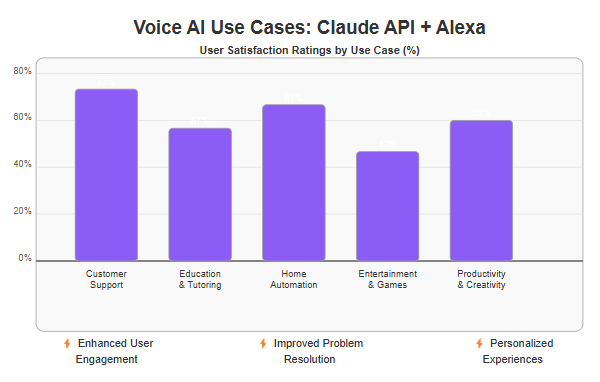

There are many real-world scenarios where Claude API & Alexa shine together. Here are a few:

- Customer Support and FAQ: A company can use an Alexa skill for customer questions. Instead of just scripted answers, Claude can understand varied user queries and give detailed yet concise answers. For example, a tech support skill could have Claude explain how to solve a connectivity issue step-by-step. One demo on an Alexa skill shows Claude advising on household chores like removing a wine stain with numbered steps. This level of detail can reduce frustration and mimic talking to a human agent.

- Education and Tutoring: Voice-enabled tutoring is emerging. Imagine a student asking, “Explain Pythagoras’ theorem.” Claude can deliver a clear explanation and even give an example problem, all via Alexa. Later, the student can ask follow-up questions (“Can you give me a practice problem now?”) and Claude continues the dialogue. This makes learning interactive. Voice apps using ChatGPT for education have shown promise, and Claude’s friendly and safe AI can do the same.

- Home Automation & Personal Assistant: Combine smart home control with intelligence. For example, a user might say, “Alexa, ask HomeCoach how to fix the AC.” Instead of Alexa’s generic responses, Claude could offer a conversational troubleshoot guide. Or “Plan a party playlist,” and Claude could suggest songs based on spoken preferences.

- Entertainment & Games: Develop an interactive story game. Each choice by the user can be handled by Claude, making a dynamic adventure. For example, an Alexa skill “Haunted House” could have Claude describe scenarios and react to user decisions in creative ways. Multi-turn context is crucial here.

- Productivity & Creativity: Chat with your ideas. Say you run a blog and need writing help. You could build an Alexa skill that uses Claude to brainstorm topics or refine an outline by voice. It would be like speaking to your writing assistant. This can bring AI-powered brainstorming into the hands-free world.

AI solutions like Claude and Alexa are impacting various industries by providing tailored services and technologies that enhance customer engagement, decision-making automation, and operational efficiency. These examples show how powerful voice + AI can be. As a concrete illustration, the Claude Assistant Alexa skill (a third-party integration) highlights features in its description: multi-turn conversations, context retention, natural dialogue with flexible phrases, and creative brainstorming. That skill’s user experiences include planning meals and giving cooking instructions naturally, just by voice. You can create similar domain-specific solutions by tailoring Claude’s prompts for your application.

Testing, Analytics, and Optimization

Once your skill is running, test and refine it thoroughly:

- Voice Testing: Use tools like Alexa’s Simulator in the console, or test on a real device. Speak naturally and try synonyms of your sample utterances to see if Alexa still reaches Claude properly. Also test edge cases (“Stop”, “Help”, mispronunciations).

- Review Claude’s Output: Sometimes Claude might give an answer that’s too long or slightly off-topic. You can trim or post-process answers if needed; for instance, limit answers to 300 characters or strip formatting (such as Markdown symbols) that sounds odd when read aloud before returning the text to Alexa. Always ensure the final response is clear and on point.

- Iterate on Prompts: If Claude’s replies are not the tone you want, adjust the system prompt. E.g., add “Speak in a friendly tone and keep it concise.” Experiment until the answers feel right.

- Use Analytics: Amazon provides analytics on skill usage (number of users, popular requests, drop-off rates). Use this data to see what users ask and where they get stuck. For example, if many users say “I don’t know” after a prompt, it might mean the skill’s prompts are unclear. If questions to Claude always end in confusion, refine your handling.

- Gather Feedback: Encourage beta users to give feedback. Maybe they found it slow or sometimes out of scope. Use that to improve the dialogue flows.

- Performance Monitoring: Keep an eye on latency. Claude’s API calls take time, so the user may wait a second or two for a response. That’s usually acceptable for complex answers, but try to minimize unnecessary wait time. You can have Alexa say “Let me think…” or play a sound while waiting.
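Post-processing like the trimming described under “Review Claude’s Output” can be a small pure function. This is a hypothetical sketch. It strips common Markdown marker characters, keeps link text but drops URLs, and shortens long answers to the last complete sentence within a character budget (300 by default, matching the example figure in that bullet rather than any Alexa limit).

```javascript
// Hypothetical clean-up before text goes into SSML: remove Markdown that sounds
// odd when spoken and cap the length at a sentence boundary.
function prepareForSpeech(text, maxChars = 300) {
  const clean = text
    .replace(/`{3}[\s\S]*?`{3}/g, " ") // drop fenced code blocks entirely
    .replace(/\[([^\]]+)\]\([^)]*\)/g, "$1") // keep link text, drop the URL
    .replace(/[*_#`>]+/g, "") // strip Markdown marker characters
    .replace(/\s+/g, " ")
    .trim();
  if (clean.length <= maxChars) return clean;

  const cut = clean.slice(0, maxChars);
  // Prefer ending on a full sentence inside the budget.
  const lastStop = Math.max(cut.lastIndexOf(". "), cut.lastIndexOf("! "), cut.lastIndexOf("? "));
  if (lastStop > 0) return cut.slice(0, lastStop + 1);
  // Otherwise cut at the last whole word and signal the truncation.
  const lastSpace = cut.lastIndexOf(" ");
  return (lastSpace > 0 ? cut.slice(0, lastSpace) : cut) + "...";
}
```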

Optimization is ongoing. The goal is to make the interaction feel smooth. If you set Claude’s max_tokens too high, responses may take longer; too low, and answers get cut off. Find a balance. Where it helps, split work into follow-up requests rather than one huge prompt. Because the API is stateless, each turn must resend whatever history Claude needs, so keep that history short by trimming or summarizing older turns (every token you send is billed). Testing is key: simulate real users and keep improving.
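When max_tokens is too low, the Messages API marks the response with stop_reason set to "max_tokens". A skill can use that flag to notice a cut-off answer instead of speaking half a sentence. The sketch below assumes the parsed JSON body of a Messages API response and simply trims back to the last sentence-ending punctuation. That trimming rule is a simplification (a decimal point can fool it, for instance).

```javascript
// Sketch: if Claude stopped because it hit max_tokens (stop_reason "max_tokens"
// in a Messages API response), trim the dangling partial sentence.
function speakableText(apiResponse) {
  const text = apiResponse.content
    .filter((block) => block.type === "text")
    .map((block) => block.text)
    .join(" ")
    .trim();
  if (apiResponse.stop_reason !== "max_tokens") return text;
  // Cut back to the last sentence-ending punctuation mark, if any.
  const lastEnd = Math.max(text.lastIndexOf("."), text.lastIndexOf("!"), text.lastIndexOf("?"));
  return lastEnd > 0 ? text.slice(0, lastEnd + 1) : text;
}

const truncated = {
  stop_reason: "max_tokens",
  content: [{ type: "text", text: "Preheat the oven. Then slice the vegeta" }],
};
console.log(speakableText(truncated)); // prints: Preheat the oven.
```

If cut-offs happen often, that usually means max_tokens is too tight for the prompt, which is worth fixing at the source.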

Privacy, Security, and Trust (E-E-A-T Principles)

Voice experiences handle user data, so trust is critical. Apply E-E-A-T (Experience, Expertise, Authority, Trustworthiness):

- Transparency: Tell users what data you collect. If your skill stores user preferences or transcripts, mention that in your privacy policy. Amazon requires that published skills include a privacy policy if they collect personal info.

- Data Handling: Alexa stores voice recordings in the cloud by default to improve speech recognition. With Claude integration, you’re also sending text to Anthropic’s servers. Review Anthropic’s current commercial terms and privacy policy to understand how API inputs are retained and whether they may be used for model training, and reflect this in your own privacy policy if needed.

- Security: Protect your API keys. Do not hard-code them; use secure storage (AWS Secrets Manager, Lambda environment variables encrypted with AWS KMS, etc.). Follow Amazon’s security requirements for skills.

- User Control: Respect user privacy. For example, allow users to clear any saved data. Alexa has built-in commands like “Alexa, delete what I just said” for voice logs, and Amazon lets users review and delete their recordings; offer similar controls for any data your skill keeps.

- Trustworthy Answers: Avoid having Claude present critical info as fact if uncertain. If Claude is unsure, you can program your skill to say “I’m not certain” or guide them to official sources. Remember, LLMs can hallucinate. Adding a quick check for “It is not clear” in Claude’s answer or a fallback if the answer is nonsensical can help maintain trust.

- Compliance: If you work in a regulated industry (healthcare, finance), ensure compliance. For example, handling health data requires HIPAA compliance, and Amazon has offered a program for HIPAA-eligible Alexa skills.

- Authority: Cite external information when relevant. One way is to have Claude answer and then optionally provide a source (if it’s confident). For instance, Claude could say “(Source: CDC website)” after health advice. This builds authority. However, be careful: Claude may fabricate sources. Always verify critical info.
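The quick check suggested under “Trustworthy Answers” might look like the following. Treat it as a crude heuristic, not a hallucination detector. The marker phrases, the fallback wording, and the topicSource parameter are illustrative assumptions, and a confident but wrong answer will pass straight through.

```javascript
// Crude heuristic (an assumption, not a hallucination detector): replace empty
// answers and flag visibly uncertain ones before Alexa speaks them.
const UNCERTAIN_MARKERS = ["it is not clear", "i'm not sure", "i am not sure", "i don't know"];

function guardAnswer(answer, topicSource = "an official source") {
  const text = (answer ?? "").trim();
  if (text.length === 0) {
    return { text: "Sorry, I don't have a good answer for that. Could you rephrase it?", flagged: true };
  }
  // Normalize curly apostrophes so "I’m not sure" matches too.
  const lower = text.toLowerCase().replace(/\u2019/g, "'");
  if (UNCERTAIN_MARKERS.some((marker) => lower.includes(marker))) {
    return { text: `I'm not certain about this, so please check ${topicSource}. ${text}`, flagged: true };
  }
  return { text, flagged: false };
}
```

The flagged field gives you something to log and count in analytics, which shows how often users hit the uncertain path.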

Studies show many Alexa skills lack proper privacy notices and transparency, which erodes trust. Don’t fall into that trap. Make it clear that Claude is an AI assistant (as the Alexa skill description does: “This Alexa skill is purely an interface to the Claude AI chatbot developed by Anthropic”). This honesty about who/what is behind the skill increases user confidence.

By demonstrating expertise (well-designed prompts), authority (accurate info, credible sources if possible), and trustworthiness (clear privacy, reliable answers), your skill will follow E-E-A-T principles. Users are more likely to adopt a voice service they trust.

Performance Limits, Cost, and Reliability Considerations

While Claude and Alexa integration is powerful, be mindful of practical limits:

- Latency: A Claude API call often takes about 1-3 seconds for a short answer. Long outputs and larger models take longer, because generation time grows with the number of output tokens. Alexa waits about 8 seconds for a skill’s response before timing out, and that budget also has to cover Lambda cold starts and your own processing. Keep prompts lean and cap answer length (for example with max_tokens) so responses arrive well inside that window.

- Rate Limits and Costs: Anthropic bills API usage per input and output token. It also applies rate limits that depend on your account’s usage tier, so check the current pricing and limits. A busy skill can generate significant token usage. Monitor usage, and consider caching frequent responses or summarizing long dialogues. A smaller Claude model gives faster, cheaper responses when you don’t need the most capable one.

- Fallback Response: Your skill should still say something useful if the Claude API is unreachable, times out, or returns an error. For example: “Sorry, I’m having trouble accessing my brain right now.”

- Voice Mistakes: Speech recognition isn’t perfect, so plan for misheard words. When a request looks garbled or ambiguous, ask “Did you mean…?” or invite the user to repeat it.

- Content Moderation: Although Claude is designed to be safe, keep an eye out. If your skill covers sensitive topics, test thoroughly to ensure no inappropriate responses slip through. You might add your own output filters and spell out content boundaries in Claude’s system prompt.
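The latency and fallback points above can be combined into a single guard around the Claude call. The sketch below is a minimal illustration built on a few assumptions:

- ask_claude is a hypothetical callable, for example a thin wrapper around the Anthropic SDK.
- The 5-second budget is our own choice, picked to leave headroom under Alexa’s roughly 8-second limit. It is not an Amazon figure.

```python
import concurrent.futures
from typing import Callable

# Assumed budget: leaves headroom under Alexa's ~8-second response window.
CLAUDE_TIMEOUT_SECONDS = 5.0
FALLBACK_SPEECH = "Sorry, I'm having trouble accessing my brain right now. Please try again."

# One shared worker pool, reused across invocations in a warm Lambda container.
_executor = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def answer_with_fallback(ask_claude: Callable[[str], str], question: str,
                         timeout: float = CLAUDE_TIMEOUT_SECONDS) -> str:
    """Return Claude's answer, or a spoken fallback on timeout, error, or empty reply."""
    future = _executor.submit(ask_claude, question)
    try:
        answer = future.result(timeout=timeout)
    except concurrent.futures.TimeoutError:
        # Too slow: reply now rather than letting Alexa time out the request.
        return FALLBACK_SPEECH
    except Exception:
        # Network failures, API errors, and so on.
        return FALLBACK_SPEECH
    return answer.strip() or FALLBACK_SPEECH
```

If you call the Anthropic SDK directly, its client also accepts a timeout setting. That setting can replace the worker thread, but the fallback branch is still worth keeping.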

By planning for these, you’ll have a more reliable skill. Remember, users value consistency: a fast, correct answer beats a slow, exhaustive one.
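The caching suggestion under Rate Limits and Costs can start as a small in-memory LRU cache keyed on the normalized question. This is a sketch under stated assumptions:

- The cache lives only as long as a warm Lambda container.
- The normalization rule is our own choice.
- ask_claude is again a hypothetical callable.

Only cache answers that don’t depend on the individual user.

```python
import re
from collections import OrderedDict
from typing import Callable


class AnswerCache:
    """Tiny LRU cache for Claude answers, keyed on a normalized question."""

    def __init__(self, max_entries: int = 256) -> None:
        self.max_entries = max_entries
        self._entries: "OrderedDict[str, str]" = OrderedDict()

    @staticmethod
    def normalize(question: str) -> str:
        # Lowercase, drop punctuation, collapse whitespace,
        # so "What's up?" and "what's up" share one entry.
        cleaned = re.sub(r"[^\w\s]", "", question.lower())
        return " ".join(cleaned.split())

    def get_or_ask(self, question: str, ask_claude: Callable[[str], str]) -> str:
        key = self.normalize(question)
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            return self._entries[key]
        answer = ask_claude(question)  # cache miss: one paid API call
        self._entries[key] = answer
        if len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return answer
```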

Voice Development Tools & Frameworks

Several tools can speed up building Claude-powered Alexa skills:

- Voiceflow: A low-code platform for designing voice apps. Voiceflow supports custom API integrations. (Voiceflow even has tutorials on integrating Claude into a Voiceflow assistant.) You can prototype dialogue flows and test with voice, then export code.

- AWS Lambda + Alexa SDK: Amazon’s official way. Use the Alexa Skills Kit SDK for Node.js or Python to manage requests/responses. It simplifies session handling.

- Local Testing: Tools like Alexa Local let you test Alexa skills on your machine before deployment. AWS’s Lambda container base images also let you run your function locally in Docker.

- Code Samples: Anthropic’s docs and example GitHub projects can guide you. For instance, the Twilio blog’s Node.js code (using Fastify) can be adapted for Alexa. Their example shows creating a conversation with Claude in real-time voice contexts. You might adapt their approach to queuing messages and handling events.

- Logging and Monitoring: Use Amazon CloudWatch Logs to track your skill’s behavior. Log prompts and responses, but never log API keys or other secrets, and avoid storing personal details users mention. These logs help you debug cases where Claude answers incorrectly or the API returns errors.

- Prompt Libraries: Keep a library of effective prompts. For example, a prompt like “When answering the user, first restate their question to confirm, then give a clear answer.” You might have prompts for different intents (one for definitions, one for step-by-step guides, etc.). Testing and reusing good prompts is a mark of expertise.
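A prompt library can be as simple as a dictionary of system prompts keyed by intent. The intent names and prompt wording below are hypothetical examples. The pattern is to keep prompts in one place, so they can be tested and reused, and to append one shared voice-style rule to each.

```python
# Shared rule appended to every prompt: answers will be spoken aloud.
VOICE_RULES = (
    "You are answering through a voice assistant. "
    "Use plain sentences with no markdown, lists, or URLs, and stay under 60 words."
)

# Hypothetical intent names mapped to task-specific instructions.
PROMPT_LIBRARY = {
    "DefinitionIntent": "Give a one-sentence definition, then one everyday example.",
    "HowToIntent": "Give at most three short steps, each as its own sentence.",
    "GeneralQuestionIntent": "First restate the question briefly to confirm it, then answer clearly.",
}


def system_prompt_for(intent_name: str) -> str:
    """Build the system prompt for an intent, falling back to the general prompt."""
    task = PROMPT_LIBRARY.get(intent_name, PROMPT_LIBRARY["GeneralQuestionIntent"])
    return f"{task} {VOICE_RULES}"
```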

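These tools and resources make development faster and more robust, and the two SDKs pair naturally. In Node.js you can use Anthropic’s official npm package (@anthropic-ai/sdk) alongside the ask-sdk. Python has equivalents in the anthropic and ask-sdk packages. In either language, the skill’s intent handler reads the user’s question from a slot, sends it to Claude, and speaks Claude’s reply back through Alexa.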
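Here is a minimal sketch of an Alexa handler calling Claude, written in Python. It works against the raw Alexa request/response JSON and Claude’s HTTP Messages API using only the standard library, so every moving part is visible. The official SDKs wrap the same steps and add retries and helpers. Adjust these assumptions for your own skill:

- A custom intent named AskClaudeIntent with a slot named question, for example of type AMAZON.SearchQuery. Both names are hypothetical and must match your interaction model.
- The API key is in an ANTHROPIC_API_KEY environment variable.
- A current model ID from Anthropic’s docs is in CLAUDE_MODEL.

```python
import json
import os
import urllib.request
from typing import Callable

ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"
# Assumption: CLAUDE_MODEL holds a current model ID copied from Anthropic's docs.
CLAUDE_MODEL = os.environ.get("CLAUDE_MODEL", "")
FALLBACK = "Sorry, I'm having trouble accessing my brain right now."


def call_claude(question: str) -> str:
    """Send one question to Claude's Messages API and return the reply text."""
    body = {
        "model": CLAUDE_MODEL,
        "max_tokens": 300,  # hard cap that keeps spoken answers short
        "system": "You answer through Alexa. Reply in two or three plain sentences.",
        "messages": [{"role": "user", "content": question}],
    }
    req = urllib.request.Request(
        ANTHROPIC_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": os.environ["ANTHROPIC_API_KEY"],
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )
    # Stay well inside Alexa's ~8-second window.
    with urllib.request.urlopen(req, timeout=6) as resp:
        data = json.loads(resp.read())
    # The reply is a list of content blocks; keep only the text ones.
    return " ".join(b["text"] for b in data["content"] if b["type"] == "text")


def speak(text: str, end_session: bool = False) -> dict:
    """Wrap text in a minimal Alexa response envelope."""
    response = {
        "outputSpeech": {"type": "PlainText", "text": text},
        "shouldEndSession": end_session,
    }
    if not end_session:
        # Alexa speaks the reprompt if the user doesn't answer.
        response["reprompt"] = {
            "outputSpeech": {"type": "PlainText", "text": "What else would you like to know?"}
        }
    return {"version": "1.0", "response": response}


def handle_alexa_event(event: dict, ask: Callable[[str], str] = call_claude) -> dict:
    """Route one Alexa request; `ask` is injectable so it can be faked in tests."""
    request = event.get("request", {})
    kind = request.get("type")
    if kind == "LaunchRequest":
        # Be upfront that answers come from an AI model.
        return speak("Hi! I'm an AI assistant powered by Claude. What would you like to know?")
    if kind == "SessionEndedRequest":
        return {"version": "1.0", "response": {}}  # Alexa plays nothing for this request
    intent = request.get("intent", {})
    name = intent.get("name")
    if name == "AskClaudeIntent":  # hypothetical intent from your interaction model
        question = intent.get("slots", {}).get("question", {}).get("value")
        if not question:
            return speak("Sorry, I didn't catch that. What's your question?")
        try:
            answer = ask(question).strip()
        except Exception:  # network, timeout, or API error
            return speak(FALLBACK)
        return speak(answer or FALLBACK)
    if name in ("AMAZON.StopIntent", "AMAZON.CancelIntent"):
        return speak("Goodbye!", end_session=True)
    return speak("Try asking me a question, like: why is the sky blue?")


def lambda_handler(event, context):
    """AWS Lambda entry point set as the skill's endpoint."""
    return handle_alexa_event(event)
```

Injecting ask keeps the routing logic testable without network access. A production skill would also handle AMAZON.HelpIntent and AMAZON.FallbackIntent.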

Keeping a small, working example of this handler-to-Claude round trip in your toolkit is helpful when starting out.

The Future of Voice AI: Alexa+, Generative AI Trends

We are just at the beginning of AI-powered voice. Amazon’s recent announcements highlight this trend:

- Alexa+: In early 2025 Amazon announced and began rolling out “Alexa+”, a generative-AI-infused assistant. It can plan events, check tone, and have a “conversational, humanlike flow”. Amazon confirmed Alexa+ uses large language models from both Amazon and Anthropic with a “model-agnostic” system. This signals that Amazon itself sees the value in Claude-like models for voice.

- Multi-Model Approaches: Expect voice assistants to pick the best AI for the task. Alexa+ will “select the best AI model” for each query. As a developer, you could one day switch between models (Claude, GPT, Llama, etc.) within your skill depending on needs.

- Ambient Voice Computing: Voice AI is spreading everywhere: into cars, appliances, and phones. Skills you build now might run on next-gen devices. Designing with this future in mind (short responses, privacy, on-device hints) will keep your experiences ahead of the curve.

- Increased Personalization: Future voice assistants will use history to adapt. We already mentioned learning preferences. Soon, any skill you make could tap into user profiles (with permission) to tailor responses.

- Competition and Collaboration: Google, Apple, and other companies are also improving their voice AIs. Skills built for Alexa may inspire cross-platform solutions. Understanding Claude and LLMs sets you up to work with any voice platform’s AI layer.

Additionally, Amazon has introduced a subscription model for Alexa+ to fund its new, more advanced capabilities.

This pace of innovation is producing more advanced and personalized voice experiences, particularly in areas such as home automation.

In summary, voice AI is moving fast. By learning to combine the Claude API with Alexa, you’ll be at the forefront of voice development. The experiences you build now can evolve as models improve. Today’s chat-powered Alexa skills are just the first chapter of a voice-first future.

Frequently Asked Questions

Q1: What are some alternatives to using Claude API with Alexa?

A1: You could use other LLMs like OpenAI’s GPT models, Google’s Gemini, or open-source models. Amazon also has its own Titan models. However, Claude is known for its helpfulness and safety focus. The integration steps are similar for any API-based AI model.

Q2: Do I need special permission from Amazon to use Claude in my Alexa skill?

A2: No special permission is needed beyond normal skill creation. Just comply with Amazon’s skill policies (content rules, privacy, etc.) and Anthropic’s terms of service for Claude. Anthropic does not offer a separate demo key. Even for testing and demos, you need your own API key from the Anthropic Console, and all usage is billed per token.

Q3: How do I ensure Claude’s responses are short enough for voice?

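A3: Ask for brevity directly in the prompt, for example “Keep the answer under 50 words,” and set the max_tokens parameter as a hard cap. Note that max_tokens simply stops generation when the limit is hit, which can cut a sentence off mid-thought, so it works best as a backstop to the prompt instruction. You can also trim the response in code before sending it to Alexa. Always test to balance completeness with brevity.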
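The code-side trim can keep whole sentences until a word budget runs out, so Alexa never stops mid-sentence. This is a minimal sketch that makes two assumptions:

- It uses a plain word budget of 50 words, matching the example prompt.
- It splits sentences with a naive regex that will not handle every abbreviation.

```python
import re


def trim_for_voice(text: str, max_words: int = 50) -> str:
    """Keep whole sentences until the word budget is reached."""
    # Naive sentence split: break after ., !, or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    kept, used = [], 0
    for sentence in sentences:
        words = len(sentence.split())
        if used + words > max_words:
            break
        kept.append(sentence)
        used += words
    if kept:
        return " ".join(kept)
    # The first sentence alone is too long: cut at the word limit instead.
    return " ".join(text.split()[:max_words]).rstrip(",;:.!?") + "."
```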

Q4: Can I use Claude API & Alexa for languages other than English?

A4: Yes, Claude supports multiple languages to an extent. Alexa also supports many locales. However, voice accuracy and Claude’s knowledge may vary by language. If you target non-English users, test specifically in that language.

Q5: Will using Claude API slow down my Alexa skill?

A5: There is some added latency (often ~1-3 seconds) because Claude needs time to generate a response. Alexa skills can handle this, but users expect quick answers. You can play a brief wait message such as “Let me think…” through Alexa’s Progressive Response API, or keep answers short. Overall, a 2-second delay is usually acceptable for the richer answers you get.
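Alexa’s Progressive Response API lets a skill speak a short message while it works on the full answer. The skill POSTs a VoicePlayer.Speak directive to the directives endpoint, using the apiEndpoint and apiAccessToken from the incoming request. The sketch below only builds that HTTP request with the standard library; sending it and handling errors are left out. The spoken wording is our own example. Check Amazon’s Progressive Response reference for current limits before relying on this.

```python
import json
import urllib.request


def build_progressive_response(event: dict,
                               speech: str = "Let me think about that.") -> urllib.request.Request:
    """Build (but do not send) a Progressive Response request for this Alexa event."""
    system = event["context"]["System"]
    body = {
        "header": {"requestId": event["request"]["requestId"]},
        "directive": {"type": "VoicePlayer.Speak", "speech": speech},
    }
    return urllib.request.Request(
        url=system["apiEndpoint"].rstrip("/") + "/v1/directives",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": "Bearer " + system["apiAccessToken"],
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

In a handler, you would send this with urllib.request.urlopen and a short timeout just before calling Claude. A failure here should never block the real answer.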

Q6: How do I handle Claude giving incorrect information?

A6: Even advanced AI can make mistakes, and code cannot reliably tell a confident falsehood from a fact. What you can do is screen replies for obvious problems and have a safe fallback. For example, if an answer is empty or contains a hedge like “I don’t know, but”, respond with a polite fallback instead. Encouraging Claude to provide sources can also help (with caution, as it may fabricate them). Regular testing and user reports are key to catching issues.
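A post-check like this takes only a few lines. In the sketch below, the marker phrases are a hypothetical starting list that you would extend from your own test logs. Curly apostrophes are normalized because model output often uses them. A check like this will not catch a confidently wrong answer.

```python
FALLBACK = "I'm not sure about that one. Could you ask it another way?"

# Hypothetical markers of a low-confidence reply; extend from your own test logs.
UNCERTAIN_MARKERS = ("i don't know, but", "i'm not sure, but", "i cannot verify")


def safe_reply(answer: str) -> str:
    """Return the answer if it passes basic checks, else a spoken fallback."""
    text = answer.strip()
    if not text:
        return FALLBACK
    # Lowercase and treat curly apostrophes as straight ones before matching.
    lowered = text.lower().replace("\u2019", "'")
    if any(marker in lowered for marker in UNCERTAIN_MARKERS):
        return FALLBACK
    return text
```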

Q7: Can I monetize my Claude-powered Alexa skill?

A7: Yes, you can publish and monetize skills (e.g., with in-skill purchases). However, remember that Claude API usage has its own cost. Make sure your revenue model covers API costs. Also follow Amazon’s policy for paid features.
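To check that revenue covers API costs, a back-of-the-envelope estimate is enough, because Anthropic prices input and output tokens separately, per million tokens. The sketch below turns that into a monthly figure. Every number in the example call is a hypothetical placeholder. Substitute current prices from Anthropic’s pricing page and token counts from your own logs.

```python
def monthly_claude_cost(requests_per_day: int,
                        input_tokens_per_request: int,
                        output_tokens_per_request: int,
                        input_price_per_mtok: float,
                        output_price_per_mtok: float,
                        days: int = 30) -> float:
    """Estimate monthly API spend in dollars (prices are per million tokens)."""
    requests = requests_per_day * days
    input_cost = requests * input_tokens_per_request * input_price_per_mtok / 1_000_000
    output_cost = requests * output_tokens_per_request * output_price_per_mtok / 1_000_000
    return input_cost + output_cost


# Hypothetical placeholders: 1,000 questions a day, 500 input and 150 output tokens each,
# priced at $1 per million input tokens and $5 per million output tokens.
estimate = monthly_claude_cost(1_000, 500, 150, 1.0, 5.0)
print(f"Estimated monthly cost: ${estimate:.2f}")  # → Estimated monthly cost: $37.50
```

Comparing this figure with expected in-skill purchase revenue shows quickly whether a cheaper model or caching is needed.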

Conclusion

Voice assistants are becoming more intelligent and personal, and combining the Claude API & Alexa is a powerful way to lead in this space. By integrating Anthropic’s advanced AI with Amazon’s voice platform, you can create apps where users feel truly heard and helped. In this guide we covered everything from setting up accounts, to creating skills, to design best practices and trust considerations. We saw how Claude can give Alexa a “brain” that answers naturally and in-depth. We also emphasized the importance of good conversation design and user trust.

As Alexa and other assistants adopt generative AI (like Amazon’s new Alexa+), skills built with Claude will be ahead of the curve. Remember to continually test, learn from analytics, and refine your voice flows. The result will be a seamless, “unbeatable” experience where users feel like they’re chatting with a smart companion. Stay optimistic: the future of voice is bright and user-first, and with Claude API & Alexa, you’re on the cutting edge of making that future a reality.

Next Steps

- Translate the Article: Want to reach a global audience? Translate this guide into other languages (e.g. Spanish, Chinese, German) so non-English speakers can also learn how to build voice AI apps.

- Generate Blog-Ready Images: Use DALL·E or another image tool to create custom visuals for your blog post. For example, an image of a happy user speaking to a futuristic smart speaker, or a diagram showing the integration flow of Alexa -> Claude API -> Voice.

- Start a New Article: Inspired to write more? Try a related topic like “How to Build an Alexa Skill Using ChatGPT API” or “The Future of Voice Assistants in 2025”. Each new article helps you become a go-to expert in voice AI development.