How to Build Trust in AI Systems: The Ultimate 2025 Blueprint

How to Build Trust in AI Systems: Key Takeaways

Building trust in AI isn’t optional: it’s the foundation that powers adoption, compliance, and sustainable growth. These actionable insights help startups and SMBs embed trust deeply into their AI systems right now.

- Build trust on the 7 pillars: prioritize transparency, fairness, reliability, accountability, privacy, ethics, and stakeholder engagement to create AI users can confidently rely on.

- Make AI decisions transparent with tools like LIME and SHAP, clear user communication, and plain-language explanations to boost adoption and reduce support calls by up to 30%.

- Embed ethical AI practices through regular bias audits, inclusive datasets, and societal impact assessments to avoid reputational damage and legal risks while earning customer trust.

- Prioritize privacy and security with strong encryption, anonymization, and proactive threat monitoring to meet regulations like the GDPR and the EU AI Act and build user confidence.

- Implement clear accountability frameworks with defined ownership, human-in-the-loop oversight, and AI governance boards to ensure ethical, responsible AI deployment.

- Ensure AI reliability and robustness using stress testing, real-time monitoring, and automated error handling to maintain consistent performance and user trust.

- Design AI with users front and center by applying human-centered design principles, usability testing, and accessibility standards to make AI intuitive and trustworthy.

- Engage diverse stakeholders continuously through feedback loops, transparent reporting, and localized communication to build inclusive, adaptable AI systems that scale globally.

Trust in AI is a strategic advantage—dive into the full blueprint to transform your AI from a black box into a trusted teammate your users champion.

Introduction

Nearly 4 in 10 organizations paused their AI projects last year—not because of budget or skills, but because they didn’t trust the technology.

Decision makers sit at the center of that pause: their confidence determines whether an AI system ships or gets put on hold. What if you could flip that script and turn trust into your startup’s biggest competitive edge?

Building trust in AI isn’t just about fancy algorithms or slick interfaces. It’s about creating systems that feel reliable, fair, and transparent to the people who use them every day. Whether you’re a startup launching your first AI feature or an SMB scaling smart automation, trust is the foundation that makes users actually stick around—and regulators keep their hands off your neck.

You’ll discover how mastering seven core pillars—from transparency and fairness to privacy and accountability—drives increased AI adoption and responsible AI usage by making complex AI something your customers and partners understand and value.

Here’s a taste of what’s ahead:

- How clear explanations can transform AI from a black box into a trusted teammate

- Practical ways to audit and reduce bias before it becomes a PR crisis

- Smart privacy safeguards that do more than check regulatory boxes

- Building governance that keeps humans in control without slowing innovation

All grounded in real-world examples and tailored for fast-moving teams with limited resources.

Imagine launching AI tools your users not only tolerate but recommend. The secret? Owning trust from day one, not as an afterthought.

To start, let’s break down why trust is the real dealbreaker—and core to your AI success story.

Understanding Trust in AI Systems: Foundations and Importance

Trust in AI means users feel confident that AI systems act reliably, fairly, and transparently. It depends on meeting users’ expectations and on helping them understand how the AI works, where its limits are, and how it reaches decisions. It’s about knowing AI decisions are clear, ethical, and respect privacy.

Why Trust Is a Dealbreaker for Adoption

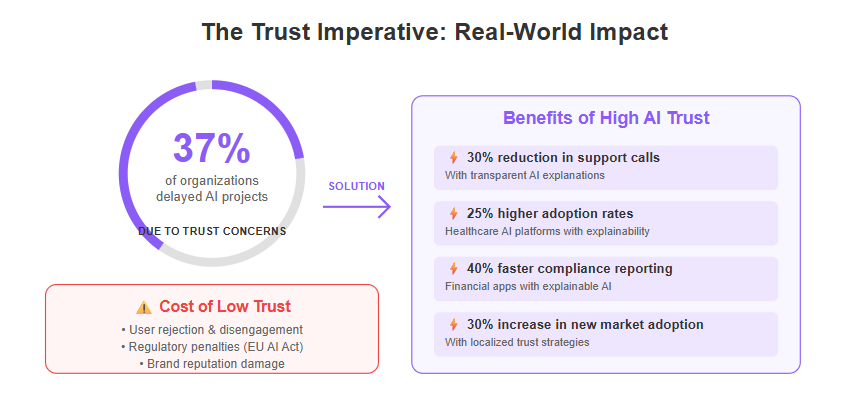

Without trust, SMBs, startups, and enterprises hesitate to adopt AI, fearing errors, biases, or legal risks. A 2024 report shows 37% of organizations delayed AI projects due to trust concerns.

Common risks of low trust include:

- User rejection or disengagement

- Regulatory penalties under laws like the EU AI Act

- Damage to brand reputation and lost revenue

Low trust can negatively affect an organization's willingness to assign AI to critical roles, limiting its use in decision-making or sensitive operations.

These risks amplify when AI decisions affect customers or sensitive data.

The 7 Pillars of Trustworthy AI

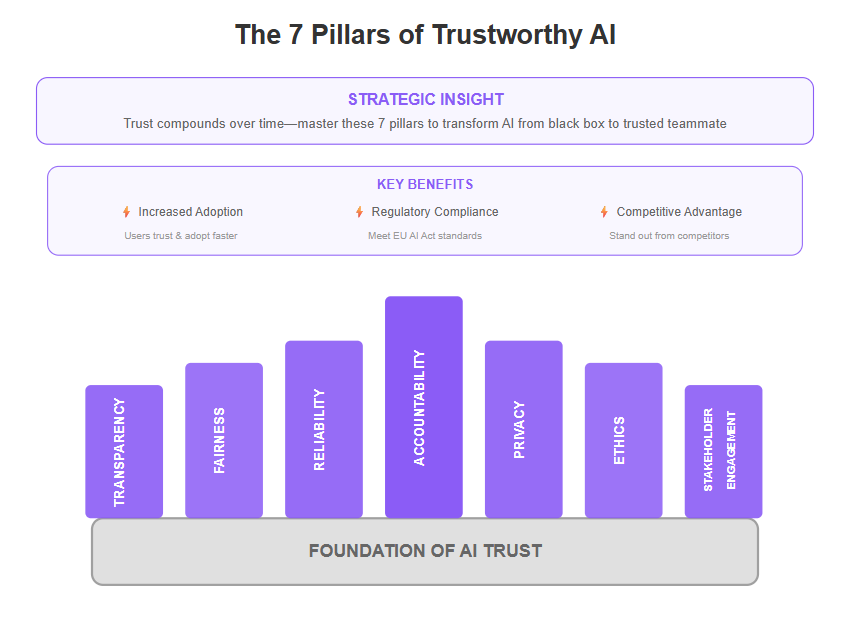

Building trust means mastering these core dimensions:

- Transparency: Explain how AI makes decisions

- Fairness: Remove bias for equitable outcomes

- Reliability: Ensure consistent and accurate results

- Accountability: Define who owns AI outcomes

- Privacy: Protect user data with strong safeguards

- Ethics: Align AI with societal values and adhere to ethical standards

- Stakeholder Engagement: Include users, developers, and regulators in feedback loops so ethical issues surface early

Together, these create a trust framework your AI can stand on reliably.

The 2025 Trust Landscape: What’s Shaping It?

With regulators tightening the rules, most visibly through the EU AI Act, organizations must build trust proactively. User expectations are evolving too: people now expect AI to be explainable and fair by default, and they want clear explanations of the decisions that affect them.

Picture this: a startup deploying AI-powered customer support that clearly flags when users talk to AI versus humans, earning quick trust and boosting satisfaction.

Takeaways You Can Use Now

- Start by mapping AI trust risks specific to your industry and audience.

- Use transparent communication to notify users about AI involvement; don’t leave them guessing.

- Adopt regular audits of fairness, privacy, and performance, with a focus on continuous improvement — trust is earned continuously.

Thinking of trust as a product feature—not an afterthought—helps you build AI your users will actually rely on confidently. That’s the difference between cutting-edge tech and real-world success.

Transparency: Making AI Decisions Clear and Understandable

Transparency is the cornerstone of trust in AI. When users understand how AI systems reach decisions, they’re far more likely to adopt and rely on them. Understandable outputs also let stakeholders judge for themselves how reliable an AI-generated result is.

Explainability techniques like LIME and SHAP help break down complex AI outputs into digestible insights.

- LIME explains a single prediction by fitting a simple, interpretable model around it, showing which features influenced that specific decision.

- SHAP assigns each input feature an attribution score based on Shapley values, showing how much each factor pushed a prediction up or down.

These methods turn black-box models into interpretable stories, boosting user confidence and enabling smarter business decisions. Using explainable AI tools is essential for building trust and ensuring transparency when deploying AI models in real-world applications.
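To make the idea of attribution scores concrete, here is a minimal sketch that computes exact Shapley values for a tiny scoring function using only the standard library. It is not the SHAP library's API. The `loan_score` model, its weights, the applicant, and the "average applicant" baseline are all hypothetical, and brute-force enumeration like this only works for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley attribution of model(instance) relative to model(baseline)."""
    names = list(instance)
    n = len(names)

    def value(subset):
        # Features in `subset` take the instance's value, the rest stay at baseline.
        mixed = {k: (instance[k] if k in subset else baseline[k]) for k in names}
        return model(mixed)

    result = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without = set(subset)
                total += weight * (value(without | {name}) - value(without))
        result[name] = total
    return result


def loan_score(applicant):
    # Hypothetical linear score, invented for illustration only.
    return (0.5 * applicant["income"]
            - 0.8 * applicant["debt"]
            + 0.2 * applicant["years_employed"])


applicant = {"income": 60.0, "debt": 20.0, "years_employed": 5.0}
average = {"income": 50.0, "debt": 10.0, "years_employed": 4.0}

attributions = shapley_values(loan_score, applicant, average)
for feature, score in sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{feature}: {score:+.2f}")
```

The scores always add up to the gap between this applicant's score and the baseline score, which is what makes them easy to present as "what moved the result, and by how much."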

Clear communication means more than tech jargon.

- Notify users clearly when AI is involved

- Explain decision logic in plain language

- Highlight any uncertainties or limitations

Picture this: a startup uses AI to approve loan applications. When applicants receive a transparent explanation of why they were accepted or denied, user satisfaction jumps, cutting support calls by 30%. Transparency isn’t just a nice-to-have—it’s a shield against mistrust and regulatory penalties.
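As a rough sketch of the three communication habits above, the function below turns attribution scores into a short plain-language notice: it discloses that AI was involved, names the top factors, and flags uncertainty. The function name, the wording, and the 0.8 review threshold are assumptions made for illustration, not a standard.

```python
def explain_decision(outcome, attributions, confidence, top_n=2, review_below=0.8):
    """Build a plain-language notice from per-feature attribution scores."""
    # Rank factors by how strongly they moved the score, ignoring zero effects.
    ranked = sorted(
        (item for item in attributions.items() if item[1] != 0),
        key=lambda item: abs(item[1]),
        reverse=True,
    )
    # Always disclose that an automated system was involved.
    lines = [f"An automated system reviewed your application. Result: {outcome}."]
    for feature, score in ranked[:top_n]:
        effect = "counted in your favor" if score > 0 else "counted against you"
        lines.append(f"- Your {feature.replace('_', ' ')} {effect}.")
    # Surface uncertainty instead of hiding it.
    if confidence < review_below:
        lines.append("The system was not fully confident, so a person will double-check this result.")
    return "\n".join(lines)


message = explain_decision(
    "declined",
    {"income": 5.0, "debt": -8.0, "years_employed": 0.2},
    confidence=0.65,
)
print(message)
```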

But transparency faces real challenges.

Advanced AI models like deep neural networks are inherently complex. Large language models, in particular, introduce unique transparency challenges due to their scale and the difficulty in tracing how they generate content. Balancing openness without overwhelming users is tricky. Companies often struggle between revealing enough detail and protecting proprietary algorithms.

Real-world wins show transparency works.

- Healthcare AI platforms that explain diagnoses earned 25% higher doctor adoption rates

- Financial apps using explainable AI improved regulatory compliance reporting speed by 40%

These success stories underline that transparency builds bridges between AI and its users. Demonstrating transparency in real-world scenarios is what convinces users that a system will perform reliably in practice.

For deeper strategies, check out our subpage: How Explainable AI Can Transform Trust in Automated Decisions.

When you make AI decisions clear and understandable, you’re not just following best practices—you’re setting a new standard of trust that accelerates adoption, cuts risk, and powers growth.

Quotable:

- “Transparency turns AI from a mystery into a trusted teammate.”

- “Explaining AI decisions isn’t optional in 2025—it’s essential.”

- “User trust skyrockets when AI opens its black box.”

Ethical AI Development: Fairness, Inclusion, and Bias Mitigation

Ethical AI is no longer just a checkbox; it’s a strategic imperative for startups and SMBs aiming for long-term trust and adoption.

AI ethics refers to the foundational principles, frameworks, and guidelines that ensure AI systems are designed, implemented, and used in ways that are moral, transparent, and aligned with human values. Key aspects of AI ethics include fairness, transparency, and accountability, which are essential for building trust and ensuring regulatory compliance.

At its core, ethical AI means actively identifying and reducing bias through regular audits and bias impact analysis to ensure algorithms don’t reinforce stereotypes or unfair outcomes.

Diverse, representative datasets are essential. Imagine training an AI on data that reflects only a narrow user group—it’s like building a product blindfolded.

Here’s what ethical data practices look like in action:

- Curate datasets from varied demographics and contexts, ensuring high data quality for trustworthy AI

- Use fairness metrics to measure equity across groups

- Regularly update datasets to reflect changing populations

The “7 Proven Strategies to Ensure Ethical AI Development in 2025” highlights these as foundational moves toward fair AI systems.
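For a sense of what "fairness metrics" can look like in practice, here is a small sketch that compares selection rates across groups. The group names and counts are made up. The 0.8 cut-off follows the widely cited four-fifths rule of thumb, which is a screening heuristic and not a legal test or a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive outcomes per group from (group, was_selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest


# Hypothetical screening outcomes for two groups of 100 candidates each.
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
parity_gap = max(rates.values()) - min(rates.values())

print(rates)
print(f"disparate impact ratio: {ratio:.3f}, parity gap: {parity_gap:.2f}")
if ratio < 0.8:
    print("Below the four-fifths heuristic: worth a closer bias review.")
```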

Beyond data, conducting societal impact assessments helps foresee broader repercussions of AI deployments—from hiring tools to customer service bots.

Continuous ethical monitoring isn’t a one-off task. It involves:

- Ongoing reviews of model outputs for unintended biases

- Incorporating stakeholder feedback for course corrections

- Instituting accountability checkpoints throughout AI’s lifecycle

This proactive approach shields companies from costly reputational damage while fostering greater user acceptance. It also delivers tangible benefits such as increased trust, improved efficiency, and cost savings for organizations and users.

Picture this: a startup launches a recruitment AI without bias checks, unintentionally screening out qualified candidates from underrepresented groups. The fallout isn’t just reputation: it’s lost opportunity and possible legal exposure. Candidates from many different backgrounds can be unfairly affected before anyone notices, which is why trust and transparency have to be designed in.

Ethical design acts as your safeguard, building AI that respects human values and drives inclusive innovation.

Takeaways you can act on today:

- Schedule regular bias audits, monthly or quarterly depending on AI complexity.

- Build or source datasets reflecting your full user base, not just the majority segment.

- Set up clear ethical oversight with defined roles and responsibilities.

When you embed fairness and inclusion into your AI DNA, you’re not just avoiding pitfalls—you’re building a product that users trust and champion.

Ethical AI isn’t hypothetical—it’s a practical edge you can seize now to power smarter, fairer growth.

Privacy and Security: Protecting Data to Build Confidence

Building trust in AI starts with solid privacy and security foundations that meet today’s tough regulatory standards: think the GDPR, the EU AI Act, and beyond. Protecting personal data is what keeps AI use ethical and keeps stakeholders on your side.

At the core are three data protection principles you need on lockdown:

- Encryption of data at rest and in transit

- Anonymization to strip personal identifiers from datasets

- Secure storage using the latest cloud and on-premises safeguards

These practices shield sensitive info from leaks and keep your AI compliant with growing legal demands.
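One lightweight way to strip direct identifiers before data reaches a training set is keyed hashing, sketched below with Python's standard `hmac` and `hashlib` modules. Strictly speaking this is pseudonymization, which is weaker than full anonymization: the remaining fields can still re-identify someone, and anyone holding the key can link tokens back to known values. The field names and the hard-coded key are placeholders for illustration; a real key would come from a secrets manager.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a stable keyed hash (HMAC-SHA256)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def strip_identifiers(record: dict, id_fields: set, secret_key: bytes) -> dict:
    """Return a copy of the record with direct identifiers replaced by tokens."""
    cleaned = {}
    for field, value in record.items():
        if field in id_fields:
            cleaned[field] = pseudonymize(str(value), secret_key)
        else:
            cleaned[field] = value
    return cleaned


# Demo key only. Never hard-code a real key in source control.
DEMO_KEY = b"replace-with-a-key-from-your-secrets-manager"

raw = {"email": "Jane.Doe@example.com", "plan": "pro", "tickets_opened": 3}
safe = strip_identifiers(raw, {"email"}, DEMO_KEY)
print(safe)
```

Because the hash is keyed and deterministic, the same customer maps to the same token across datasets, so you can still join records without storing the raw email.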

Protecting Against AI-Specific Threats

AI systems face evolving risks that traditional IT security might not cover. Examples include:

- Model inversion attacks revealing private training data

- Data poisoning that corrupts AI decision-making

- Adversarial inputs designed to fool algorithms

Defending against these requires layered security approaches blending technical shields and constant monitoring. It is crucial to ensure that AI operates within secure and ethical boundaries, maintaining control measures and compliance to build trust and protect sensitive data.

Learn about the four-phase security approach to keep in mind for your AI transformation.

Communicating Privacy With Transparency

Users won’t trust AI that feels like a black box on data use. Proactively sharing:

- Clear privacy policies

- How data is collected, stored, and used

- Security measures in place

...goes a long way to build confidence and reduce misunderstandings.

Learning From Real-World Privacy Failures

Remember the 2023 health app breach exposing thousands of patient records? It cost companies millions and shattered user trust overnight.

Takeaways:

- Even small missteps have massive fallout

- Prioritize privacy by design from day one

- Regularly audit security to catch weak spots before attackers do

Embracing these safeguards is your smartest move to keep AI trustworthy in 2025 and beyond.

“Privacy isn’t just compliance; it’s a pledge to your users.”

“Secure AI isn’t optional—it’s the foundation of lasting trust.”

Picture this: You’re launching an AI chatbot that handles customer queries. Imagine the impact if your users knew exactly how their data is protected with encryption and transparency. That’s trust in action.

Locking down data with proven methods and honest communication turns potential anxiety into user confidence—and that’s how you create AI systems people believe in.

Accountability and Governance: Human Oversight and Clear Roles

Clear accountability frameworks are the backbone of trustworthy AI systems. They define who owns AI outputs and who is responsible throughout the AI lifecycle—from development to deployment and monitoring. It is crucial to ensure that AI systems operate within their intended function to maintain accountability and prevent misuse.

Building Governance That Works

Effective governance structures ensure AI decisions are ethical and reliable. This means:

- Assigning explicit ownership for AI projects

- Establishing cross-functional teams with clear duties

- Implementing policies for ethical use and compliance

- Applying identity and access management so only authorized people and services can reach AI data and models

Think of governance as the AI system’s control tower, guiding operations and catching issues early.

Learn more in AI Governance 2025: A CTO’s Practical Guide to Building Trust and Driving Innovation.

Human-in-the-Loop: The Safety Net

In high-stakes scenarios, human-in-the-loop approaches keep AI decisions balanced and sensible. Humans review, validate, or override AI outputs when errors or ethical dilemmas arise. Involving human users in this process is crucial for ensuring ethical standards, accurate decision-making, and maintaining trust in AI systems.

- This reduces risks of automated mistakes

- It adds a layer of moral judgment machines lack

- It fosters user confidence by connecting AI with real-world accountability

Imagine a loan application flagged by AI—before rejection, a person reviews the case to ensure fairness.
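The loan scenario above can be sketched as a simple routing rule: nothing is rejected automatically, and low-confidence approvals also go to a person. The `Decision` fields, the queue names, and the 0.9 threshold are assumptions for illustration; the right threshold depends on your own risk tolerance and review capacity.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    applicant_id: str
    outcome: str       # "approve" or "reject", as proposed by the model
    confidence: float  # model confidence between 0 and 1


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Decide whether a model output can be applied or needs a human reviewer."""
    # Rejections always get a human look before they reach the applicant.
    if decision.outcome == "reject":
        return "human_review"
    # Approvals are only automated when the model is confident.
    if decision.confidence < min_confidence:
        return "human_review"
    return "auto_approve"


queue = [
    Decision("a-101", "approve", 0.97),
    Decision("a-102", "reject", 0.99),
    Decision("a-103", "approve", 0.62),
]
for item in queue:
    print(item.applicant_id, "->", route(item))
```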

Committees That Keep AI Honest

Many organizations set up AI oversight committees, such as:

- Ethics boards assessing societal impacts

- Compliance teams monitoring regulatory adherence

- Risk committees evaluating operation safety

These groups promote transparency and collective responsibility.

For hands-on auditing, explore “5 Cutting-edge Tools to Audit AI Systems for Greater Trustworthiness” to streamline oversight without overloading teams.

Transparency in Roles and Decisions

Transparency isn’t just about how AI works; it’s about showing who’s responsible for key decisions. When users and stakeholders can trace decisions back to individuals or teams, trust grows.

Quotable nuggets:

"Accountability in AI is about knowing who’s calling the shots, not just what the algorithm says."

"Human oversight turns AI from a black box into a trusted partner."

"Strong governance is the silent guardian that keeps AI ethical and reliable."

Picture this: In a startup scaling fast with limited resources, assigning clear AI ownership can save costly missteps and build trust with early users.

Accountability and governance transform AI from an isolated technology into an integrated, trustworthy collaborator—reminding us that every smart algorithm needs a smart human behind it.

Reliability and Robustness: Ensuring Consistent AI Performance

Building reliable, fault-tolerant AI systems is critical for maintaining trust over time. When your AI stumbles or behaves unpredictably, users quickly lose confidence—and that hurts adoption. Reliable AI systems lead to significant improvements in productivity and efficiency, making them essential for business success.

Testing Strategies to Keep AI Rock-Solid

To ensure consistency, deploy a mix of testing techniques:

- Stress Testing: Push AI models to extreme scenarios to identify breaking points.

- Scenario-Based Validation: Simulate real-world conditions your AI will face. This testing provides critical insights into system weaknesses and areas for improvement, helping to enhance AI reliability.

- Real-Time Monitoring: Continuously track performance and flag anomalies in production.

Each approach catches different failure modes, making your system more robust overall. Picture a startup relying on AI to auto-route customer requests—stress tests ensure peak hours won’t cause crashes or misroutes.

Automated Error Handling: Catch and Correct on the Fly

Trust grows when AI doesn’t just fail silently—it detects and recovers from errors proactively. Best practices include:

- Error Detection: Use AI health dashboards and alerting to spot issues fast.

- Handling Procedures: Implement fallback mechanisms or human alerts for glitches.

- Automated Corrections: Trigger automated model recalibration or rollback when drift occurs.

These systems reduce friction and downtime, keeping user experience smooth even under pressure.
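Here is a minimal sketch of the "fail loudly, recover gracefully" pattern for a request-routing model. The queue names, the `alert` callback, and the choice of a `general` fallback queue are hypothetical; the point is that a crash or a nonsensical output triggers both an alert and a safe default instead of a silent failure.

```python
import logging

logger = logging.getLogger("ai_health")

VALID_QUEUES = {"billing", "technical", "general"}


def route_request(model, text, alert):
    """Route a customer request, falling back to a safe queue if the model misbehaves."""
    try:
        queue = model(text)
        # Treat unexpected outputs as failures, not just exceptions.
        if queue not in VALID_QUEUES:
            raise ValueError(f"unexpected queue {queue!r}")
        return queue, "model"
    except Exception as exc:
        logger.warning("routing model failed: %s", exc)
        alert(f"Routing model failed: {exc}")  # notify a human or on-call channel
        return "general", "fallback"


def broken_model(text):
    raise RuntimeError("model server timed out")


alerts = []
result = route_request(broken_model, "I was charged twice", alerts.append)
print(result, alerts)
```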

Challenges: Model Drift and Dynamic Environments

AI models decay when input data or environments change—a phenomenon known as model drift. This demands ongoing validation and adaptation since outdated models can erode trust quickly.

Think of it like a GPS app needing constant map updates; without them, directions become unreliable. Regular re-training and monitoring help maintain accuracy despite shifting data landscapes.
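One common way to put a number on drift is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks in production. The sketch below is a simplified standard-library version with equal-width bins. The sample data is synthetic, and the 0.25 alert level is a frequently quoted rule of thumb, not a universal standard.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a training sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all training values identical; avoid dividing by zero

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = [float(v) for v in range(100)]  # synthetic training-time feature values
live_same = list(training)                 # production data that has not moved
live_shifted = [v + 30 for v in training]  # production data that has drifted upward

print(f"PSI, no drift: {psi(training, live_same):.3f}")
print(f"PSI, shifted:  {psi(training, live_shifted):.3f}")
if psi(training, live_shifted) > 0.25:
    print("Significant drift: schedule re-validation or retraining.")
```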

For further insights, see Establishing Trust in AI Systems: 5 Best Practices for Better Governance - Architecture & Governance Magazine.

Quotable Insight

“Reliable AI isn’t just about perfection—it’s about graceful recovery and seamless user experience under all conditions.”

“Stress test early, monitor continuously, and automate error fixes—these are your trust-building trifecta.”

For deeper tactics, see “Unlocking Reliability in AI Systems: Top Techniques That Build Trust” for a comprehensive playbook on pushing your AI’s uptime and accuracy beyond expectations.

In fast-paced SMB environments, prioritizing reliability and robustness creates a foundation for users to depend on your AI—not just tolerate it. It turns AI from a risky gamble into a trusted teammate powering smarter decisions.

Human-Centered AI Design: Prioritizing User Experience and Ethical Considerations

Human-centered AI design flips the script on traditional tech-first approaches by putting real people front and center. It’s more than UI polish: it’s about building systems that truly align with user values, needs, and ethical expectations. Designing for positive human-AI interactions builds trust, because users feel confident and supported when they communicate and collaborate with AI systems.

Why Human-Centered Design Matters

At its core, this approach ensures AI doesn’t just work—it works for people. It tackles common trust blockers like confusing interfaces, non-inclusive design, and ethical blind spots.

Key design principles include:

- Empathy-driven workflows that match how users naturally think and behave

- Ethical transparency so users understand AI’s role and limitations

- Inclusive accessibility accommodating diverse abilities and backgrounds

These elements combine to create AI experiences users can intuitively trust and rely on.

Usability Testing & Accessibility

Trust skyrockets when users feel AI tools are designed with them, not for them. Usability testing with diverse user groups reveals hidden hurdles and uncovers how real humans interpret AI outputs.

Accessibility isn’t optional—it’s essential. Designing for various cognitive and physical abilities makes products more welcoming and widens adoption.

- Test regularly with diverse personas

- Iterate based on honest user feedback

- Ensure compliance with accessibility standards (like WCAG)

These steps shrink resistance and boost user confidence.
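Some accessibility checks are easy to automate. As one example, the sketch below computes the WCAG 2.x contrast ratio between a text color and its background, which you could run against the colors used for AI disclosures or explanations. The sample colors are arbitrary, and contrast is only one of many WCAG criteria, so treat this as a single check rather than a compliance test.

```python
def _linear(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio from 1 (identical colors) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


AA_NORMAL_TEXT = 4.5  # WCAG AA minimum for normal-size text

for fg, bg in [("#000000", "#ffffff"), ("#767676", "#ffffff"), ("#aaaaaa", "#ffffff")]:
    ratio = contrast_ratio(fg, bg)
    verdict = "passes" if ratio >= AA_NORMAL_TEXT else "fails"
    print(f"{fg} on {bg}: {ratio:.2f} ({verdict} AA for normal text)")
```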

Real-World Impact in 2025

Picture this: A startup’s AI-driven app routinely gathers user insights, refining features to address concerns about data use and decision fairness. By understanding and respecting customer preferences, the app further tailors its AI features, which helps build trust and loyalty. Over six months, customer retention climbs 20% and support calls drop by 30%—all because users trust the product.

Check out “Building Trust in AI and Agile: Your Ethics Playbook for 2025” for detailed case studies and practical takeaways.

Takeaways You Can Use Today

- Start small with empathy interviews: understand users’ fears and motivations before building

- Integrate iterative usability tests early and often to catch trust blockers fast

- Build transparency into the UX—clear signals about AI’s role help demystify decisions

Human-centered AI isn’t just kinder—it’s smarter business. When your AI respects and reflects real human needs, trust isn’t a bonus, it’s built right in.

"Trust in AI starts with trust in design—it’s a conversation, not a code snippet."

"Design kindness and clarity into your AI, and users won’t just tolerate it—they’ll champion it."

"Your product’s face to the user is your AI’s trust passport—make it welcoming."

Stakeholder Engagement and Feedback Loops: Building Trust Through Inclusion

Involving a diverse range of stakeholders, from developers and end-users to regulators and impacted communities, is essential for building trust in AI systems. Their engagement keeps AI development aligned with ethical principles, transparent governance, and the needs of everyone involved. Multiple perspectives shape AI outcomes and help prevent the blind spots that erode confidence.

Fostering Open Dialogue: Practical Steps

To actively engage stakeholders throughout the AI lifecycle, create channels for continuous feedback, such as:

- Regular workshops and focus groups to gather insights during development

- Open forums or online communities for real-time user engagement

- Transparent reporting of AI impacts shared with regulators and the public

These ongoing conversations keep trust alive by showing you listen and adapt.

Transparent Feedback Channels to Encourage Trust

Establishing clear, accessible ways for users to submit input or complaints matters as much as collecting feedback. Consider:

- Intuitive feedback forms embedded in apps

- Dedicated support teams trained to escalate AI-related issues

- Public dashboards showing how feedback influences system updates

Building visible feedback loops helps users feel valued and in control.

Leveraging Insights to Improve AI Systems

Stakeholder feedback isn’t just data—it’s a rich resource to iterate and refine AI trustworthiness. Use insights to:

- Identify bias or fairness issues early

- Adjust features to meet diverse user needs

- Strengthen compliance with evolving regulations

Incorporating stakeholder feedback is essential for improving AI solutions, ensuring they are trusted, user-accepted, and compliant with regulatory requirements.

Best practices from the guide Establishing Trust in AI Systems: 5 Best Practices for Better Governance - Architecture & Governance Magazine recommend integrating user feedback as a core part of AI governance.

Cultural and Regional Sensitivity Matters

When your AI serves global markets like the US, UK, and LATAM, remember:

- Cultural norms affect how users perceive and trust AI

- Localization includes transparent communication tailored to local expectations

- Engaging regional stakeholders prevents missteps and builds broader acceptance

As AI extends into more aspects of society, its influence crosses cultures and regions, so trust strategies need to be sensitive to these diverse contexts.

Data shows that products with localized trust strategies increase adoption rates by up to 30% in new markets.

Shareable Insights

“Trustworthy AI listens first—building feedback loops is the smartest shortcut to better systems.”

“Inclusive stakeholder engagement isn’t optional; it’s the foundation of lasting AI trust.”

“Your AI’s transparency starts with clear, open channels for user voices to be heard.”

Picture this: a startup hosting monthly virtual town halls where users, regulators, and developers debate AI impacts live—turning feedback into rapid fixes and stronger trust.

Engaging diverse stakeholders through continuous, transparent feedback loops builds resilient, trustworthy AI that scales globally with local relevance. Prioritize inclusion to turn complex AI systems into accessible, dependable tools your users actually want to rely on.

Integrating Trust Principles into Your AI Development Roadmap for 2025

Embedding trust at every stage of your AI project isn’t optional anymore — it’s essential for sustainable success. Integrating AI responsibly within trust frameworks ensures that ethical guidelines, user trust, and effective control mechanisms are embedded throughout the system.

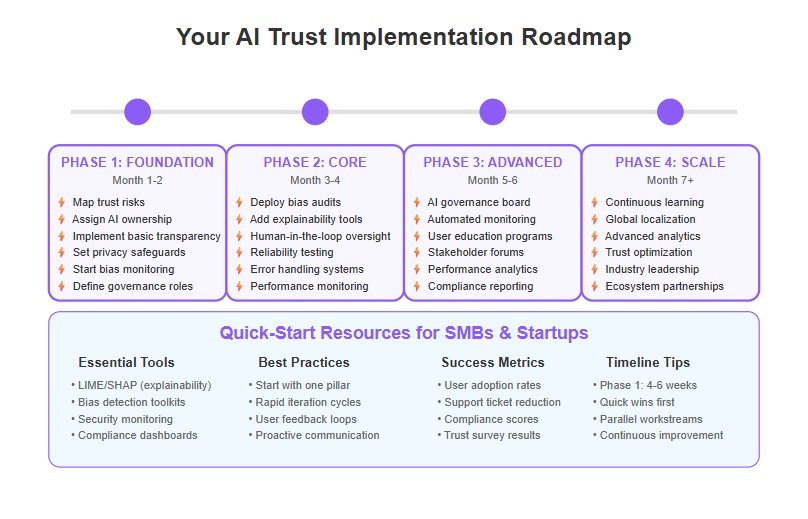

Start by building a strategic framework that covers these core pillars:

- Transparency: Explainable models and clear user communication

- Ethics: Bias audits, inclusive datasets, and societal impact reviews

- Privacy: Data protection aligned with regulations like the GDPR and the EU AI Act

- Accountability: Defined roles, human-in-the-loop oversight, and governance boards

- Reliability: Rigorous testing, monitoring, and error handling

- User Focus: Human-centered design prioritizing usability and accessibility

Fast-Track Trust for SMBs and Startups

If you're running a startup or SMB, resource constraints might feel like a tough barrier, but actionable steps can accelerate trustworthy AI deployment without blowing your budget:

- Leverage AI-first, low-code platforms to speed up development while embedding compliance checks

- Use automated tools for bias detection, privacy auditing, and explainability reporting—many integrate smoothly with popular frameworks

- Focus on rapid iterations with continuous learning loops, incorporating user feedback early and often

Picture this: Instead of manual audits, your system flags bias patterns as they emerge, giving you real-time fixes without slowing down your release cycle.

Tools and Frameworks That Work Without Overhead

Plenty of open-source and commercial tools exist to help scale trust without heavy lifting:

- Explainability libraries like LIME and SHAP for transparent decision insights

- Bias and fairness toolkits that run automated impact analyses

- Security monitoring platforms ensuring data stays locked down

These tools ease compliance with emerging rules and empower teams to “own it” from start to finish.

Cultivating a Trust-First Culture

Tech alone won’t keep trust thriving. It requires a mindset shift:

- Promote ownership and accountability across your team. Tech companies, in particular, have a responsibility to foster a trust-first culture by ensuring ethical practices and transparency in AI development.

- Stay agile—be ready to adapt as AI models evolve and markets shift

- Foster continuous education on ethical AI trends and regulatory updates

This culture fuels speed without sacrificing responsibility, turning trust from a checkbox into a competitive advantage.

Balancing Speed with Responsibility in Dynamic Markets

Innovating fast is great, but rushing can erode trust if you cut corners.

- Prioritize validation steps that catch issues early without stalling progress

- Use human-in-the-loop controls for high-impact decisions to combine speed with oversight

As one savvy product lead put it: “Trust isn’t a hurdle; it’s the foundation that lets us innovate boldly without falling apart.”

Building trust in AI for 2025 means combining the right tech, mindset, and process to power smarter, fairer, and faster solutions for your users.

Keep trust top of mind, and your AI roadmap won’t just survive—it’ll thrive.

Preparing for Future Trust Challenges: Emerging Trends and Technologies

AI is evolving faster than ever, shifting the landscape of trust-building in big ways. As data volumes rapidly expand, organizations face new challenges related to data management, security, and the ethical use of AI systems.

Emerging AI Advancements Reshaping Trust

Keep an eye on technologies like:

- Generative AI, which crafts content autonomously, raising new explainability challenges

- Federated learning, enabling AI to train on decentralized data, boosting privacy but complicating oversight

- Self-regulating AI systems that monitor and adjust their own performance in real time

These advances demand new strategies to keep trust intact as complexity grows.

Regulatory Shifts and Explainability Breakthroughs

Legislation like the EU AI Act is tightening rules, pushing companies to prioritize transparency and fairness.

Watch these trends:

- Increasing focus on real-time auditing to catch issues instantly

- Enhanced explainability tools that translate AI decisions into user-friendly terms

- Moves toward AI models that self-document decision paths for easier governance

Adapting quickly to these will keep your AI projects compliant and trustworthy.

Flexibility and Ethical Vigilance Are Non-Negotiable

As AI tech and societal norms evolve, your trust practices must stay flexible.

Pro tips:

- Set up continuous monitoring for ethical and societal impact, especially as AI’s role grows

- Build feedback loops with users and stakeholders to catch blind spots early

- Invest in ongoing professional development to stay sharp on emerging risks and solutions

Remember, the only constant in AI trust is change.

Picture a dashboard that alerts you when your AI’s fairness metric dips, enabling near-instant fixes before harm spreads. That’s the future of proactive trust management.
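
The check behind such a fairness alert can be quite small. The sketch below assumes one common metric, the demographic parity gap (the difference in positive-prediction rates between groups), and an illustrative alert threshold of 0.2. Both the metric choice and the threshold are assumptions, and the function names are hypothetical.

```python
from collections import defaultdict


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values())


def check_fairness(predictions, groups, max_gap=0.2):
    """Return the gap and whether it exceeds the (assumed) alert threshold."""
    gap = demographic_parity_gap(predictions, groups)
    return {"gap": gap, "alert": gap > max_gap}


# Toy data: group "a" gets 3 of 4 positive predictions, group "b" gets 1 of 4.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
result = check_fairness(preds, groups)  # gap is 0.5, so alert is True
```

Run on a schedule against recent predictions, a check like this could feed the dashboard alert. Which fairness metric suits your product is a judgment call that depends on context.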

Trust isn’t built once; it’s a living process adapting alongside tech and policy.

Embracing emerging AI trends with agility, transparency, and ethics will set you apart.

You’ll not only comply with regulations but also garner lasting user confidence—an invaluable asset in crowded markets.

The key is to see trust as a dynamic journey, not a destination.

Keep learning, adjusting, and involving your community to safeguard your AI’s credibility tomorrow and beyond.

Conclusion

Building trust in AI isn’t a luxury; it’s the foundation that turns innovative tech into reliable tools your users depend on. As AI applications become part of daily life, from healthcare to personal assistants, transparency and ethical design matter more than ever. When you embed transparency, ethics, privacy, and accountability deeply into your AI systems, you unlock real adoption, reduce risks, and create lasting competitive advantage.

Remember, trust isn’t a checkbox; it’s a continuous practice that shapes every interaction and decision your AI makes.

Here are key actions to drive trust forward now:

- Implement clear, user-friendly explanations about how your AI works and makes decisions

- Run regular bias and privacy audits to catch issues early and protect your brand

- Assign explicit accountability roles so every AI outcome has a human owner

- Design with empathy and inclusivity to create intuitive, accessible AI experiences

- Set up feedback loops that keep you connected to user concerns and evolving needs

Start by picking one pillar—whether transparency, ethics, or governance—and build momentum from there. Trust compounds over time, especially when you act proactively and openly.

You’re not just launching AI products—you’re building relationships with your users and stakeholders.

Boldly own your AI’s trustworthiness and make it your startup or SMB’s secret weapon.

Because in 2025 and beyond, the companies that win aren’t just the fastest or smartest—they’re the ones their customers truly believe in.

“Trust isn’t given. It’s earned every day, one transparent decision at a time.”

User Education: Empowering People to Understand and Trust AI

User education is a cornerstone of building trust in AI systems, especially as artificial intelligence becomes woven into the fabric of daily life. As AI extends its reach into everything from customer service to fraud detection, helping people understand how these systems work is essential for widespread acceptance and responsible adoption.

Demystifying AI for Non-Experts

For many, AI still feels like a black box. Misconceptions and uncertainty can undermine trust in AI and slow down adoption. That’s why demystifying AI for non-experts is a critical first step. Effective user education breaks down how AI systems operate, what they can (and can’t) do, and how their decisions are made.

Practical Training for Everyday Users

Understanding AI in theory is important, but hands-on experience is what truly builds user confidence. Practical training empowers users to interact with AI tools effectively, whether they’re using a chatbot, reviewing AI-generated recommendations, or making decisions based on AI outputs.

Building Confidence Through Transparency and Learning

Trust in AI grows when users see how decisions are made and have opportunities to learn from their interactions. Transparent AI systems that explain their reasoning, provide performance metrics, and offer feedback channels help users understand and trust AI’s recommendations.
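
As a rough illustration of what explaining the reasoning can look like, the sketch below turns the factors behind a simple weighted score into plain-language statements. It assumes a linear scoring model with made-up weights and feature names. Dedicated tools such as LIME and SHAP handle more complex models, and nothing here reflects their APIs.

```python
def explain(weights, features, top_n=2):
    """List the top factors behind a linear score in plain language.

    Each factor's contribution is weight * value (a linear-model assumption).
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    # Rank factors by how strongly they moved the score, in either direction.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    lines = []
    for name, contribution in ranked[:top_n]:
        direction = "raised" if contribution > 0 else "lowered"
        lines.append(f"{name} {direction} the score by {abs(contribution):.2f}")
    return lines


# Hypothetical weights and one user's feature values.
weights = {"on_time_payments": 0.5, "recent_missed_payments": -1.5, "account_age_years": 0.25}
features = {"on_time_payments": 4, "recent_missed_payments": 1, "account_age_years": 2}
explanation = explain(weights, features)
# explanation[0] is "on_time_payments raised the score by 2.00"
```

In a real product, the raw feature names would be replaced with friendlier wording before being shown to users.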