Secure Transactions: How Data Tokenization Protects Your App

10 Powerful Ways to Secure Transactions: How Data Tokenization Protects Your App

Meta Description: Discover 10 powerful ways to secure transactions and see how data tokenization protects your app by safeguarding sensitive data, ensuring compliance, and building user trust.

Outline:

Introduction – Introduces the importance of securing transactions and previews the role of data tokenization in protecting your app.

Secure Transactions: How Data Tokenization Protects Your App – Discusses why protecting transactions is crucial, highlighting trust and the high stakes of data breaches.

What is Data Tokenization? – Defines data tokenization in simple terms with examples (like the casino chip analogy) to illustrate how it works.

How Data Tokenization Works – Explains the tokenization process step by step (token creation, token vault, detokenization) and how it secures transactions.

Tokenization vs Encryption – Compares tokenization with encryption and highlights key differences in security and implementation.

1. Replacing Sensitive Data with Tokens – Describes how tokens substitute real data to eliminate exposure, ensuring stolen tokens are useless to attackers.

2. Minimizing Damage from Data Breaches – Explains how tokenization limits the impact of breaches, so compromised data can’t be misused by hackers.

3. Simplifying PCI DSS Compliance – Shows how using tokenization helps meet industry security standards (like PCI DSS) with less effort and risk.

4. Protecting Data in Transit – Covers how tokenization keeps sensitive information safe during transmission, so intercepted traffic doesn’t expose real data.

5. Guarding Against Insider Threats – Details how tokenization prevents even internal staff or admins from accessing actual sensitive data, reducing insider risks.

6. Preserving Data Integrity and Format – Highlights that tokenization maintains data format/types, so systems work seamlessly with tokens without breaking functionality.

7. Reducing Security Costs and Complexity – Discusses how limiting sensitive data storage cuts down on security infrastructure costs and simplifies compliance efforts.

8. Building Customer Trust and Confidence – Describes how tokenization enhances user trust and loyalty by protecting their data and providing a safer user experience.

9. Embracing Modern Payment Systems – Explains how tokenization integrates with digital wallets and emerging payment technologies to keep your app up-to-date and secure.

10. Future-Proofing Your App’s Security – Looks at how tokenization prepares your app for evolving threats and growing security expectations in the future.

Frequently Asked Questions (FAQs) – Answers common questions about data tokenization, encryption differences, implementation, and its effects on your app.

Conclusion – Summarizes key insights and reinforces the importance of data tokenization in ensuring secure transactions, ending on an optimistic note.

Introduction

In today’s digital world, secure transactions are the lifeblood of any successful app. Whenever users make a purchase or share details, they trust your application with credit card numbers, personal information, and other sensitive data that could cause serious harm if compromised. That trust can vanish in a heartbeat if their data falls into the wrong hands. Data breaches cost companies millions of dollars and can severely damage their reputation. The good news? With the right security strategies, you can prevent disasters before they happen, and one of the most powerful strategies is data tokenization.

Imagine if, even in the event of a cyberattack, your users’ sensitive information remained safe and unusable to thieves. That’s exactly what tokenization offers. It replaces real, sensitive data with harmless stand-ins, so even if hackers break in, they come away empty-handed. For app developers and business owners, the burning questions are how to secure transactions and how exactly data tokenization protects an app from threats. This guide answers both. We’ll explore 10 powerful ways tokenization secures transactions, from limiting what hackers can steal to boosting customer confidence, all in an informative, easy-to-understand way.

Our journey will cover what tokenization is, how it works, and why it’s essential for modern applications. You’ll learn how tokenization not only thwarts cybercriminals but also simplifies compliance and saves costs: by reducing the amount of sensitive data you handle, it helps you meet data protection laws and regulatory requirements such as PCI DSS. By the end, you’ll see how adopting tokenization is like locking your data in a vault and handing out claim tickets that are worthless to anyone else. So, if you’re ready to protect your app and its users, read on to discover the 10 powerful ways data tokenization keeps transactions safe and your business one step ahead of threats.

Secure Transactions: How Data Tokenization Protects Your App

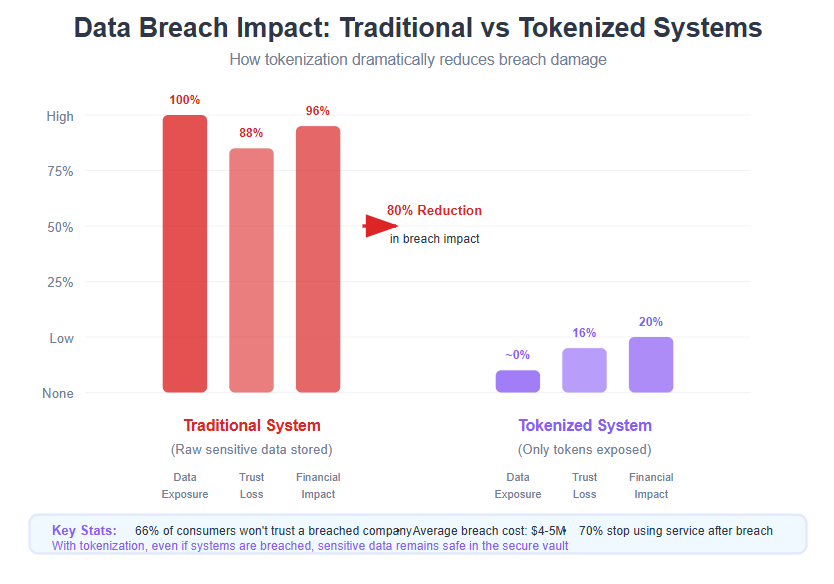

Securing transactions isn’t just an IT concern – it’s a fundamental part of maintaining user trust and safeguarding your business’s reputation. With apps handling payments, personal details, and other confidential information daily, the stakes are incredibly high. A single data breach can shatter customer confidence. In fact, 66% of US consumers say they would not trust a company that falls victim to a data breach. Even more alarming, up to 70% of people might stop using a service or shopping with a brand after a serious security incident. These numbers highlight that trust is hard to earn and easy to lose when it comes to user data. Storing real sensitive information also introduces significant security risks, as attackers may exploit vulnerabilities to access or misuse this data.

This is where data tokenization comes into play as a game-changer for secure transactions. Tokenization directly answers the question “how do we protect our app’s sensitive data during transactions?” by changing what data we store and expose. Instead of keeping actual credit card numbers or personal IDs in your systems, tokenization lets you store substitute values, called tokens, that stand in for the real thing. If cybercriminals somehow break past your defenses, they’ll find only random-looking tokens that have no value or meaning on their own. The real sensitive information remains locked away in a separate, highly secure environment, out of the intruder’s reach.

By implementing tokenization, companies large and small are effectively reducing their risk footprint. Major industries like finance and healthcare already rely on tokenization because they face strict data protection regulations. If your app handles payment cards, for example, tokenization means you’re not actually storing card numbers – you’re storing tokens. This significantly shrinks the target on your back by minimizing the amount of sensitive data stored in your systems. Even if attackers intercept transaction data or access your databases, all they get are token values that are useless without the original data they represent. In summary, secure transactions are achieved not just by locking the doors, but by removing the prize that thieves are after. Data tokenization does exactly that, protecting your app by ensuring that sensitive user data never roams freely through your system where it could be compromised.

Beyond security, choosing tokenization shows your users that you value their privacy and safety. It’s a proactive step: rather than waiting for a breach to happen, you make sure that any breach would expose as little as possible. In the rest of this article, we’ll break down exactly what tokenization is, how it works, and then detail the 10 powerful ways it secures transactions and protects your app. Let’s start by understanding the basics of data tokenization.

What is Data Tokenization?

Data tokenization is a security method for replacing sensitive data with non-sensitive tokens. In simple terms, you swap out something valuable (like a credit card number or a Social Security number) for a random placeholder, often in the same format. The real data is stored safely in a separate location (often called a token vault), and the placeholder token is what gets used in your application’s database or transactions. Because the token is just a stand-in, it has no intrinsic meaning or value to anyone who might steal it. It’s like taking a priceless painting out of a museum and putting a replica in its place: the replica might look similar, but it’s worthless to thieves.

A classic example to illustrate tokenization is the casino chip analogy. Think about when you play games at a casino: you trade your cash for casino chips. Those chips represent real money while you’re playing on the casino floor, but if someone snatches a chip and leaves the casino, it’s useless to them because it’s not actual currency. The real money stays secure with the casino cashier. Similarly, tokenization replaces actual sensitive data with tokens (like the chips) that stand in for the data during processing. Outside the secure system (the “casino”), the tokens can’t buy anything – they can’t be turned back into the real data without the proper process. In this way, tokenization removes sensitive data from your app’s environment, replacing it with tokens to enhance security.

Tokens come in many forms. They could be a random string of numbers and letters shaped like the original data (for instance, a token such as 4242-3175-8A9F-4433 might stand in for a card or ID number), or they might preserve certain aspects of the data (such as the last four digits of a card number for customer reference). Crucially, a token has no exploitable meaning on its own. It’s essentially a reference that only the tokenization system can translate back to the original information.

To put it formally, “tokenization is the process of substituting a sensitive data element with a non-sensitive equivalent (a token) that has no intrinsic or exploitable meaning or value”. This token acts as a reference or pointer to the actual data, which is kept secure elsewhere. If someone somehow got hold of the token, they could not reverse-engineer the original data from it, because in most tokenization schemes there’s no mathematical relationship to exploit. Only the tokenization system (which is heavily secured) knows how to swap the token back for the real data, a process known as detokenization.
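To make the idea of a token as a pure reference concrete, here is a minimal sketch of vault-style tokenization in Python. The `TokenVault` class and the `tok_` prefix are hypothetical, and an in-memory dictionary stands in for what would really be a hardened, separately secured vault service.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault, for illustration only.

    A real vault is a hardened, access-controlled service; a dict stands in here.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The token comes from a cryptographically secure random source,
        # so there is no mathematical relationship between it and `value`.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # The only way back is a lookup in the vault (KeyError if unknown).
        return self._token_to_value[token]


vault = TokenVault()
ssn_token = vault.tokenize("123-45-6789")
print(ssn_token)                    # random each run: "tok_" plus 32 hex characters
print(vault.detokenize(ssn_token))  # 123-45-6789
```

Tokenizing the same value twice yields two unrelated tokens here, which underlines the point: nothing about the token itself leads back to the data.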

Tokenization is widely used in the payment industry and beyond. It’s particularly popular for processing credit card transactions because it allows companies to comply with industry standards (like PCI DSS) without storing sensitive customer card numbers. But it’s not limited to payments – any kind of personally identifiable information (PII) such as health records, bank account numbers, or driver’s license numbers can be tokenized to enhance security. Tokenization is especially effective for protecting structured data fields such as credit card details and Social Security numbers, which are commonly targeted in data breaches. By tokenizing data, an app can still perform its normal operations (like charging a payment or verifying a user’s identity) because the tokens can be passed around and used in place of real data for most processes. Meanwhile, the actual sensitive details remain locked up tight.

In summary, data tokenization means your app never has to store the actual secret data. It hands that duty off to a secure service, which returns a harmless token. This dramatically reduces the risk to your app. Next, let’s take a closer look at how this process works in practice, especially during a transaction.

How Data Tokenization Works

Understanding how tokenization works will help clarify why it’s so effective. Tokenization systems are comprehensive solutions that manage the creation, storage, and retrieval of tokens, ensuring sensitive data is protected throughout its lifecycle. The process usually unfolds in a few straightforward steps whenever sensitive data needs to be handled:

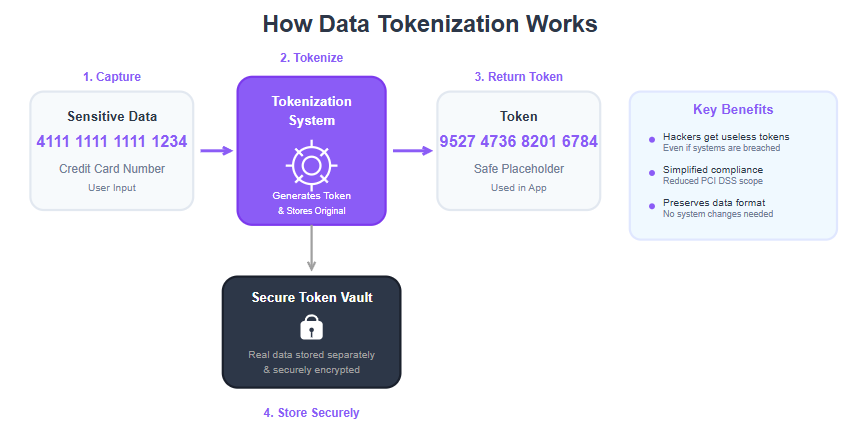

Capture Sensitive Data: First, sensitive data is identified and captured. For example, a user enters their credit card number into your app during a purchase, or perhaps they provide personal details for verification. At this moment, instead of storing that data in your app’s database, you prepare to tokenize it.

Send to Tokenization System: The sensitive data is sent to a tokenization service or system. This could be an internal component of your infrastructure or a trusted third-party service (like a payment gateway) that specializes in tokenizing data. Because the raw data is in motion at this step, it should travel over an encrypted connection such as TLS. From here on, only tokens, not the actual sensitive data, need to travel across the rest of your networks, which reduces the risk of exposure during transmission.

Token Creation: The tokenization system receives the data and generates a unique token to replace it. This token is typically a random string of characters or numbers. Importantly, the token can be designed to have a similar format to the original data. For instance, if the original was a 16-digit credit card number, the token might also be 16 digits long and perhaps keep the last four digits the same for user convenience. This format-preserving aspect means using the token won’t disrupt other systems.

Store Data Securely: The real sensitive data (like the actual credit card number) is then stored in a token vault: a hardened, isolated database, kept apart from the systems that use the tokens, that only the tokenization system can access. The vault holds the mapping between each token and the original data it represents. Access to this vault is strictly controlled; only authorized processes (like when you truly need to charge the card via your payment processor) can retrieve the original data using the token.

Receive and Use the Token: The tokenization system returns the newly created token back to your app. From this point on, your app and its databases use the token in place of the sensitive information. For example, your transactions, records, or analytics will reference the token, not the real credit card or ID number.

Detokenization (when needed): In the rare cases where the original data is required (for example, processing a payment or displaying masked info to the user), an authorized request can be sent to the tokenization system to detokenize, or swap the token back for the real data. This happens behind the scenes, often within the secure environment of a payment processor or a secure backend, so the actual data is never exposed to your application’s front end or databases unnecessarily.
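The “authorized request” in the detokenization step, and the strictly controlled vault access described in the storage step, can be illustrated with a simple allow-list. This is a minimal sketch: the caller names such as `payment-service` are hypothetical, and a real vault would authenticate callers cryptographically instead of trusting a string they pass in.

```python
import secrets


class TokenVault:
    """Hypothetical vault that only lets allow-listed callers detokenize."""

    def __init__(self, allowed_callers: set[str]) -> None:
        self._store: dict[str, str] = {}
        self._allowed = allowed_callers

    def tokenize(self, value: str) -> str:
        token = secrets.token_urlsafe(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str, caller: str) -> str:
        # Only explicitly authorized callers may see the original value.
        # (Assumption: caller identity is already verified upstream.)
        if caller not in self._allowed:
            raise PermissionError(f"{caller!r} may not detokenize")
        return self._store[token]


vault = TokenVault(allowed_callers={"payment-service"})
t = vault.tokenize("4111111111111234")
print(vault.detokenize(t, caller="payment-service"))  # 4111111111111234
try:
    vault.detokenize(t, caller="analytics")
except PermissionError as err:
    print(err)  # 'analytics' may not detokenize
```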

Mobile payment tokenization works the same way: a user’s credit card info is replaced by a token via a secure service, and only the token (not the actual card number) is used in transactions. Even if intercepted, the token alone is worthless without the secure vault that maps it back to the real card data.

To make this concrete, imagine a customer making a purchase in your mobile app. They input their credit card number (say, 4111 1111 1111 1234). The app sends this number to a tokenization service. The service generates a token, like 9527 4736 8201 6784, and stores 4111 1111 1111 1234 safely in the vault. The token 9527 4736 8201 6784 is returned to your app and that’s what gets saved in your database and used for the transaction. The next time you need to charge that customer or identify their card, you use the token. If a hacker were to somehow snoop on the transaction or breach your database later, all they would find is the token, which is just a useless placeholder without access to the tokenization system’s vault. As one security guide puts it, even if a hacker breaches your system, they’ll only get their hands on meaningless tokens, not the sensitive data itself.
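The purchase walkthrough above can be simulated end to end with Python’s built-in `sqlite3` module. In this sketch, `TokenVault` is a hypothetical stand-in for the tokenization service, and the `orders` table plays the role of your app’s database, which only ever receives the token.

```python
import secrets
import sqlite3


class TokenVault:
    """Hypothetical stand-in for a tokenization service and its vault."""

    def __init__(self) -> None:
        self._vault: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        # Random 16-digit token; retry if it equals the card or an existing token.
        while True:
            token = "".join(str(secrets.randbelow(10)) for _ in range(16))
            if token != pan and token not in self._vault:
                self._vault[token] = pan
                return token


service = TokenVault()

# The app's own database: it only ever receives the token.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, card_token TEXT)")

card_number = "4111111111111234"        # entered by the customer
token = service.tokenize(card_number)   # the raw number goes to the service only
db.execute("INSERT INTO orders (card_token) VALUES (?)", (token,))

# What an attacker who dumps the app database would see:
rows = db.execute("SELECT card_token FROM orders").fetchall()
print(rows)  # one row holding a random 16-digit token, never the card number
```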

It’s worth noting that tokenization often works hand-in-hand with encryption. For example, data might be encrypted during transit to the tokenization service, and the token vault might encrypt the stored data as an extra layer of protection. But the big difference is that your app’s environment never stores the sensitive data in a usable form – it relies on tokens. This dramatically minimizes the paths through which data can leak out.

Now that we have a grasp of how tokenization functions mechanically, let’s compare it to a more familiar data protection method, encryption, to see how the two differ and why tokenization is uniquely powerful for securing transactions.

Tokenization vs Encryption

Tokenization and encryption are both techniques used to protect sensitive information, but they work in fundamentally different ways. Understanding these differences will clarify why tokenization is so useful for securing app transactions (and also when you might still use encryption alongside it).

Encryption transforms sensitive data using a cipher (algorithm) and an encryption key. When you encrypt, say, a password or a credit card number, you apply a mathematical function that jumbles the data into an unreadable format. For example, the credit card 4111 1111 1111 1234 might turn into something like XJ9&^%1… (a random-looking string). To get the original data back, you need the correct decryption key. Encryption is a reversible process: with the key, you can decrypt and retrieve the original data; without the key, the encrypted data should be computationally infeasible to decode. The strength of encryption lies in the complexity of the cipher and the secrecy of the key. However, if that key is stolen or cracked, the encrypted data can be unlocked.

Tokenization, on the other hand, does not apply a reversible mathematical transformation to the data. Instead, it swaps the data for a token and stores the original data separately. There is no algorithm to “decrypt” a token; the only way to get back the original data is to look it up in the secure token vault using the token as the reference. If someone only has the token, they cannot derive the original information from it through any mathematical means. This is a key distinction: breaking strong encryption might require finding a key or exploiting a flaw in the algorithm, whereas breaking tokenization would require breaching the secure vault or the tokenization system itself, since the token by itself reveals nothing.

To put it simply, encryption is like scrambling a message with a code, whereas tokenization is like locking the message away and leaving only a code name in its place. Both methods keep data from prying eyes, but tokenization has some special advantages for transaction security:

- No Meaningful Data to Steal: Encrypted data is protected, but it’s still the real data in scrambled form. If a malicious insider or a hacker gets hold of encrypted data, they might attempt to brute-force the key or find vulnerabilities. With tokens, there’s nothing to brute-force, because the token isn’t derived from the data in a way that can be mathematically reversed – it’s a random substitute. As long as the token vault is secure, the token is useless on its own (imagine trying to guess someone’s full credit card number just from a random token value – it’s infeasible).

- Format Preservation: Encrypted data usually looks very different from the original (often a different length and character set), which can make it hard to use in systems that expect a certain format. Tokenization maintains the format and length of data if needed. For instance, a token can be designed to look like a normal 16-digit credit card or a 9-digit Social Security number. This makes tokenization easier to integrate into existing databases and applications – you don’t need to redesign your database schema or application logic to accommodate weird encrypted strings. The token “plugs in” wherever the original data would go, preserving data integrity in the app’s workflows. Tokenization is particularly beneficial for integrating with legacy systems that require data in specific formats, allowing organizations to enhance security without disrupting established operational workflows.

- Operational Use: Since tokens can be deterministic (always the same for a given input, if desired), you can perform certain operations on tokenized data without detokenizing it. For example, you could search, join, or group records by a tokenized customer ID, or keep track of a tokenized credit card for recurring billing. Encryption, especially with random initialization vectors, can produce different outputs for the same input at different times, making such consistent operations tricky without decrypting.

- Reduced Key Management: Encryption requires careful management of encryption keys: you must store keys securely, rotate them, and ensure they aren’t exposed. With tokenization, your main concern is securing the tokenization system and vault. You’re effectively consolidating the “secrecy” from many distributed keys into one centralized vault system. This can reduce complexity because only the token service needs to be heavily guarded and validated, and it never shares a secret key with other parts of your app; it simply issues tokens or returns original data when asked, under strict controls.

- Use with Other Security Layers: Tokenization often complements encryption. For example, the communication between your app and the tokenization service can be encrypted for safety. Once tokenized, the data at rest in your app’s environment is far less sensitive, so its protection no longer hinges on encryption alone. This layered approach means that even if one layer were to fail (say, encryption on a connection that carries only tokens), the token layer still keeps the real data out of reach.
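The deterministic tokens mentioned under “Operational Use” can be sketched by having the vault keep a reverse index, so the same input always maps to the same token even though that token is still random rather than derived from the input. `DeterministicVault` and the customer IDs below are hypothetical.

```python
import secrets
from collections import Counter


class DeterministicVault:
    """Hypothetical vault that reuses one random token per distinct value."""

    def __init__(self) -> None:
        self._by_token: dict[str, str] = {}
        self._by_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so equal inputs always get equal tokens.
        if value in self._by_value:
            return self._by_value[value]
        # Otherwise mint a fresh random token, regenerating on the rare collision.
        token = "tok_" + secrets.token_hex(8)
        while token in self._by_token:
            token = "tok_" + secrets.token_hex(8)
        self._by_token[token] = value
        self._by_value[value] = token
        return token


vault = DeterministicVault()
customer_ids = ["C-1001", "C-2002", "C-1001"]  # hypothetical IDs
tokenized = [vault.tokenize(cid) for cid in customer_ids]

# Count transactions per customer using only the tokens.
counts = Counter(tokenized)
print(sorted(counts.values()))  # [1, 2]
```

The trade-off of this design is that identical inputs produce identical tokens, so anyone who sees the tokenized data can tell which records share a value, even without knowing what that value is.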

It’s important to note that tokenization is not a silver-bullet replacement for all encryption. Encryption remains essential for protecting data in transit (like SSL/TLS on network connections) and for data that must stay usable by its intended recipient, such as end-to-end encrypted messages. For stored and transactional data, however, tokenization adds an extra level of security by ensuring that your app’s databases and logs simply don’t hold sensitive information in the clear. And because tokens can keep the type and length of the original data, you get that security without breaking existing functionality or distributing decryption keys to every component.

In practice, many systems use both: they tokenize sensitive data and also encrypt the contents of the token vault or the tokens themselves when stored, for maximum protection. The key takeaway is that tokenization greatly limits who and what can access actual sensitive data. With those fundamentals in mind, let’s dive into the core of this article: the 10 powerful ways in which data tokenization secures transactions and protects your application.

1. Replacing Sensitive Data with Tokens

The first and most fundamental way tokenization secures your app is by replacing sensitive data with tokens everywhere you can. By doing so, you eliminate the exposure of actual confidential information in your application’s environment. If your database, logs, or analytics only contain tokens instead of real credit card numbers, personal IDs, or account details, then a data breach yields nothing of value to attackers. As one source succinctly put it, even if tokens are stolen, they would be useless without the original data they represent, which dramatically reduces the damage a breach can do. Tokenization also means your systems store less sensitive data, which simplifies compliance requirements and streamlines audits, especially for standards like PCI DSS.

Think of this as swapping out crown jewels for fake replicas. The tokens act as realistic stand-ins: they might look like real data to your application and preserve necessary information (like format or partial digits), but they’re essentially dummy values. For instance, a customer’s tokenized credit card number might keep the real last four digits for recognition (so it can be shown as **** **** **** 1234), while all the other digits are randomly generated and don’t correspond to the actual card. Without access to the secure token vault, no one can turn that token back into the real card number.
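A token that is random except for the real last four digits can be sketched with Python’s `secrets` module. Both function names are hypothetical, the sketch assumes a 16-digit card number, and a real tokenization system would also guarantee that each token is unique across its vault. Note that the masked display string comes straight from the token, with no call to the vault.

```python
import secrets


def format_preserving_token(pan: str) -> str:
    """Random 16-digit token that keeps the real last four digits of `pan`.

    Sketch only: assumes a 16-digit card number (spaces allowed) and does not
    check uniqueness against tokens already stored in a vault.
    """
    digits = pan.replace(" ", "")
    if len(digits) != 16 or not digits.isdigit():
        raise ValueError("expected a 16-digit card number")
    while True:
        # 12 random leading digits followed by the real last four.
        token = "".join(str(secrets.randbelow(10)) for _ in range(12)) + digits[-4:]
        if token != digits:  # never hand back the real number by chance
            return token


def mask_for_display(token: str) -> str:
    """Show only the preserved last four digits; no vault lookup needed."""
    return "**** **** **** " + token.replace(" ", "")[-4:]


token = format_preserving_token("4111 1111 1111 1234")
print(len(token), mask_for_display(token))  # 16 **** **** **** 1234
```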

From a hacker’s perspective, breaking into a system that uses full tokenization is incredibly frustrating: it’s like cracking open a safe only to find play money inside. The tokens have no exploitable meaning or value on their own, so the attacker gains nothing useful. A concrete example: if your e-commerce app tokenizes customer addresses, an attacker might get a database of address tokens that look like TOK_8F3A91C2 instead of actual street names, with no fragment of the original address inside. They can’t reverse those tokens to get the real addresses without your tokenization system’s help.

By replacing live sensitive data with tokens, you’re effectively minimizing the exposure of sensitive data across all applications, people, and processes in your environment. Everything operates using tokens instead of actual data, which means unauthorized access – whether by a hacker or even an overly curious employee – won’t reveal private information. Only a tightly controlled tokenization service can map tokens back to real data, and it will only do so under the right circumstances.

It’s worth emphasizing how this token replacement builds a strong foundation for all other security measures. If you later implement monitoring, intrusion detection, or backups, those systems will also only see tokens. This limits the damage from human error as well – imagine an engineer accidentally logging some data for debugging; if you use tokenization, that log will contain tokens, not raw secrets.

In summary, by broadly replacing sensitive details with tokens, you ensure that your app is essentially working with decoy data from the perspective of any would-be thief. This strategy nullifies many threats from the start. It’s like removing the target from your back – there’s nothing of value for attackers to steal in your app’s transactions or storage. Next, we’ll see how this fundamental change plays out when a breach actually occurs, and how it dramatically limits the damage.

2. Minimizing Damage from Data Breaches

No security measure can guarantee a 0% chance of breach, but tokenization ensures that even if a breach happens, the damage is minimized. This is one of the most powerful ways tokenization protects your app and your users: it turns a potentially catastrophic leak of sensitive data into a far more contained incident, because the sensitive data simply isn’t stored in the systems an attacker reaches.

Consider the unfortunate scenario that a cybercriminal manages to penetrate your app’s defenses, maybe through a new vulnerability or a stolen credential. In a traditional system that stores actual credit card numbers or personal data, the attacker would hit the jackpot: they could immediately start extracting that information and use it for fraud or sell it on the dark web. This is the nightmare headline we hear about in major data breaches. In a tokenized system, however, the intruder’s victory would be hollow. They’d spend all that effort to break in, only to retrieve strings of tokens that can’t be used to commit fraud. The stolen data would be tokens, not actual sensitive data, and therefore useless to them.

Consider the impact: if a database of 100,000 customer records is breached from a tokenized app, and all the key fields (credit cards, SSNs, etc.) are tokens, the attacker essentially gets nothing of value. They can’t charge those tokenized credit card numbers or impersonate users with tokenized IDs. For the company, this means avoiding the devastating outcomes of a typical breach. Customers’ real financial or personal information remains safe, still locked away in the token vault, which the attacker did not access. You might have to rotate tokens or improve security, but you’re not in the position of telling users “your card number and personal info have been stolen,” a phrase that often leads to massive losses of trust.
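Rotating tokens after a breach can be sketched as retiring each leaked token and binding the same underlying value to a fresh one. `RotatingVault` and its `rotate` method are hypothetical names for illustration; the point is that a stolen token stops resolving while the real data never leaves the vault.

```python
import secrets


class RotatingVault:
    """Hypothetical vault that can retire a leaked token and issue a new one."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = secrets.token_hex(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._store[token]

    def is_active(self, token: str) -> bool:
        return token in self._store

    def rotate(self, old_token: str) -> str:
        # Drop the leaked token, then bind the same value to a fresh token.
        value = self._store.pop(old_token)
        return self.tokenize(value)


vault = RotatingVault()
leaked = vault.tokenize("4111111111111234")
fresh = vault.rotate(leaked)
print(vault.is_active(leaked))  # False: the stolen token no longer resolves
print(vault.detokenize(fresh))  # 4111111111111234
```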

This benefit of tokenization is widely recognized as a form of damage control: it keeps the fallout from a breach to a minimum. It’s like having a ship with watertight compartments: even if one section is breached, the whole ship doesn’t sink. The compromised system held only tokens, so the actual sensitive data remains uncompromised in another “compartment.”

Moreover, limiting breach damage protects your brand’s reputation and customer relationships. If you can confidently say, “Even though attackers accessed some of our systems, no actual customer data was exposed,” you retain customer trust far more than if you had to admit that credit card numbers or identities were stolen. Tokenization thus guards not just data, but also your app’s standing and reliability in the eyes of users. As Stripe’s security guide notes, by ensuring any compromised data is non-sensitive and cannot be used for fraudulent transactions, you mitigate the negative impact on your business and preserve your brand’s reputation.

For example, there have been cases where companies that tokenized their payment info could report a breach of token data but reassure customers that their real card numbers were not leaked. Compare that to breaches where actual card numbers get out – those often result in costly card replacements, fraud investigations, fines, and loss of customers. Tokenization is essentially an insurance policy: you hope to never be breached, but if it happens, the “payout” for criminals is negligible.

It’s also worth noting that breaches aren’t always external. Tokenization equally minimizes damage from internal mistakes. Suppose an employee’s account is compromised or someone accidentally pushes a database backup to a public server – if that data is tokenized, the breach is far less severe than it could have been.

In summary, tokenization protects your app by drastically reducing the fallout of any breach. It’s the difference between a minor scare and a full-blown disaster. This ties directly into the next benefit: the world of regulatory compliance, where preventing breaches and limiting their scope isn’t just a good idea, it’s often a requirement.

3. Simplifying PCI DSS Compliance

If your app handles payment card information (credit or debit cards), you’re likely familiar with the Payment Card Industry Data Security Standard (PCI DSS). PCI DSS is a set of security standards that anyone storing, processing, or transmitting cardholder data must follow. Compliance can be quite complex and stringent – and failing to comply can result in heavy fines or even losing the ability to process payments. One of the powerful advantages of tokenization is that it simplifies PCI DSS compliance and similar regulatory obligations.

Why? Because under PCI DSS (and many other data protection regulations), if you don’t store actual sensitive data, many of the requirements no longer fully apply to you. Tokenization lets you do exactly that for card data. By replacing card numbers (known as PANs, or Primary Account Numbers) with tokens and keeping the PANs in a secure token vault, you shrink what’s called the “PCI scope.” Only the system that does the tokenization (and perhaps a small part of your network that communicates with it) is considered to handle sensitive card data. The rest of your app’s environment can be considered out of scope, or in a much lower risk category for compliance.

Concretely, PCI DSS has numerous technical requirements: network segmentation, encrypted storage, strict access control, quarterly scans, and so on. Tokenization reduces the scope of PCI DSS compliance because only the parts of the system that handle actual card data (not the parts that see only tokens) need to meet those rigorous standards. If your main databases and application servers only see tokens, they are not holding cardholder data in the PCI sense. This means less burden on your app’s infrastructure: you can focus your highest-level security controls on the tokenization service and the connections to it, rather than on every single server in your stack.

For example, imagine an online store app. Without tokenization, every server that touches payments, the database storing orders, the backups, and so on all fall under PCI scope. With tokenization, perhaps only a small microservice and the payment provider’s environment handle actual card data; everything else handles only tokens and can therefore operate with more flexibility. As a result, your compliance audits become easier, faster, and likely less costly. One security blog notes that by minimizing the storage and processing of sensitive payment data, tokenization helps businesses adhere to industry standards like PCI DSS more easily. It’s a proactive step that shows regulators and auditors that you’ve architected security into your system.
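To make the scope idea concrete, here is a minimal Python sketch of the online store above. The `TokenVault` class and `place_order` function are hypothetical names, and an in-memory dictionary stands in for what would really be an isolated, hardened vault service. The point is what ends up in the orders database: a random token, never the card number.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault. In production this would be a separate,
    hardened service and one of the few components left in PCI scope."""

    def __init__(self) -> None:
        self._token_to_pan: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        # A random token has no mathematical relationship to the PAN.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_pan[token] = pan
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back; unknown tokens raise KeyError.
        return self._token_to_pan[token]


def place_order(vault: TokenVault, orders_db: list[dict], order_id: int,
                pan: str, amount_cents: int) -> None:
    """Hypothetical checkout step (runs in the small in-scope service):
    the card number goes to the vault, the orders database gets only a token."""
    token = vault.tokenize(pan)
    orders_db.append({"order_id": order_id, "card_token": token,
                      "amount_cents": amount_cents})


vault = TokenVault()
orders_db: list[dict] = []
place_order(vault, orders_db, 1001, "4111111111111111", 4999)
```

Everything downstream of `place_order` (reporting, refunds lookups, backups of `orders_db`) handles only the token, which is what keeps those systems out of the card-data environment.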

It’s not just PCI. Think about other regulations like HIPAA for healthcare data, or GDPR for personal data in Europe. Many frameworks include the concept of data minimization: only store what you absolutely need. Tokenization is a form of extreme data minimization, because you’re choosing not to keep the sensitive info in your primary systems at all. This supports compliance with many of these laws by significantly reducing the risk of data exposure. In finance and healthcare, for instance, tokenization helps organizations meet strict regulations by keeping sensitive customer data out of their core systems.

Another plus: avoiding hefty fines and consequences. Non-compliance with PCI DSS can mean fines ranging from $5,000 to $100,000 per month (depending on the volume of transactions and size of the business) until compliance is achieved, not to mention the risk of breaches. By using tokenization, you’re less likely to suffer a breach of actual card data (as we discussed), which means you’re less likely to face the penalties and legal liabilities that come with exposing regulated data. And if you do get audited, showing that “we don’t store any real card data” is a strong argument in your favor.

In summary, tokenization protects your app not only technologically but also legally and financially. It simplifies compliance efforts by reducing the amount of sensitive data you handle, thereby lightening the regulatory load on your shoulders. Companies that adopt tokenization often find that audits are smoother and the risk of non-compliance is greatly diminished. This allows you to focus on growing your app’s features rather than constantly worrying about meeting every security rule for every component.

Next, let’s explore how tokenization plays a role in protecting data not just at rest in your systems, but also when it’s moving across networks – a critical aspect of transaction security.

4. Protecting Data in Transit

Every time a user submits a form or your app communicates with a server, sensitive data is in motion – this is what we call data in transit. Protecting data in transit is crucial because attackers might try to eavesdrop on network traffic or intercept messages (through techniques like man-in-the-middle attacks or network sniffing). Tokenization helps here as well: by ensuring that even data in transit is tokenized as early as possible, you reduce the window in which sensitive information is exposed.

In many implementations, tokenization occurs almost immediately when data enters the system. For example, when a mobile app user hits “Pay Now,” their card details might be sent directly to a secure payment gateway that tokenizes the data and returns a token to your app. The actual card number is never even seen by your app’s servers. This means that even if someone were to intercept the message between your app and your server, they’d either catch encrypted gibberish (if using HTTPS, as you should) or they’d catch a token or temporary placeholder – not the actual card data. Tokenization is especially valuable during payment transactions, as it enhances security by protecting sensitive payment data and reducing the risk of fraud.
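One way to enforce “the actual card number is never seen by your app’s servers” is to have the server reject anything that looks like a raw card number. The following Python sketch assumes a hypothetical `handle_pay_now` endpoint that expects a `payment_token` field returned by the gateway. The Luhn check is the standard card-number checksum, but treating any Luhn-valid string of 13 to 19 digits as a card number is a heuristic that can occasionally flag other numbers.

```python
import re


def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum used by payment card numbers (ASCII digits only)."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d = d * 2 - 9 if d > 4 else d * 2
        total += d
    return total % 10 == 0


def looks_like_pan(value: str) -> bool:
    """Heuristic: 13-19 digits (spaces or dashes allowed) that pass the Luhn check."""
    digits = re.sub(r"[ -]", "", value)
    return re.fullmatch(r"[0-9]{13,19}", digits) is not None and luhn_valid(digits)


def handle_pay_now(payload: dict) -> str:
    """Hypothetical server endpoint: it should only ever receive the gateway's token."""
    for key, value in payload.items():
        if isinstance(value, str) and looks_like_pan(value):
            # Fail closed: raw card data belongs at the gateway, not on our servers.
            raise ValueError(f"field {key!r} looks like a raw card number")
    token = payload.get("payment_token")
    if not token:
        raise ValueError("missing payment_token")
    return token
```

Note that the error message names the offending field but never echoes its value, so a rejected card number does not end up in your logs.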

Furthermore, if your app needs to send sensitive data between its own components or to external services, using tokens as the content greatly reduces risk. Tokenization can secure data during transmission because even if data is intercepted mid-stream, it will be useless to hackers without the detokenization mechanism. Consider an API call from your app’s front-end to your back-end: if that call contains only a token (e.g., “customer_token=ABCD1234” instead of “ssn=987-65-4321”), an interceptor can do nothing with ABCD1234 by itself. It references sensitive data, but reveals none of it.

Of course, you should also use standard encryption for data in transit (like TLS/SSL for web traffic) – tokenization isn’t a replacement for that; it’s an additional layer. But tokenization ensures that even if the transit encryption is somehow broken, there’s another safety net. The attackers would then have to also breach the token vault to get any real data, which is far more difficult and unlikely if the vault is well secured and isolated.

An everyday example of protecting data in transit with tokenization is how many payment apps work. When you tap your phone to pay with a mobile wallet, the phone sends a token (sometimes called a device account number) to the payment terminal, not your actual card number. That token travels through the payment network, and only the network’s token service, working with your card issuer, can map it back to the real card number to complete the transaction. This means that at every hop along the way (phone → terminal → payment processor → card network), no actual card number is floating around to be intercepted. Your app can embrace a similar approach by using tokens whenever data leaves one component for another.

Additionally, if your system integrates with third-party services (like analytics, support tools, or partner APIs), tokenization ensures you’re not unnecessarily exposing customer data to those external systems. For instance, you might tokenize email addresses or user IDs before sending data to an analytics provider. That way, even in transit to another service and at rest in that service, user identities remain pseudonymous.
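For the analytics case, a common technique is keyed hashing with HMAC. Strictly speaking this is vaultless pseudonymization rather than vault-based tokenization: it produces a stable, meaningless identifier that cannot be reversed, which is usually all an analytics provider needs. The Python sketch below assumes the key is supplied through a hypothetical `PSEUDONYM_KEY` environment variable and is never shared with the provider.

```python
import hashlib
import hmac
import os

# Assumption: the key comes from a hypothetical PSEUDONYM_KEY environment variable
# and never leaves your systems. The fallback value is for local demos only.
PSEUDONYM_KEY = os.environ.get("PSEUDONYM_KEY", "dev-only-not-secret").encode("utf-8")


def pseudonymize_email(email: str) -> str:
    """Keyed, deterministic pseudonym: the same email always yields the same ID."""
    normalized = email.strip().lower()
    digest = hmac.new(PSEUDONYM_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return "user_" + digest[:24]


# The event sent to the analytics provider carries the pseudonym, not the email.
event = {
    "event": "checkout_completed",
    "user": pseudonymize_email("Alice@Example.com"),
    "amount_cents": 4999,
}
```

Because the same email always maps to the same identifier, the provider can still count returning users and build funnels. Keep the key secret, though: anyone holding it could hash a list of known email addresses and match them.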

In summary, tokenization acts like armor for data in motion. It complements network encryption by making the content meaningless to eavesdroppers. As a result, your app’s transactions are kept secure not just when stored, but throughout their journey over networks and between components. This gives both you and your users peace of mind that sensitive details aren’t flying across the internet in a usable form.

Now, securing against outside threats is one side of the coin. But what about threats from within? Our next point covers how tokenization protects against insider risks, ensuring even those with access to systems can’t abuse data they’re not supposed to see.

5. Guarding Against Insider Threats

Not all threats come from anonymous hackers on the internet; sometimes the risk is much closer to home. Insider threats – such as rogue employees, contractors, or anyone with internal access abusing their privileges – can be just as dangerous to an application’s security. Data tokenization is a strong safeguard against these threats because it limits what insiders can actually access.

In a tokenized environment, even if a database administrator, developer, or support engineer has access to your app’s databases or logs, they will not see actual sensitive information – they’ll see tokens. For example, a customer support staffer looking up a user’s profile might see a token in place of that user’s national ID or credit card on file. Without jumping through special hoops (i.e., going through the secure tokenization system with proper authorization), the insider can’t get the real data. This is essentially the principle of least privilege in action, enforced technologically: staff can do their jobs using tokens (to reference accounts, transactions, etc.) without ever needing raw data.

Consider how this mitigates risk: there have been cases where insiders with database access stole customer information, or where employees snooped on celebrity accounts out of curiosity. If those data fields are tokenized, snooping yields nothing sensitive. An employee can’t casually read a celebrity’s address if it’s stored as a token like “ADDR_TOKEN_ABC123” instead of the real address. Similarly, a malicious insider attempting to exfiltrate data would find that the files they took contain only tokens.

Even routine scenarios benefit: say your database backups are handled by an IT team, or you use a third-party service for debugging that involves viewing data. With tokens, these activities don’t expose actual confidential info. The insiders might not even realize what the real data is; it’s all abstracted away.

To be clear, tokenization doesn’t eliminate the need for other internal security measures (like background checks, access controls, monitoring, etc.), but it adds a powerful layer of safety. It ensures that even if those measures slip (for example, an admin’s account is compromised or someone accesses data they shouldn’t), the most they obtain is tokenized data that they can’t misuse. In essence, tokenization devalues the data in your system for everyone except those with explicit permission via the tokenization system.

Furthermore, if an insider did try to get the actual data, they would have to use the tokenization system, which is typically monitored and logged for any detokenization requests. This creates an audit trail. If someone in the company inappropriately attempted to detokenize something without a valid reason, that could be flagged as suspicious activity.
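A minimal Python sketch of that idea: every detokenization call must state who is asking, in what role, and why, and each attempt is written to an audit log whether or not it is allowed. The `GuardedVault` class and the role names in `ALLOWED_ROLES` are hypothetical; a real deployment would check roles against your identity provider and ship the log to tamper-resistant storage.

```python
import logging
import secrets

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("detokenization.audit")

# Hypothetical role names; map these to your own access-control system.
ALLOWED_ROLES = {"payments-service", "fraud-review"}


class GuardedVault:
    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(12)
        self._store[token] = value
        return token

    def detokenize(self, token: str, *, caller: str, role: str, reason: str) -> str:
        # Log every attempt (allowed or not) so the audit trail also shows misuse.
        allowed = role in ALLOWED_ROLES and bool(reason.strip())
        audit_log.info("detokenize token=%s caller=%s role=%s reason=%r allowed=%s",
                       token, caller, role, reason, allowed)
        if not allowed:
            raise PermissionError(f"{caller} ({role}) may not detokenize")
        return self._store[token]


vault = GuardedVault()
ssn_token = vault.tokenize("987-65-4321")
```

A support agent who calls `vault.detokenize(ssn_token, caller="bob", role="support-agent", reason="curious")` gets a `PermissionError`, and the attempt still lands in the audit log for review.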

In summary, tokenization significantly reduces the risk of human-factor security breaches within your organization. It makes sure that no single employee or administrator can walk away with a trove of customer secrets just by virtue of their access. By replacing sensitive data with tokens at every level, you’ve essentially locked away the true data behind an extra wall that insiders cannot scale without detection. Your app is not only protected from outside hackers, but also from the potential misdeeds or mistakes of those on the inside.

Having covered how tokenization safeguards data from various angles, we now turn to an often overlooked but very practical benefit: maintaining data integrity and format, which shows how tokenization can improve security without disrupting your app’s functionality.

6. Preserving Data Integrity and Format

One impressive aspect of tokenization is that it preserves the format and usability of data, which means you can enhance security without breaking your app’s functionality or user experience. This might seem like a technical detail, but it’s a powerful way tokenization protects your app’s operations and integrity while keeping transactions secure.

When we say tokenization preserves format, we mean that a token can be generated to look similar to the original data. For instance, if you tokenize a 16-digit credit card number, the token can also be 16 digits and might even keep a pattern (like the first few digits to identify the card network or the last four digits for user familiarity). If you tokenize an email address, the token might keep the same general structure (like abcd1234@token.mail). This makes tokenization especially effective for structured fields such as credit card numbers and Social Security numbers, where keeping the original format matters for system compatibility. Why does this matter? Because your app likely has validation rules, database schemas, and integrations that expect data in a certain format. Conventional encryption usually produces output of a different length and character set, whereas tokenization can keep the type and length of the data (format-preserving encryption is a specialized exception), so tokens can pass through your system without causing errors or requiring major changes.

For example, say your e-commerce database has a column for credit card numbers that expects 16 digits. If you encrypted the card number with a conventional cipher, you’d get a long string of random characters that doesn’t fit that pattern (and might not even fit the column length). You’d have to redesign parts of your app to handle this encrypted string every time, and formatting it for display would be impossible without decrypting. With tokenization, however, the token can be designed to be 16 digits and even preserve the last four digits of the card so you can still show “**** **** **** 1234” to the user. The rest of the systems can treat that token just like a normal card number for things like transaction lookups or refunds, without ever needing the actual number.
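Here is a rough Python sketch of generating a 16-digit, format-preserving token that keeps the last four digits for display. It assumes one design choice that some implementations make: the token is deliberately built to fail the Luhn check, so it can never be mistaken for a live card number. The function names are hypothetical, and a real vault would also store the token-to-PAN mapping and check new tokens for collisions, which is omitted here.

```python
import secrets


def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum (expects ASCII digits only)."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d = d * 2 - 9 if d > 4 else d * 2
        total += d
    return total % 10 == 0


def format_preserving_token(pan: str) -> str:
    """Same-length, digits-only token that keeps the PAN's last four digits.

    Assumption: candidates are regenerated until one FAILS the Luhn check,
    so the token can never pass as a real card number.
    """
    last_four = pan[-4:]
    while True:
        random_part = "".join(secrets.choice("0123456789") for _ in range(len(pan) - 4))
        candidate = random_part + last_four
        if candidate != pan and not luhn_valid(candidate):
            return candidate


def masked(token: str) -> str:
    """Display string for a 16-digit token, e.g. on a saved-card screen."""
    return "**** **** **** " + token[-4:]


token = format_preserving_token("4111111111111111")
```

Because the token keeps the card’s length and digits-only format, it fits the existing 16-digit column, and `masked` produces the familiar display string without ever touching the real number.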

This format-preserving nature ensures data integrity within your app’s workflow. Applications, reports, and processes continue to operate normally because the tokens behave like the data they replaced. Sorting a list of tokenized account numbers? It still works mechanically, although the order follows the token values rather than the original numbers unless your scheme is specifically designed to preserve order. Searching for a user by their tokenized email address? That’s doable with deterministic tokenization: tokenize the search term, then look up the resulting token. The tokenization process can be fine-tuned this way, since deterministic tokenization always gives the same token for a given input, which also helps if you need to join data between systems or perform analytics by token value.
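Deterministic tokenization can be sketched in Python as a vault that remembers which token it already issued for a value. The `DeterministicVault` class is hypothetical; it uses an HMAC fingerprint (sometimes called a blind index) as the lookup key, so the reverse index does not hold a second plaintext copy. In practice `INDEX_KEY` would be loaded from a key store rather than generated at startup.

```python
import hashlib
import hmac
import secrets

# Assumption: generated here for the demo; a real service would load it from a key store.
INDEX_KEY = secrets.token_bytes(32)


class DeterministicVault:
    """Hypothetical vault that issues the same token every time it sees the same value."""

    def __init__(self) -> None:
        self._token_by_fingerprint: dict[str, str] = {}  # keyed fingerprint -> token
        self._value_by_token: dict[str, str] = {}        # token -> original value

    def _fingerprint(self, value: str) -> str:
        # Keyed hash lets us find an existing token without using the
        # plaintext itself as a dictionary key.
        return hmac.new(INDEX_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

    def tokenize(self, value: str) -> str:
        fp = self._fingerprint(value)
        if fp not in self._token_by_fingerprint:
            token = "tok_" + secrets.token_hex(12)  # still random, just reused
            self._token_by_fingerprint[fp] = token
            self._value_by_token[token] = value
        return self._token_by_fingerprint[fp]

    def detokenize(self, token: str) -> str:
        return self._value_by_token[token]


vault = DeterministicVault()
users_by_email_token = {vault.tokenize("alice@example.com"): {"plan": "pro"}}

# Search by email without storing it: tokenize the query, then look up the token.
match = users_by_email_token.get(vault.tokenize("alice@example.com"))
```

The trade-off is that identical inputs produce identical tokens, so someone who sees many tokens can tell which records share a value. Use deterministic tokens only for fields you actually need to search or join on.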

What this means for security is subtle but important: because tokenization doesn’t disrupt your operations, there’s no temptation to turn it off or bypass it for convenience. Sometimes, highly secure schemes are so cumbersome that employees or systems find workarounds (like storing unencrypted data somewhere for ease of use). Tokenization avoids this by fitting smoothly into your existing data pipelines. You get stronger security and keep functionality intact – the best of both worlds.

Furthermore, preserving format aids in user experience and trust. Users can often be shown tokens in a friendly way (like seeing the last digits of their card or a masked version of their info) so they feel confident you have their details on file, without you actually storing those details. It signals to users that you’re handling their data carefully – for example, showing “Account: ****6789” implies you’re not exposing their full number and thus are being cautious.

By maintaining data integrity, tokenization also reduces the chance of errors or data corruption. Because tokens can be validated like the original data (for example, a token for a numeric ID can still be checked as numeric), your app’s validation logic remains effective. This means you’re not introducing new vulnerabilities or bugs as you implement tokenization; things continue to work as expected, just more securely.

In summary, tokenization protects your app not only by adding security, but by doing so seamlessly. It preserves the shape and utility of your data, ensuring that increased security doesn’t come at the cost of broken features or frustrated users. This harmonious balance encourages consistent use of tokenization across your app’s transactions, which in turn maximizes security coverage. Next, we’ll discuss how tokenization can even save you money and reduce complexity in your security efforts – an often welcome side effect of doing things the secure way.

7. Reducing Security Costs and Complexity

Strengthening security often brings to mind increased costs and complexity – more encryption keys to manage, more servers to lock down, more compliance checks. However, data tokenization can actually reduce security costs and complexity for your app in the long run, making secure transactions not only feasible but economically sensible.

One immediate cost saving comes from the compliance scope reduction we discussed earlier. By limiting the parts of your system that handle sensitive data (thanks to tokenization), you can streamline audits and invest in securing a smaller footprint. For instance, if only a tokenization service and vault need top-tier security hardening, you might spend less on expensive secure storage solutions or network segmentation for every single server. Fewer systems in scope mean fewer systems requiring intensive monitoring, fewer access controls to implement, and less frequent costly compliance assessments on everything.

Another way tokenization cuts complexity is by eliminating the need to encrypt/decrypt data throughout your application. Consider a scenario without tokenization: you might encrypt data in your database, then every time you need to use it (for display or processing), you have to decrypt it in the app (which means managing keys and exposing data in memory). That’s a lot of moving parts and potential points of failure. With tokenization, your app rarely, if ever, needs to handle raw sensitive data after the initial tokenization. There’s no need for pervasive encryption/decryption operations across dozens of microservices or modules; only the tokenization service handles the real data. This simplifies application logic and reduces the chance of a mistake (like a piece of data accidentally not being encrypted).

Moreover, tokens can be reused for certain operations securely. For example, a tokenized credit card can be stored once and reused for future purchases without re-entering the number (great for user convenience and reducing friction). Because it’s a token, storing it for reuse carries far less risk than storing the card number itself. This streamlines data management – you don’t have to keep asking users for their info, and you avoid storing the actual card each time (which would multiply risk). Stripe’s resources mention that tokens can be reused for future transactions, streamlining the payment process and reducing the need to collect sensitive data repeatedly. This not only improves the user experience (one-click checkout for returning customers) but also lowers the overhead of handling sensitive inputs over and over.
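A sketch of the card-on-file pattern in Python. `FakeGateway` is a hypothetical stand-in for your payment provider (real providers expose their own charge APIs, which this does not imitate), and the `Customer` record holds only the saved token and the last four digits.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FakeGateway:
    """Hypothetical stand-in for a payment provider; it only records charges."""
    charges: list[tuple[str, int]] = field(default_factory=list)

    def charge(self, payment_token: str, amount_cents: int) -> str:
        self.charges.append((payment_token, amount_cents))
        return f"ch_{len(self.charges)}"


@dataclass
class Customer:
    customer_id: str
    saved_payment_token: Optional[str] = None  # reference only, no card number
    card_last4: Optional[str] = None           # safe to show as "Card ending in ..."


def one_click_checkout(gateway: FakeGateway, customer: Customer, amount_cents: int) -> str:
    if customer.saved_payment_token is None:
        raise LookupError("no saved card; collect details through the provider's form")
    # The same token is reused for every purchase; the card is never re-entered.
    return gateway.charge(customer.saved_payment_token, amount_cents)


gateway = FakeGateway()
alice = Customer("cus_001", saved_payment_token="tok_saved_abc", card_last4="1234")
first = one_click_checkout(gateway, alice, 1999)
second = one_click_checkout(gateway, alice, 2499)
```

Keep in mind that anyone able to call your gateway with your credentials and that token could still create charges, so saved tokens deserve access controls even though leaking one does not expose the card number.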

Reducing risk also indirectly saves money by preventing breaches. While it’s hard to quantify the “cost of a breach prevented,” consider that the average data breach in 2024 costs around $4–5 million in damages, fines, and lost business. Tokenization drastically lowers the probability and impact of such an event. In effect, it’s an investment in security that can save huge costs down the line by avoiding the expenses of breach response, legal penalties, and customer compensation. As the saying goes, an ounce of prevention is worth a pound of cure – tokenization is a shining example of this idiom in practice.

From an operational standpoint, tokenization can also lower costs by enabling the use of cloud or external services safely. For example, maybe you want to use a cloud analytics tool on user data but you’re worried about uploading sensitive info. If you tokenize that data first, you can safely use the cloud tool without exposing real data, potentially saving costs by leveraging cloud efficiencies without compromising security. The complexity of building in-house secure analytics might be avoided.

In summary, tokenization simplifies your security model. You centralize sensitive data handling in one secure service and lighten the security requirements everywhere else. This focused approach often translates to cost savings in infrastructure and compliance, as well as reduced overhead in data handling throughout your app. By adopting tokenization, you’re not just buying security peace of mind – you’re also making a pragmatic choice that can improve your bottom line. With these financial and operational benefits in mind, let’s discuss perhaps one of the most important outcomes of secure transactions: the boost in customer trust and confidence.

8. Building Customer Trust and Confidence

When users know that an app takes their security seriously, it builds trust and confidence, which can set your product apart in a crowded market. Data tokenization contributes to this trust in multiple ways, ultimately protecting not just data, but also the relationship between you and your customers.

Firstly, savvy users are increasingly aware of security issues. When a company can tell users, “We never store your credit card or personal details in plain form; we use tokenization so your data is safe,” it sends a strong signal that you value their privacy. This transparency about security measures can be a selling point. Customers might not know the term “tokenization,” but they understand concepts like “we don’t actually keep your full credit card number on our servers.” In an age of frequent news about data breaches, offering a more secure way to handle transactions can increase customer loyalty. People are likely to stick with services that haven’t betrayed their trust with a breach.

Tokenization also enables a smoother user experience in many cases, which in turn boosts confidence. For example, because tokenization allows you to securely save payment details without actually storing the sensitive data, customers can enjoy convenient features like one-click purchases or subscription billing without the unease of thinking, “Does this app have my card number on file?” They might see an interface that says “Card ending in 1234” and feel assured that the company only kept a reference, not the actual card. This creates a smoother experience at checkout and builds trust in your company – users don’t have to re-enter info every time (less hassle) and they implicitly trust you more because you’ve implemented a secure system to handle it.

There’s also a psychological effect: tokenization, by reducing incidents of fraud and breaches, means your users are less likely to encounter a scary situation like fraudulent charges or identity theft originating from your app. Even if they never know tokenization was the protective measure, they will know that using your app has never caused them problems, which builds a track record of trust. On the flip side, if an app suffers a breach, customers often lose faith and may leave for competitors. By preventing breaches (or containing them so no real data leaks), you maintain a trustworthy reputation over time.

Let’s not forget word-of-mouth and reviews: users who feel safe with your app are more likely to recommend it. For example, a parent might recommend a payment app to their friends saying, “I like that this app doesn’t actually store my card details, it uses some secure method to keep my info safe.” They may not use the word tokenization, but the concept translates to a positive review. In an era where one bad security incident can go viral on social media, consistently good security (thanks in part to tokenization) can be a quiet yet powerful asset in your brand’s favor.

Security can support customer retention in less visible ways, too. Maintaining compliance (like PCI DSS) indirectly assures customers that an app meets industry standards. If a business is PCI compliant, customers know it adheres to certain security practices. Since tokenization helps achieve PCI compliance more easily, it becomes part of the toolkit that lets you publicly attest to being secure, which can be used in marketing or customer communications.

In summary, tokenization helps you earn and keep user trust. It shows experience and expertise (you know how to protect their data), it demonstrates authority in security matters (following best practices like tokenization), and it builds a sense of trustworthiness (because even if something goes wrong, their sensitive data isn’t at risk). Users who feel safe will transact more freely and stick around longer. By protecting your customers’ data, you’re also protecting the relationship you have with them – one of the most valuable assets for any app. Now, let’s explore how tokenization keeps your app aligned with the latest payment innovations and why that matters for staying secure and competitive.

9. Embracing Modern Payment Systems

The world of transactions is constantly evolving – from chip cards to mobile wallets to contactless payments and beyond. One reason data tokenization is so powerful is that it allows your app to easily integrate with modern payment systems and emerging technologies, all while keeping transactions secure. Nor is it limited to payment card data: the same approach can protect other financial records, such as banking and stock trading data. In other words, tokenization doesn’t just protect what you have today; it positions you for the future.

Consider mobile payment platforms like Apple Pay, Google Pay, or Samsung Pay. These systems rely heavily on tokenization. When you add a credit card to Apple Pay, Apple doesn’t store your actual card number in the phone. Instead, a Device Account Number (a type of payment token) is created and stored in your device’s secure element. For each transaction, a dynamic security code is generated. The merchant (and any apps using Apple Pay) never see the real card number, only these tokens. If your app supports Apple Pay or similar services, you’re effectively handling tokens by design. By having a tokenization-friendly backend, your app can seamlessly accept these forms of payment, which are highly secure and popular among security-conscious users.

Furthermore, as new payment methods like digital wallets, contactless payments via wearables, or even IoT-based payments (think paying via your smart car or fridge) become common, tokenization is likely to be at their core. Tokenization can be applied to new technologies, allowing businesses to adopt innovative solutions while maintaining high security. By building your app’s payment infrastructure around tokenization, you’re effectively future-proofing it to easily plug in these new payment channels. Instead of dealing with raw card data from a smartwatch, you’d deal with tokens – the process remains consistent and secure.

Another aspect is unified commerce or omnichannel experiences. If you have both an online app and a brick-and-mortar presence, tokenization can unify customer data securely. For instance, a customer could use a loyalty card token or a payment token across in-store and online purchases, and you can link those experiences without exposing actual account numbers. Stripe’s analysis mentions that tokenization allows managing payment data securely across multiple channels – online, offline, mobile – which promotes a consistent and secure customer experience everywhere. Customers today expect that they can, say, order online and return in store, or pay in app and pick up in store, etc. Tokenization helps facilitate these flows without ever compromising security between the channels.

Tokenization also readies your app for any changes in regulations or industry standards. The payment industry might introduce new tokenization schemes (for example, network tokens from Visa/Mastercard that replace PANs with tokens at the network level). If your system is built with token handling in mind, adopting such schemes is easier. In some cases, major card brands have pushed tokenization as a requirement for certain transactions (especially in mobile and e-commerce) to enhance security. By embracing tokenization early, you’re ahead of the curve and won’t be scrambling to comply when such rules kick in.

From a user’s perspective, supporting modern, token-based payments can also be a selling point for your app’s security and convenience. Many users feel safer using Apple Pay or similar because they know their actual card isn’t being shared. If your app proudly supports these methods, you benefit from the security reputation they carry. Additionally, by adopting these methods, you reduce the burden of handling sensitive data yourself – you offload some of that to the tokenization and payment providers, which have robust security measures.

In summary, tokenization is a bridge to the future of secure transactions. It ensures that as payments go more digital, distributed, and innovative, your app can integrate without missing a beat or compromising safety. You’re effectively joining an ecosystem of security-forward payment solutions. This keeps your app competitive (users love modern options) and secure by design. Finally, let’s discuss our last key point: how tokenization helps you stay ahead of evolving threats and requirements, truly future-proofing your app’s security stance.

10. Future-Proofing Your App’s Security

Cyber threats are continually evolving – hackers devise new tactics, and technology keeps advancing. One of the greatest advantages of implementing data tokenization is that it future-proofs your app’s security in a fundamental way. By minimizing sensitive data exposure today, you’re also protecting your app against unknown threats of tomorrow and setting a strong foundation for long-term security.

Firstly, tokenization addresses the core issue that will likely persist in any future scenario: the value of data. As long as sensitive data like personal info and payment details have value, attackers will seek them. Tokenization removes that value from your systems. It’s a timeless defensive strategy. Even as hacking techniques become more sophisticated (using AI to find vulnerabilities, for example), breaching a tokenized system will remain a low-reward endeavor unless the attacker can also penetrate the token vault. You’re structurally making your app a less attractive target. Think of it as keeping the gold in a bank vault rather than in your store – thieves can break into the store (maybe through a new clever method), but they still won’t find the gold there. This fundamental approach will continue to pay dividends as threats change.

Secondly, tokenization frameworks and standards are themselves adapting and improving. By using tokenization, you can more easily adopt new security enhancements. For instance, if in a few years a new form of secure token or exchange protocol emerges, you can update your tokenization service or provider without overhauling your whole app. Your app’s core logic deals with tokens; as long as that interface remains, the behind-the-scenes can upgrade. It’s much harder to retrofit an app that was designed to handle raw data to suddenly become tokenized than it is to improve a tokenization implementation. In that sense, you’ve “future-proofed” the architecture by already decoupling data handling from the rest of your operations.
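In code, this decoupling usually means the application depends on a small interface rather than on a specific vault. The Python sketch below uses `typing.Protocol` for a hypothetical `TokenProvider`; `InMemoryProvider` and `PrefixedProvider` are stand-ins for an old and a new tokenization backend. Swapping one for the other changes the token format, but `save_payment_method` does not change at all.

```python
import secrets
from typing import Protocol


class TokenProvider(Protocol):
    """The only tokenization surface the application code is allowed to use."""
    def tokenize(self, value: str) -> str: ...
    def detokenize(self, token: str) -> str: ...


class InMemoryProvider:
    """Hypothetical original backend (e.g. an early in-house vault)."""
    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(12)
        self._store[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._store[token]


class PrefixedProvider(InMemoryProvider):
    """Hypothetical newer backend with a different token scheme."""
    def tokenize(self, value: str) -> str:
        token = "v2_" + secrets.token_urlsafe(16)
        self._store[token] = value
        return token


def save_payment_method(provider: TokenProvider, db: dict[str, str],
                        user_id: str, card: str) -> None:
    # Application code depends only on the interface, never on a specific vault.
    db[user_id] = provider.tokenize(card)


db: dict[str, str] = {}
old, new = InMemoryProvider(), PrefixedProvider()
save_payment_method(old, db, "u1", "4111111111111111")
save_payment_method(new, db, "u2", "5555555555554444")
```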

We also see trends where more enterprises are adopting tokenization year over year, indicating that it’s becoming a standard best practice. Analysts predict that 80% of enterprises will use tokenization for payment data security by 2025, up from 65% in 2023. This momentum means that tools, services, and best practices around tokenization will continue to grow and improve. By aligning your app with this trend now, you ensure compatibility with emerging industry solutions (like tokenization-as-a-service platforms, improved vault technologies, etc.). You’ll be able to integrate with partners or services that expect tokenized data formats, which might become the norm. Essentially, you’re not running against the crowd of security – you’re moving with it, which is a safer long-term path.

Furthermore, as privacy regulations expand (and they likely will, given the global focus on data protection), tokenization can help you meet new requirements with fewer changes. Many privacy laws focus on whether you store personal data and how you protect it. If regulators ask whether you’re storing sensitive information about users, you can show that most of your systems hold only tokens and that the real data is confined to a tightly controlled vault. Tokenization does not take you out of scope entirely: under the GDPR, for example, pseudonymized data that can be linked back to a person still counts as personal data. Even so, the GDPR explicitly names pseudonymization as a security measure, and shrinking the footprint of real data could spare you costly new controls or restrictions under future laws. It also builds trust with regulators and partners that you’re using state-of-the-art techniques to safeguard data.

Lastly, tokenization’s compatibility with other cutting-edge security measures means you can layer defenses. For example, if quantum computing becomes a threat to certain encryption algorithms, an app that relied solely on encryption might have to scramble to re-encrypt its data with quantum-resistant methods. An app that tokenizes data has an extra layer of defense: randomly generated tokens are not derived from the original data, so weakening an encryption algorithm does not let an attacker recover the data from the tokens themselves. Meanwhile, you can update the encryption used inside the token vault or for data in transit without altering the rest of your system’s logic. This layered approach helps ensure that no single breakthrough in hacking exposes everything at once.

In conclusion, adopting data tokenization is a forward-looking strategy that keeps your app prepared for whatever comes next. It’s an investment in resilience. By reducing dependency on the secrecy of data, you make your app inherently safer against unforeseen vulnerabilities and stricter future standards. Tokenization will continue to be a cornerstone of secure transactions as we advance, and by leveraging it now, you’ve positioned your app to thrive securely both today and in the years to come.

Frequently Asked Questions (FAQs)

How is data tokenization different from encryption?

Answer: Tokenization and encryption both protect data, but they do so differently. Encryption scrambles data using an algorithm and a key; anyone with the key can decrypt the ciphertext and get the original data back. Tokenization, on the other hand, replaces the data with a token and stores the original data separately in a secure vault. When tokens are generated randomly, as in vault-based tokenization, there is no mathematical relationship between a token and the original value, so nothing can be computed from the token alone. In short, encryption is reversible with a key, whereas tokenization is reversible only by looking up the token in a secure system. This makes tokens useless to anyone without access to the tokenization vault, even if they can see or steal the token.
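The difference shows up clearly in a few lines of Python. This is a minimal sketch of vault-based tokenization, assuming a plain dictionary as a stand-in for the isolated vault (a real vault would be a hardened, access-controlled service). The hypothetical `tokenize` function draws each token from `secrets.token_urlsafe`, so the token carries no information about the input; `detokenize` can only look it up.

```python
import secrets

# Stand-in for the isolated token vault (a real one is a hardened, access-controlled service).
vault: dict[str, str] = {}


def tokenize(value: str) -> str:
    # The token is pure randomness: nothing about `value` goes into it.
    token = secrets.token_urlsafe(16)
    vault[token] = value
    return token


def detokenize(token: str) -> str:
    # The only way back is a lookup; there is no key or formula to apply to the token.
    return vault[token]


first = tokenize("4111111111111111")
second = tokenize("4111111111111111")
print(first != second)  # True: same input, unrelated tokens in this sketch
print(detokenize(first))  # prints: 4111111111111111
```

In this sketch, tokenizing the same card number twice yields two unrelated tokens. Some systems deliberately return the same token for repeated values so tokens can be matched across records; that is a design choice rather than a property of tokenization itself.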

Does tokenization slow down my app’s performance?

Answer: Implementing tokenization has minimal impact on performance when done properly. Swapping data for a token (or vice versa) is usually fast: typically a database lookup in the token vault or a quick call to a tokenization service. Well-designed tokenization solutions are built to handle high transaction volumes, and in many cases users won’t notice any difference. The overhead that remains (a network call or lookup) is a small trade-off for the security benefits. Tokenization can also save work elsewhere: because most of your app handles tokens rather than sensitive data, it may need to encrypt and decrypt that data less often.

Is tokenization required for PCI DSS or other compliance?

Answer: PCI DSS does not strictly require tokenization, but tokenization is strongly encouraged and widely used to help meet its requirements. PCI DSS requires that stored cardholder data be protected, and tokenization achieves that because systems that hold only tokens are not storing the actual card data at all. Using tokenization can therefore reduce the scope of your PCI DSS assessment, making compliance easier. While you could use encryption or other methods to protect data and be compliant, tokenization often makes compliance simpler and more cost-effective. Similarly, the GDPR’s data minimization principle and HIPAA’s minimum necessary standard push organizations to limit how much sensitive data they hold and expose; tokenization helps by keeping only tokens in most systems.

What kinds of data should I tokenize in my app?

Answer: You should tokenize any sensitive data that could cause harm if exposed. Common examples include payment card numbers, bank account numbers, Social Security numbers, health records, driver’s license numbers, and other personally identifiable information (PII). Essentially, if a piece of data could lead to fraud or identity theft, or if it’s protected by privacy laws, it’s a good candidate for tokenization. Many apps start with payment info and personal IDs, then extend tokenization to things like addresses, phone numbers, or even email addresses, depending on their threat model. The goal is to limit how much real data is stored in your databases. Tokenizing non-sensitive information isn’t necessary, though, so focus on the data that actually needs protection.
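One way to act on this advice is an explicit, field-level policy. The Python sketch below assumes a hypothetical `SENSITIVE_FIELDS` set and a dictionary standing in for the vault; only fields on the list are swapped for tokens, and everything else is stored as-is. Which fields belong on the list is your call, based on your threat model and the laws that apply to you.

```python
import secrets

# Hypothetical policy: the list of sensitive fields is an app-specific decision.
SENSITIVE_FIELDS = {"card_number", "ssn", "drivers_license", "phone"}


def tokenize_record(record: dict[str, str], vault: dict[str, str]) -> dict[str, str]:
    """Return a copy of `record` with only the sensitive fields replaced by tokens."""
    stored: dict[str, str] = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS:
            token = secrets.token_urlsafe(16)
            vault[token] = value  # the real value lives only in the vault
            stored[field] = token
        else:
            stored[field] = value  # non-sensitive data is stored unchanged
    return stored


vault: dict[str, str] = {}
row = tokenize_record(
    {"username": "ada", "plan": "pro", "card_number": "4111111111111111", "ssn": "123-45-6789"},
    vault,
)
print(row["username"], row["plan"])  # prints: ada pro
print(len(vault))  # prints: 2
```

Keeping the policy in one named place also makes it easy to extend tokenization to new fields later without touching the rest of the storage code.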

Can tokens be stolen or misused by hackers?

Answer: Tokens can be stolen, but on their own they are useless to hackers. If an attacker breaches your system and grabs tokens, they can’t do much with them. A token is just a reference: it doesn’t reveal the original data, and a payment token can’t be used to transact without going through the secure tokenization system. However, if an attacker also gains access to the tokenization system or vault, they could potentially detokenize data. This is why the tokenization system must be highly secured and isolated from regular app systems. In practice, a well-protected token vault is far harder to compromise than other parts of an app. So while no system is 100% hack-proof, tokenization greatly limits what a hacker can do: they’d have to overcome multiple layers of security to reach actual sensitive data, which is much harder when best practices are followed.
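Because the vault is the one place where tokens can be turned back into real data, access to detokenization is what needs the tightest control. The sketch below illustrates the idea with a hypothetical `TokenVault` class that lets only an allow-listed role detokenize and records every attempt. The role names and the in-memory audit log are assumptions for illustration; a real deployment would rely on its platform’s authentication, network isolation, and monitoring.

```python
import secrets


class TokenVault:
    """Sketch of a vault that lets only authorized callers detokenize (hypothetical design)."""

    # Assumption: only the payment-processing service is allowed to see real values.
    ALLOWED_ROLES = frozenset({"payment-processor"})

    def __init__(self) -> None:
        self._store: dict[str, str] = {}
        self.audit_log: list[tuple[str, bool]] = []

    def tokenize(self, value: str) -> str:
        token = secrets.token_urlsafe(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str, caller_role: str) -> str:
        allowed = caller_role in self.ALLOWED_ROLES
        self.audit_log.append((caller_role, allowed))  # record every attempt, allowed or not
        if not allowed:
            raise PermissionError(f"role {caller_role!r} may not detokenize")
        return self._store[token]


vault = TokenVault()
token = vault.tokenize("123-45-6789")
print(vault.detokenize(token, "payment-processor"))  # prints: 123-45-6789
try:
    vault.detokenize(token, "web-frontend")  # an unauthorized caller is refused
except PermissionError as exc:
    print(exc)  # prints: role 'web-frontend' may not detokenize
print(vault.audit_log)  # prints: [('payment-processor', True), ('web-frontend', False)]
```

Even an attacker holding a valid token gets nothing back unless they can also act as an authorized caller, and the audit log gives you a trail of who tried.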

How do I start implementing tokenization in my app?

Answer: To implement tokenization, you have three main approaches:

Use a Tokenization Service/Provider: Many payment gateways (like Stripe, Braintree, etc.) and cloud providers offer tokenization as a built-in feature. For example, when accepting a credit card payment, you can use their API to get a token for the card, and then use that token for charges. You never handle the raw card data yourself – the provider does and returns a token. Similarly, some database security platforms provide tokenization for general data. Using these services is often the quickest way to add tokenization with minimal development effort.

Build or Use a Tokenization Server: If you need in-house control (say for specific data types or compliance reasons), you can set up a tokenization server. This is a secure application that you host, which has a database (the token vault) and APIs to tokenize and detokenize data. Your app would communicate with this service whenever it needs to swap data for a token or vice versa. There are open-source libraries and commercial software that can help with this, so you don’t have to write it from scratch. Key considerations are to secure this server strongly (network isolation, encryption, access controls) since it becomes the gatekeeper of your sensitive info.

Client-Side Tokenization: In some cases, you can tokenize data on the client side (like in a web browser or mobile app) before it even hits your servers. For example, web payment forms can directly send data to the payment processor to tokenize, so your server only gets the token. This requires some integration work but is a great way to ensure sensitive data never traverses your system.
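For the self-hosted option, the heart of a tokenization server is a small vault table plus tokenize and detokenize operations. The following Python sketch assumes SQLite (via the standard `sqlite3` module) as a stand-in for the vault database; `SqliteTokenVault` is a hypothetical name, and the HTTP API, authentication, and encryption at rest that a real server needs are deliberately left out.

```python
import secrets
import sqlite3


class SqliteTokenVault:
    """Storage core of a self-hosted tokenization server (illustrative sketch).

    Assumptions: SQLite stands in for the vault database; the HTTP API, authentication,
    network isolation, and encryption at rest that a real server needs are omitted.
    """

    def __init__(self, path: str = ":memory:") -> None:
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS vault (token TEXT PRIMARY KEY, value TEXT NOT NULL)"
        )

    def tokenize(self, value: str) -> str:
        token = secrets.token_urlsafe(16)
        with self._db:  # commits on success, rolls back on error
            self._db.execute("INSERT INTO vault (token, value) VALUES (?, ?)", (token, value))
        return token

    def detokenize(self, token: str) -> str:
        row = self._db.execute("SELECT value FROM vault WHERE token = ?", (token,)).fetchone()
        if row is None:
            raise KeyError("unknown token")
        return row[0]


vault = SqliteTokenVault()
token = vault.tokenize("4111111111111111")
print(vault.detokenize(token))  # prints: 4111111111111111
```

Parameterized queries keep tokens and values out of the SQL text itself; in a real server, these two methods would sit behind authenticated API endpoints on an isolated network.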

After choosing the method, you’ll integrate tokenization into your data flows. Update your database schemas so that the fields that used to hold sensitive values hold tokens instead, adjust your backend logic to call the tokenization service, and test thoroughly to ensure everything still works (format-preserving tokens make this easier, because they fit the existing fields and validation). It’s wise to start with one type of data, such as payment info, and then extend tokenization to other sensitive fields gradually. Also, educate your team about how tokens should be handled: tokens are not the actual secrets, but they should still never be exposed publicly.
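Here is a rough idea of what a format-preserving token can look like for a card number. This hypothetical `format_preserving_token` function, written in Python, keeps the length and the last four digits and replaces the rest with random digits, retrying until the token is unique in the vault. It is a sketch under those assumptions, not the scheme any particular provider uses; real products define their own token formats.

```python
import secrets


def format_preserving_token(card_number: str, vault: dict[str, str]) -> str:
    """Hypothetical format-preserving token: same length, same last four digits.

    All other digits are random, so the token exposes no more than the last four,
    which apps commonly display anyway.
    """
    digits = card_number.replace(" ", "")
    if not digits.isdigit() or len(digits) <= 4:
        raise ValueError("expected a card number made of digits")
    while True:
        random_part = "".join(secrets.choice("0123456789") for _ in range(len(digits) - 4))
        token = random_part + digits[-4:]
        # Retry in the unlikely case of a clash with an existing token or the real number.
        if token not in vault and token != digits:
            vault[token] = digits
            return token


vault: dict[str, str] = {}
token = format_preserving_token("4111 1111 1111 1234", vault)
print(len(token), token[-4:])  # prints: 16 1234
```

Because the token has the same shape as a card number, existing columns, length checks, and “card ending in 1234” displays can keep working with tokens.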

By taking these steps, you can implement tokenization and greatly enhance your app’s security posture.

Conclusion

Secure transactions are the backbone of trust in today’s app-driven economy. We’ve explored “10 Powerful Ways Secure Transactions: How Data Tokenization Protects Your App,” and the message is clear – tokenization is a game-changing strategy to safeguard your application and its users. By replacing sensitive data with valueless tokens, you’ve effectively put your most prized data in a hardened vault and given would-be thieves nothing but useless tokens to find. This approach dramatically reduces the risk of breaches and their impact, ensuring that even if attackers strike, they come up empty-handed.

Throughout this article, we saw how tokenization strengthens security on several fronts: it keeps hackers at bay, minimizes damage in worst-case scenarios, and makes compliance a much easier hill to climb. It works hand-in-hand with other security measures like encryption to protect data in transit, and it even thwarts insider threats by limiting what staff can see. What’s more, tokenization achieves all this without sacrificing functionality: your app continues to run smoothly, as tokens preserve the format and usefulness of data. In fact, a tokenized system often runs more efficiently in the long run, with lower security overhead and simpler audits.

We also highlighted the broader business benefits: gaining customer trust by showing you put their security first, and staying agile by embracing modern payment trends that rely on tokenization. As the landscape of threats and regulations evolves, tokenization stands out as a future-proof solution that keeps your app resilient. Analysts’ forecast that 80% of enterprises will use tokenization for payment data security by 2025 is a strong signal that this is not just a tech fad but a new standard for protecting transactions and data in general.

In an optimistic light, think of tokenization as empowering you to innovate without fear. You can confidently add new features, integrate new services, or expand your business, knowing that this protective layer is woven into your app’s fabric. It provides peace of mind for you and your users. Industry experience and expert guidance consistently point toward tokenization as a best practice, and by implementing it, you position yourself as a trustworthy steward of user data.

As you move forward, remember that security is an ongoing journey. Tokenization is a major leap in the right direction, and maintaining strong token management practices (securing the vault, monitoring access, etc.) will ensure it continues to serve you well. With the knowledge from these 10 powerful ways, you can confidently transform how your app handles data. You’re not just keeping up with security standards; you’re setting the pace.

In summary, data tokenization protects your app by making secure transactions the default – reducing risk, building trust, and paving the way for growth unhampered by security woes. It’s an investment in your app’s future and an assurance to your users that their information is safe in your hands. Now is the perfect time to embrace tokenization and enjoy the dual benefits of stronger security and greater user confidence. Here’s to a safer, smarter app for everyone!
