The Best AI Assistants for Code Review and Debugging in 2025

Key Takeaways

AI assistants are revolutionizing code review and debugging by automating routine tasks and boosting development speed without sacrificing quality. Here’s how you can leverage these tools to supercharge your workflows in 2025.

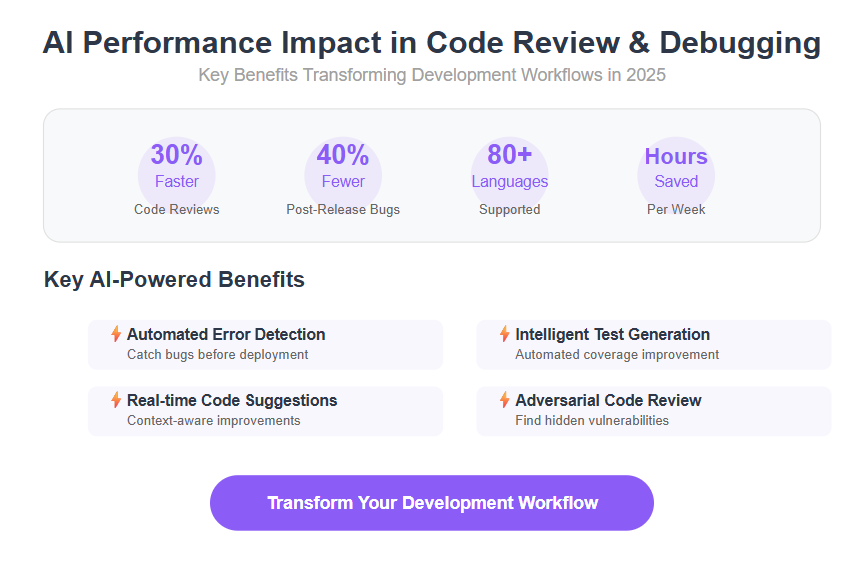

- AI can speed up code reviews (some teams report gains of up to 30%) through automated error detection, test generation, and change tracking, letting your team focus on complex problem-solving and innovation.

- Choose AI assistants that fit your tech stack and IDEs—tools like Tabnine support 80+ languages, while Qodo excels in VS Code and JetBrains for seamless integration.

- Adversarial critique and multi-agent AI collaboration can improve code quality by actively probing for hidden vulnerabilities before deployment, making reviews more precise.

- Integrate AI gradually into workflows by automating repetitive checks first and preserving human judgment on architecture and design to avoid “black box” dependence.

- Use asynchronous AI pull requests (e.g., Google Jules) to maintain developer control while accelerating fixes and reducing review bottlenecks in distributed or hybrid teams.

- Manage AI suggestion volume strategically by prioritizing critical alerts and batching minor fixes, preventing cognitive overload and keeping teams engaged.

- Prioritize vendor transparency and invest in training: regular updates, clear documentation, and team education maximize your AI assistant’s effectiveness and adoption.

- Future-proof your process with AI-driven CI/CD and predictive analytics that detect vulnerabilities in real time and forecast risky code areas, enabling proactive bug prevention.

Start implementing these insights today to transform your code reviews from a slow grind into a fast, accurate sprint. Dive into the full article to see which AI assistant fits your team’s unique needs and workflows.

Introduction

Ever spent hours wading through code reviews only to catch bugs late in the game? You’re not alone. With software delivery timelines tightening, developers need smarter ways to catch errors faster—without burning out.

That’s where AI assistants come in, reshaping how code review and debugging happen in 2025. These tools are no longer futuristic novelties; they’re becoming essential teammates that help you:

- Automate repetitive checks

- Spot subtle bugs earlier

- Generate and verify tests on the fly

For startups and SMBs racing against the clock, this can mean review cycles up to 30% shorter without sacrificing quality. For enterprises aiming to scale securely, AI’s analysis of large codebases can surface vulnerabilities a human reviewer might miss.

But not all AI assistants are built the same. Some focus on intelligent project decomposition, others shine with adversarial critique or real-time suggestions tailored to your coding environment. Choosing the right assistant could transform your workflow from tedious to turbocharged.

In this guide, you’ll discover key AI tools making waves in the dev community, practical ways they boost productivity, and how to integrate them smoothly into your existing processes.

By the end, you’ll know how to hand off repetitive, error-prone work to AI while keeping strategic decisions firmly in your hands—turning code review and debugging into powerful accelerators instead of bottlenecks.

Let’s unpack how these AI copilots are changing the game right now.

Understanding AI Assistants in Code Review and Debugging

AI assistants are transforming how developers tackle code review and debugging by embedding smart automation directly into everyday workflows. Think of them as digital copilots that sift through your codebase to catch errors, suggest improvements and refactorings, and help maintain code quality in real time.

How AI is Shaping Modern Development

Traditional code reviews often feel like a bottleneck, with manual inspections slowing down release cycles. AI flips this script by:

- Automating repetitive tasks like syntax checks and style enforcement

- Providing accurate code suggestions for bug fixes and optimizations

- Processing large code contexts faster than any human reviewer could

These AI tools can assist with various programming tasks, from debugging to code optimization.

Startups and SMBs gain a clear edge here: speed, accuracy, and scalability can improve substantially without piling on headcount or extending deadlines.

The Tech Behind the Magic

At the core, most AI assistants rely on:

- Large Language Models (LLMs) such as OpenAI’s GPT or Google’s Gemini, trained on vast amounts of natural language and source code so they can follow both programming syntax and logic

- Intelligent, autonomous agents that decompose complex projects into manageable parts

- Adversarial review systems that actively test code for vulnerabilities and logic gaps

AI assistants can interpret natural language prompts and queries to generate code or answer questions. Some tools can even generate entire functions from a short description, streamlining the coding process.

Imagine a “critic” running alongside your code, constantly challenging it to be better—that’s adversarial review in action.

What AI Handles Versus What Still Needs You

AI shines at automating:

- Detecting common errors and code smells

- Generating unit tests and verifying coverage

- Writing boilerplate code and handling first-pass pull request reviews

AI also assists with code optimization by analyzing code and suggesting performance improvements during code review.
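To make "generating unit tests" concrete, here is the kind of test file an assistant might propose for a small helper. This is a minimal sketch in Python using the standard `unittest` module. Both the `parse_version` function and its tests are hypothetical and were written by hand for illustration. They are not output from any specific tool.

```python
import unittest


def parse_version(text: str) -> tuple[int, int, int]:
    """Parse a 'MAJOR.MINOR.PATCH' string into a tuple of ints (hypothetical helper)."""
    parts = text.strip().split(".")
    if len(parts) != 3:
        raise ValueError(f"expected MAJOR.MINOR.PATCH, got {text!r}")
    major, minor, patch = (int(p) for p in parts)  # int() raises ValueError on junk
    return major, minor, patch


class TestParseVersion(unittest.TestCase):
    # Happy path: the case a human usually writes first.
    def test_basic(self):
        self.assertEqual(parse_version("1.2.3"), (1, 2, 3))

    # Edge cases of the kind generated suggestions can add to raise coverage.
    def test_surrounding_whitespace(self):
        self.assertEqual(parse_version(" 10.0.7\n"), (10, 0, 7))

    def test_too_few_parts(self):
        with self.assertRaises(ValueError):
            parse_version("1.2")

    def test_non_numeric_part(self):
        with self.assertRaises(ValueError):
            parse_version("1.x.3")
```

Run it with `python -m unittest <file>`. The three edge-case tests show how suggestions can extend coverage beyond the obvious happy path.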

But AI isn’t replacing human judgment anytime soon. Strategic decisions, architectural design, and nuanced problem-solving require your expertise.

Picture This

You’re juggling multiple feature releases. Instead of sifting through thousands of lines manually, you let your AI assistant review the new code first. It flags critical bugs, suggests fixes, and writes initial test cases, all before your review starts. That frees you to focus on innovation and the complex debugging that demands a human touch.

AI assistants accelerate development by handling grunt work while boosting confidence in code quality. The immediate takeaway? Embrace AI to shave hours off review cycles and scale your development velocity without sacrificing accuracy. This is about working smarter—not harder—in 2025’s fast-paced software landscape.

Types of AI Coding Assistants

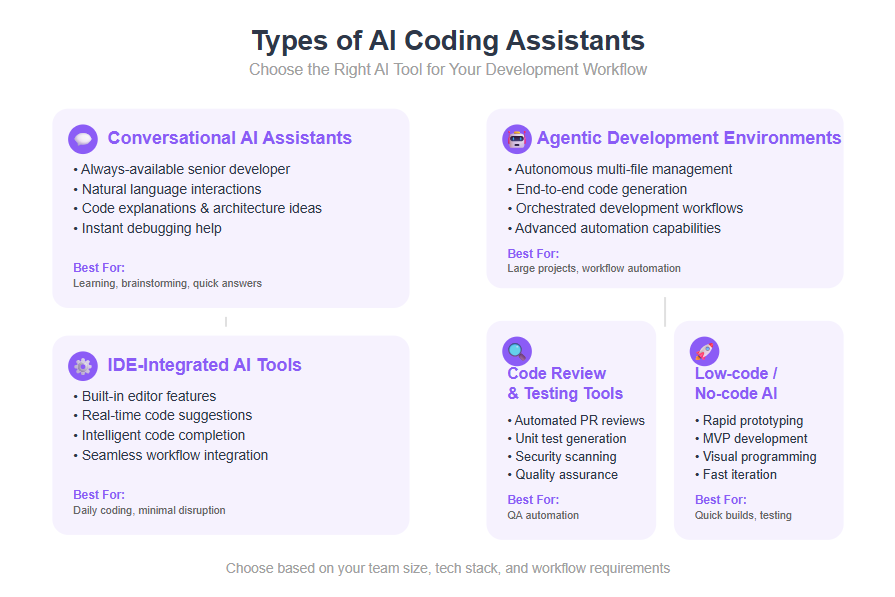

AI coding assistants come in a variety of forms, each designed to fit different stages of the development process and different team needs. Understanding these categories can help you choose the best AI coding tools for your workflow and get the most out of AI-powered code completion, code suggestions, and code quality improvements.

- Conversational AI Assistants: These act like always-available senior developers, ready to answer questions, explain complex code, or brainstorm architecture ideas. They excel at natural language interaction, which makes it easy to ask for code explanations, debugging help, or best practices. Whether you’re stuck on a tricky bug or need a quick code review, conversational AI coding assistants provide instant, judgment-free support.

- Agentic Development Environments (ADEs): Representing the next generation of AI coding tools, ADEs go beyond simple suggestions. They can autonomously edit multiple files, orchestrate entire development workflows, and even handle end-to-end code generation. These assistants suit teams looking to automate larger chunks of the development process, from initial design to deployment.

- Integrated Development Environments (IDEs) with AI Capabilities: Many popular code editors now ship with built-in AI coding assistant features. These tools offer real-time code suggestions, intelligent code completion, and context-aware code generation as you type, without disrupting your existing workflow. Because the AI is embedded directly in familiar environments like Visual Studio Code or JetBrains IDEs, these assistants boost productivity and code quality with a minimal learning curve.

- AI-powered Code Review and Testing Tools: These AI tools focus on automating review and testing, and they help ensure your code meets best practices and security standards. They can generate unit tests, review pull requests, and flag potential issues before they reach production. By integrating with your codebase, these AI code assistants streamline quality assurance and reduce manual effort.

- Low-code and No-code AI Tools: Suited to rapid prototyping and MVP development, these AI-powered platforms let users build functional applications with minimal manual coding. With AI code generation, even non-developers can create and iterate on software quickly, which makes these tools valuable for testing ideas and accelerating time-to-market.

By understanding the different types of AI coding assistants available, you can select the right AI tool for your team’s needs, whether you’re looking for conversational support, autonomous multi-file development, or rapid prototyping.

Top AI Assistants Revolutionizing Code Review and Debugging in 2025

AWS Kiro: Agentic AI IDE for Structured Development

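AWS Kiro stands out by breaking complex projects into manageable components through spec-driven development. Before writing code, it turns a prompt into requirements, a design, and a task list. It also supports the Model Context Protocol (MCP) for connecting external tools and data sources.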

Its intelligent project planning helps teams stay organized from start to finish, while built-in verification and change tracking catch mistakes early—before deployment headaches arise.

Picture this: a startup juggling multiple feature rollouts uses Kiro’s structured workflows and error detection to cut its bug rate by as much as 30%. Kiro is a standalone IDE built on Code OSS, so it feels familiar to VS Code users and fits alongside the rest of your development stack.

- Intelligent project decomposition

- Built-in verification steps

- Change tracking to reduce deployment errors

- Advanced features available for power users or enterprise teams

Google Jules: Asynchronous Coding Agent with Adversarial Critique

Google’s Jules works quietly in the background, pushing code updates as pull requests for your review, keeping control firmly in your hands.

Powered by the Gemini 2.5 Pro model, Jules processes extensive code context to detect vulnerabilities and suggest fixes. Its “critic” feature adds adversarial scrutiny, finding weaknesses before they turn into problems. Because Jules connects directly to your GitHub repositories, its work arrives in the same pull request workflow your team already uses.

Imagine a LATAM enterprise catching security flaws earlier in the cycle because Jules flagged risky logic changes that human reviewers might have missed.

- Asynchronous code updates delivered as reviewable pull requests

- Gemini 2.5 Pro-powered deep context analysis

- Adversarial “critic” reviews for enhanced code quality

- Code analysis that flags vulnerabilities and quality issues

GitHub Copilot: Real-Time Code Suggestions Across Languages

GitHub Copilot’s ace is real-time support backed by a choice of AI models, including OpenAI’s GPT, Anthropic’s Claude Sonnet, and Google’s Gemini. You can pick whichever model works best for the language or framework you’re tackling.

It’s like having a seasoned reviewer by your side, helping you navigate unfamiliar codebases and reduce docs-digging time.

Copilot integrates with most popular code editors, so it slides easily into your daily coding flow. Because it is built into GitHub, it can also review pull requests and summarize changes, keeping collaboration and version control in one place.

- Multiple AI language model options

- Reduces time spent reading documentation

- Deep IDE integration for seamless workflows

Qodo (formerly CodiumAI): Comprehensive Code Generation and Review Suite

Qodo automates key development tasks by combining:

- Real-time test generation

- Automated pull request reviews

- Test coverage assurance

Qodo supports the entire development lifecycle, from code generation to review. During review it can analyze and improve existing code as well as new changes.

This tool hooks into IDEs like VS Code and JetBrains, plus Git platforms—boosting productivity and improving code reliability during fast development sprints.

Think of an SMB hitting deadlines faster with fewer bugs because Qodo manages the nitty-gritty review details automatically.

Tabnine: Multi-Language AI Assistant for Code Completion and Explanation

Tabnine supports over 80 languages with seamless IDE integrations, helping developers:

- Complete code faster

- Fix bugs smartly

- Generate documentation automatically

- Generate and explain code in Python and other supported languages

- Understand complex code snippets with clear explanations

Tabnine can also produce functional code snippets directly from natural language input, making it easier for developers to go from idea to implementation.

It’s especially handy when maintaining or debugging legacy code, turning what used to be a headache into a smooth workflow.

Tabnine delivers low-friction AI assistance wherever you code, keeping projects moving without interruptions.

These AI assistants transform code review and debugging by automating tedious tasks, spotting hidden errors, and integrating smoothly into developer workflows.

Ready to cut review cycles and level up your code quality? Tools like AWS Kiro and Google Jules show that structured AI workflows and adversarial critique aren’t sci-fi anymore. They’re essentials for agile teams.

Top takeaway: Pick an AI assistant that fits your language stack and workflow style to amplify speed without sacrificing control.

How AI Assistants Unlock Developer Productivity in Code Review and Debugging

AI assistants dramatically reduce the time spent on repetitive tasks like error detection and test writing, cutting hours off development cycles. By automating these routine parts, developers can focus on the strategic challenges that truly require human creativity.

Pricing is flexible too. Free versions and usage-based plans make AI assistants accessible to teams of all sizes, and paid tiers add advanced features and higher usage quotas for power users.

AI-Generated Code: Opportunities and Pitfalls

AI-generated code is transforming how developers approach software creation, bringing both exciting opportunities and important challenges. AI coding assistants can supercharge productivity, but relying on generated code has pitfalls. Common ones include subtle logic errors, insecure patterns, and code that no one on the team fully understands.

These assistants speed up root cause analysis through advanced diagnostics powered by large language models and intelligent code parsing. Instead of manually tracing bugs line by line, AI tools highlight likely sources of failure based on patterns learned from millions of code examples.
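Root cause analysis usually starts from the same raw signal a developer uses: the stack trace. The sketch below uses Python’s standard `traceback` module to return the innermost frame that belongs to your own code, which is usually the first suspect. Two things are assumptions of this sketch: treating files under a `project_root` prefix as your code, and the hypothetical `parse_price` and `total` functions. AI tools layer learned failure patterns on top of signals like this one.

```python
import traceback


def innermost_project_frame(exc: BaseException, project_root: str):
    """Return the deepest traceback frame whose file lives under project_root, if any."""
    frames = traceback.extract_tb(exc.__traceback__)
    for frame in reversed(frames):  # walk from the deepest call outward
        if frame.filename.startswith(project_root):
            return frame
    return None


# Hypothetical code under investigation.
def parse_price(raw):
    return float(raw.strip("$"))


def total(prices):
    return sum(parse_price(p) for p in prices)


try:
    total(["$3.50", "$4.oo"])  # typo: letters instead of zeros
except ValueError as exc:
    # Treat this script's own file as "project code" for the demo.
    here = traceback.extract_tb(exc.__traceback__)[0].filename
    frame = innermost_project_frame(exc, here)
    print(f"{frame.filename}:{frame.lineno} in {frame.name}: {frame.line}")
```

Here the reported frame is `parse_price`. That is where `float()` fails on `"4.oo"`, even though the call started two levels higher in `total`.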

Key productivity improvements come from AI’s ability to:

- Generate and update test cases automatically, improving coverage

- Detect subtle syntax and logic errors early in the review process

- Propose fixes with contextual explanations, speeding debugging sessions

- Provide continuous verification and change tracking to minimize regressions

For example, Google Jules’ asynchronous pull request updates allow human reviewers to focus on higher-level decisions while AI handles routine fixes behind the scenes.

This shift lets teams harness AI for heavy lifting while retaining human judgment where it matters most: in assessing code safety, architecture, and user experience implications. Striking that balance keeps false positives and risky changes from slipping through, maintaining code integrity without slowing development.

Reported results point the same way. Some startups using AI assistants like AWS Kiro cite up to 30% faster code review turnarounds, and some SMBs using Qodo report higher test coverage and fewer post-release bugs.

- Faster reviews mean quicker feature rollouts

- Automated test generation reduces technical debt

- Early error detection lowers production incidents

Picture your next sprint with AI pulling most quality checks off your plate—letting your team innovate instead of firefight.

AI tools don’t replace developers—they turbocharge them. By offloading tedious tasks and amplifying insights, these assistants free up creative energy and accelerate software delivery.

The smartest move you can make? Start exploring how AI can integrate into your review processes today, and watch productivity shift from surviving to thriving.

Strategic Integration of AI Assistants in Team Code Reviews

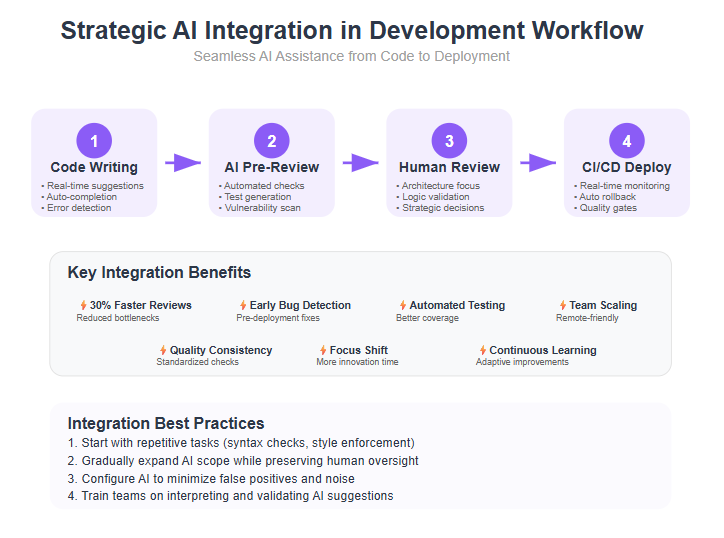

Integrating AI assistants into your team’s code review process starts with aligning these tools with your established workflows. Don’t overhaul everything overnight—focus on gradually introducing AI where it fits best and adds clear value.

AI assistants can also act as a real-time pair programmer that collaborates with developers as they write code, and code-sharing features help teams collaborate more effectively during review.

Best Practices for Seamless Adoption

- Identify repetitive tasks like code style checks and preliminary bug detection for AI automation

- Use AI-generated pull requests to surface early feedback without blocking human review

- Establish review protocols that prioritize human judgment on complex logic and architecture

- Train teams on interpreting AI suggestions critically, not blindly accepting outputs

This approach prevents turning AI into a “black box,” ensuring reviewers maintain control while benefiting from AI’s speed.

Enhancing Collaboration with AI-Driven Feedback Loops

Imagine an AI assistant catching low-hanging errors and submitting pull requests asynchronously in the background. This setup:

- Accelerates feedback cycles by circulating automated suggestions before human review

- Encourages iterative improvements with continuous AI-human interaction

- Helps distributed teams stay in sync by providing a consistent baseline of code quality checks

A startup we know cut code review times by 30% after adopting this model, freeing devs to focus on innovation.

Managing AI Suggestions Without Cognitive Overload

AI can spit out a lot of suggestions quickly—too quickly, sometimes. To avoid reviewer fatigue:

- Configure AI tools to prioritize recommendations by severity or confidence

- Limit notifications to critical updates, bundling minor fixes as batch suggestions

- Rotate review responsibilities with AI insights to keep perspectives fresh

This keeps the team engaged without drowning in AI noise.
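These configuration ideas translate into a small triage step. The sketch below is a hypothetical post-processor, not any tool’s built-in feature. It drops low-confidence suggestions, surfaces critical and major ones immediately (sorted by severity, then confidence), and batches the rest per file. The severity scale, the 0.6 confidence cutoff, and the `Suggestion` fields are all assumptions.

```python
from dataclasses import dataclass

# Assumed severity scale; real tools define their own levels.
SEVERITY_RANK = {"critical": 3, "major": 2, "minor": 1, "style": 0}


@dataclass
class Suggestion:
    file: str
    message: str
    severity: str      # one of SEVERITY_RANK's keys
    confidence: float  # 0.0 to 1.0, as reported by the (hypothetical) tool


def triage(suggestions, min_confidence=0.6, alert_levels=("critical", "major")):
    """Split suggestions into immediate alerts and a per-file batch for later."""
    confident = [s for s in suggestions if s.confidence >= min_confidence]
    alerts = sorted(
        (s for s in confident if s.severity in alert_levels),
        key=lambda s: (SEVERITY_RANK[s.severity], s.confidence),
        reverse=True,  # most severe, most confident first
    )
    batch: dict[str, list[Suggestion]] = {}
    for s in confident:
        if s.severity not in alert_levels:
            batch.setdefault(s.file, []).append(s)
    return alerts, batch


found = [
    Suggestion("auth.py", "SQL built from user input", "critical", 0.92),
    Suggestion("auth.py", "Unused import", "style", 0.99),
    Suggestion("api.py", "Possible None dereference", "major", 0.71),
    Suggestion("api.py", "Rename variable", "minor", 0.40),  # dropped: low confidence
]
alerts, batch = triage(found)
for s in alerts:
    print(f"[{s.severity}] {s.file}: {s.message}")
```

Only two alerts interrupt the reviewer. The unused import waits in the batch, and the low-confidence rename never appears.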

Scaling AI Across Distributed or Hybrid Teams

For SMBs and startups with remote developers, consistent AI feedback is a lifeline. Make scaling AI adoption easier by:

- Standardizing AI configurations across all team members’ IDEs, for example by having everyone install the same extension with a shared settings file

- Integrating AI outputs with collaboration platforms like GitHub or Jira

- Pairing AI assistance with regular team syncs to discuss edge cases and refine AI configurations

Some teams using GitHub Copilot and Qodo report smoother code syncs and less rework, even across time zones.

Real-World Success in Startup Teams

One LATAM startup boosted code reliability by 40% within two months using Google Jules’ asynchronous pull requests combined with human adversarial review. Early AI flagging caught subtle security vulnerabilities, previously overlooked under tight deadlines.

Another SMB leveraged AWS Kiro’s project decomposition to better organize code reviews, chopping weeks off deployment timelines by clarifying scope and reducing reviewer confusion.

Integrating AI assistants smartly means treating them as powerful teammates, not magic bullets. Gradual rollout, clear roles, and managing AI input volume are your best bets for hitting that sweet spot where AI augments productivity without sacrificing code quality or team sanity. Ready to give your code reviews that AI edge?

Key Features Driving Superior AI Debugging Tools in 2025

AI debugging tools today pack a punch with capabilities that turbocharge developer workflows. Leading AI coding assistants can generate code, answer questions, and automate debugging tasks, which makes them invaluable in modern development environments. At their core, these assistants handle:

- Automatic error detection that spots bugs before they hit production

- Code fix recommendations offering precise, actionable corrections

- Test generation creating reliable test cases to catch future issues

Automating these foundational tasks slashes manual effort and speeds up release cycles.
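Automatic error detection often sits on top of classic static checks. As a non-AI baseline, the sketch below uses Python’s standard `ast` module to flag one well-known bug pattern: mutable default arguments. It shows the kind of rule an assistant might apply or explain, not how any particular product works. It also catches only literal `[]`, `{}`, and `{...}` defaults, not calls like `dict()`.

```python
import ast

MUTABLE_LITERALS = (ast.List, ast.Dict, ast.Set)


def find_mutable_defaults(source: str, filename: str = "<string>"):
    """Return (line, function name) pairs for parameters defaulting to a mutable literal."""
    tree = ast.parse(source, filename=filename)
    findings = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Positional and keyword-only defaults are stored separately;
            # kw_defaults holds None for keyword-only args without a default.
            defaults = node.args.defaults + [d for d in node.args.kw_defaults if d is not None]
            for default in defaults:
                if isinstance(default, MUTABLE_LITERALS):
                    findings.append((default.lineno, node.name))
    return findings


sample = '''
def add_item(item, bucket=[]):
    bucket.append(item)
    return bucket

def safe_add(item, bucket=None):
    bucket = [] if bucket is None else bucket
    bucket.append(item)
    return bucket
'''

for line, name in find_mutable_defaults(sample):
    print(f"line {line}: {name}() uses a mutable default argument")
```

Only `add_item` is flagged. The shared `bucket` list would silently accumulate items across calls. `safe_add` shows the fix an assistant would typically recommend.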

Advanced Functions Powering Deeper Insights

Beyond basics, top AI debuggers now deploy cutting-edge features:

- Adversarial review scanning code for hidden vulnerabilities and weak spots

- Real-time context analysis understanding large codebases to tailor suggestions

- Multi-model support (for example, GPT and Gemini), so teams can match the model to project needs

- Generation of entire functions based on user input or code context, enabling the creation of complete code structures from comments or signatures

These advances help teams unearth complex bugs and boost confidence in code stability.
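Real-time context analysis starts with deciding what the model gets to see. The sketch below shows one simple approach under stated assumptions. It ranks files by how many query identifiers they contain and packs the best matches into a fixed token budget. The 4-characters-per-token estimate and the keyword-overlap score are deliberately naive stand-ins, and production tools use far more sophisticated retrieval. The file names and contents are hypothetical.

```python
import re

IDENT = re.compile(r"[A-Za-z_]\w+")  # identifiers of two or more characters


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token.
    return max(1, len(text) // 4)


def relevance(query: str, text: str) -> int:
    """Count identifiers in the file that also appear in the query (naive score)."""
    terms = set(IDENT.findall(query.lower()))
    return sum(1 for word in IDENT.findall(text.lower()) if word in terms)


def pack_context(query: str, files: dict[str, str], budget_tokens: int) -> list[str]:
    """Greedily pick the most relevant files that fit within the token budget."""
    ranked = sorted(files, key=lambda name: relevance(query, files[name]), reverse=True)
    chosen, used = [], 0
    for name in ranked:
        if relevance(query, files[name]) == 0:
            break  # remaining files share no identifiers with the query
        cost = estimate_tokens(files[name])
        if used + cost <= budget_tokens:
            chosen.append(name)
            used += cost
    return chosen


files = {
    "billing.py": "def charge_invoice(invoice):\n    return invoice.total\n",
    "auth.py": "def login(user, password):\n    ...\n",
    "report.py": "def invoice_report(invoices):\n    return [charge_invoice(i) for i in invoices]\n",
}
print(pack_context("Why does charge_invoice fail?", files, budget_tokens=50))
```

With a generous budget, both files that mention `charge_invoice` are included. With a tight budget, only the first-ranked file fits, and `auth.py` is never considered.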

User Experience That Fits Developer Lifestyles

The smoothness of AI integration shapes how widely these tools get adopted. Look for:

- Ease of integration with popular IDEs like VS Code and JetBrains, and with GitHub

- Command-line integration for developers who prefer terminal workflows

- Asynchronous operation allowing background code updates without interrupting flow

- Customizability to tweak AI behavior according to team preferences and coding style

Such user-focused design lets developers stay in the zone and reduces cognitive overload.

How These Features Improve Efficiency and Accuracy

Combining these elements, AI debugging assistants help:

- Cut debugging cycle times by up to 30% in some reported cases

- Increase code accuracy with fewer missed bugs even in complex, multi-language projects

- Free developers to focus on creative problem-solving rather than repetitive tasks

AWS Kiro’s spec-driven workflow, for example, breaks complex projects down into requirements, design, and tasks, reducing rework and errors ahead of deployment.

What Sets the Leading AI Debuggers Apart?

Top tools stand out through:

- Depth of context awareness in code reviews

- Robustness of adversarial and multi-model techniques

- Seamless IDE and workflow integration

For instance, Google Jules’ asynchronous pull requests with its “critic” feature create safer, developer-validated fixes that don’t disrupt ongoing work.

"Great debugging AI isn’t just smart—it fits so naturally into your workflow you barely notice it’s there."

"The best assistants unlock hours of developer time each week by transforming tedious tasks into automated magic."

"Real-time context and adversarial analysis turn debugging from a guessing game into a clear path forward."

This blend of power and usability is the secret sauce helping SMBs and startups deliver quality software faster, without blowing their budgets.

Your takeaway? Prioritize AI tools that meld strong analysis features with smooth, customizable user experiences to get the most impact on your debugging workflows in 2025.

Choosing the Right AI Assistant for Your Debugging and Code Review Needs

Picking the perfect AI assistant isn’t just about the flashiest features; it’s about fit. Start by checking programming language support, IDE compatibility, and how well the tool meshes with your daily workflow. JetBrains AI Assistant, for example, is built into JetBrains IDEs and offers code generation and intelligent code completion without leaving the editor. An assistant that lives inside your preferred editor, either natively or as an extension, usually streamlines your workflow the most.

Focus on What Fits Your Team’s Tech Stack

Look for AI tools that support the languages you use daily. For example:

- Tabnine offers support for 80+ programming languages, making it a versatile pick if you juggle multiple stacks.

- If you work heavily in Visual Studio Code or JetBrains IDEs, Qodo’s deep integration can save you time.

A mismatch here means wasted effort and frustration.

Balance AI Complexity with Practical Usability

More sophisticated models, like the Gemini 2.5 Pro model behind Google Jules, can analyze huge contexts and spot hidden vulnerabilities, but don’t let that overwhelm your team.

Ask yourself:

- Is the AI’s output easy to interpret?

- Does it offer customization to tone down false positives?

An assistant that’s complicated or spams you with suggestions can slow things down.

Cost vs. ROI: What Startups and SMBs Should Weigh

Budget matters, especially for smaller teams. AI assistants range from free tiers to per-seat subscriptions that can add up to hundreds of dollars a month for a team.

Consider:

- How much time the AI saves on reviews and debugging

- Potential reduction in bug-related downtime (which some estimates put at 15% or more of development time)

- Licensing and onboarding fees

A good AI investment can pay for itself by accelerating delivery and cutting errors.
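The ROI questions above reduce to simple arithmetic. In the minimal sketch below, every input is a hypothetical figure: team size, hours saved, loaded hourly cost, seat price, and amortized onboarding. Replace them with your own measurements from a pilot.

```python
def monthly_roi(devs: int, hours_saved_per_dev: float, hourly_cost: float,
                seat_price: float, onboarding_monthly: float = 0.0) -> float:
    """ROI as a ratio: (value of developer time saved - tool cost) / tool cost."""
    value = devs * hours_saved_per_dev * hourly_cost
    cost = devs * seat_price + onboarding_monthly
    return (value - cost) / cost


def break_even_hours(devs: int, hourly_cost: float, seat_price: float,
                     onboarding_monthly: float = 0.0) -> float:
    """Hours each developer must save per month for the tool to pay for itself."""
    cost = devs * seat_price + onboarding_monthly
    return cost / (devs * hourly_cost)


# Hypothetical team: 5 developers, 4 hours saved each per month, $60/hour,
# $20 per seat, and $200/month of onboarding time amortized over early months.
roi = monthly_roi(5, 4, 60, 20, 200)
print(f"Monthly ROI: {roi:.0%}")                                     # Monthly ROI: 300%
print(f"Break-even: {break_even_hours(5, 60, 20, 200):.1f} h/dev")  # Break-even: 1.0 h/dev
```

The break-even figure is often the more persuasive number. If each developer saves even one hour a month, this hypothetical setup pays for itself.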

Don’t Overlook Vendor Transparency and Support

Trustworthy vendors update models regularly and engage with their communities.

Check:

- Release frequency and responsiveness to bugs

- Availability of documentation and tutorials

- Active forums or support channels

A transparent vendor means fewer surprises and better long-term value.

Tips for Smooth Trial and Adoption

- Start with a pilot project or small team to gauge fit

- Customize AI settings to reduce noise and false alerts

- Train developers on how to interpret AI feedback effectively

- Collect team feedback regularly to tweak integrations

Hands-on experience quickly reveals whether a tool can truly level up your process.

Choosing the right AI assistant means focusing on fit, usability, and ROI—not just features. Think of it like picking a teammate who’s not only smart but easy to work with.

“AI tools work best when they match your workflows and don’t add noise,” says one software lead. Picture your team breezing through code reviews with AI pulling up exactly the right tips, freeing you for the truly creative work.

Start small, measure impact, and scale with confidence—you’ll transform your debugging from a grind into a sprint.

Future Trends: Cutting-Edge AI Features Enhancing Code Review Accuracy

AI-powered code review is evolving fast, with new capabilities reshaping how developers catch bugs and boost code quality. Expect AI coding assistants to integrate more deeply with popular development environments and to cover the entire development lifecycle, from initial creation to review.

Adversarial Critique and Multi-Agent Collaboration

One breakthrough: adversarial critique, where AI actively challenges code to expose hidden flaws or inefficiencies. Picture an AI “devil’s advocate” probing for weak spots before deployment.

Alongside this, multi-agent collaboration uses several AI assistants working together to review, test, and suggest fixes, much like a virtual code review team ensuring no issue slips by.

These approaches aim to raise review precision while easing the burden on human reviewers.
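The core of adversarial critique can be shown without any model. A critic throws edge cases at a candidate implementation and reports every input that crashes it or violates a stated property. In this hypothetical sketch, `average` is the code under review and `in_range` is the property it must satisfy. In multi-agent tools, LLM agents would propose both the code and the probes.

```python
from typing import Callable, Iterable


def average(values):
    # Candidate implementation under review (hypothetical and deliberately naive).
    return sum(values) / len(values)


def in_range(values, result) -> bool:
    """Property: the mean of a non-empty list lies between its min and max."""
    return min(values) <= result <= max(values)


def critic(func: Callable, probes: Iterable, check: Callable) -> list[str]:
    """Run func on each probe and describe every crash or property violation."""
    findings = []
    for probe in probes:
        try:
            result = func(probe)
        except Exception as exc:  # a crash is itself a finding
            findings.append(f"{probe!r}: raised {type(exc).__name__}")
            continue
        if not check(probe, result):
            findings.append(f"{probe!r}: returned {result!r}")
    return findings


edge_cases = [[1, 2, 3], [], [5], [-1, 1], [1e308, 1e308]]
for finding in critic(average, edge_cases, in_range):
    print("critic:", finding)
```

The critic reports two bugs that a quick happy-path review could miss. The empty list crashes, and for the huge values, floating-point overflow turns the sum into `inf`.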

AI in Continuous Integration and Deployment

AI is becoming a core part of CI/CD pipelines, helping teams catch errors early and automate routine debugging during builds.

Key benefits include:

- Real-time vulnerability detection during integration

- Automated rollback or patch recommendations on failed tests

- Seamless syncing of AI suggestions with deployment workflows

This tight integration speeds up development cycles, allowing startups and SMBs to ship code faster without sacrificing quality.
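A common way to wire AI review into CI/CD is a gate step that reads the findings report and fails the build on anything critical. The sketch assumes the assistant writes a JSON report shaped like `[{"severity": ..., "file": ..., "message": ...}]`. That format, the `BLOCKING` severities, and the file name `ai-review.json` are all hypothetical, because each tool defines its own output.

```python
import json
import sys

BLOCKING = {"critical", "high"}  # severities that should stop a merge (a team choice)


def gate(report_json: str) -> int:
    """Return a process exit code: 1 if any blocking finding exists, else 0."""
    findings = json.loads(report_json)
    blocking = [f for f in findings if f.get("severity") in BLOCKING]
    for f in blocking:
        print(f"BLOCKING {f['severity']}: {f['file']}: {f['message']}", file=sys.stderr)
    print(f"{len(blocking)} blocking, {len(findings) - len(blocking)} advisory finding(s)")
    return 1 if blocking else 0


def main(argv: list[str]) -> int:
    """CI entry point, e.g. `python ai_gate.py ai-review.json` (names are hypothetical)."""
    with open(argv[1], encoding="utf-8") as fh:
        return gate(fh.read())


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv))
```

Keeping the blocking set small is the design choice that matters here. Only critical findings stop a merge, and everything else stays advisory, which avoids the reviewer fatigue described earlier.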

Predictive Analytics for Preemptive Bug Catching

Forward-looking AI tools now use predictive analytics to forecast where bugs or vulnerabilities are likely to appear next.

Using historical commit data and code patterns, these models highlight "risky" areas, enabling teams to:

- Prioritize testing on potential hotspots

- Proactively fix issues before they escalate

- Reduce costly post-release patches

Imagine an AI team member spotting trouble spots before you even push code.
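A well-known heuristic behind hotspot prediction combines change frequency (churn) with how often a file appears in bug-fix commits. The sketch below scores files from a list of `(message, files)` commits, such as you might parse from `git log`. The keyword matching, the `fix_weight` of 2.0, and the sample history are illustrative assumptions, not the model any specific tool uses.

```python
import re
from collections import Counter

# Assumption: bug-fix commits can be recognized by keywords in the message.
FIX_PATTERN = re.compile(r"\b(fix|fixes|fixed|bug|hotfix|regression)\b", re.IGNORECASE)


def hotspot_scores(commits, fix_weight=2.0):
    """Score files by churn plus extra weight for each appearance in a bug-fix commit.

    commits: iterable of (message, [paths touched]) tuples.
    """
    churn = Counter()
    fixes = Counter()
    for message, paths in commits:
        is_fix = bool(FIX_PATTERN.search(message))
        for path in set(paths):  # count each file once per commit
            churn[path] += 1
            if is_fix:
                fixes[path] += 1
    scores = {p: churn[p] + fix_weight * fixes[p] for p in churn}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


history = [
    ("Add payment retries", ["payments.py", "config.py"]),
    ("Fix double charge on retry", ["payments.py"]),
    ("Update README", ["README.md"]),
    ("hotfix: null customer id", ["payments.py", "customers.py"]),
    ("Refactor config loading", ["config.py"]),
]
for path, score in hotspot_scores(history)[:3]:
    print(f"{path}: {score:.1f}")
```

`payments.py` tops the list because it changes often and keeps needing fixes. That makes it the natural place to focus extra tests and review attention.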

Holistic Quality Monitoring with AI-Driven Analytics Platforms

Modern code reviews are no longer isolated events—they’re part of broader quality ecosystems powered by AI-driven analytics platforms.

These platforms provide:

- Dashboards tracking code health, test coverage, and technical debt

- Alerts triggered by anomalies spotted across projects

- Actionable insights combining code review metrics with deployment outcomes

This holistic approach enables leaders to maintain consistent code standards at scale.
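An anomaly alert can be as simple as comparing the latest metric with its recent history. The sketch flags a test-coverage reading that falls more than a chosen number of standard deviations below the recent mean. The 2.0 threshold and the sample coverage numbers are assumptions for illustration.

```python
from statistics import mean, stdev


def coverage_alert(history: list[float], latest: float, threshold: float = 2.0):
    """Return an alert string if `latest` is unusually low versus `history`, else None."""
    if len(history) < 2:
        return None  # stdev needs at least two data points
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # Perfectly flat history: any drop at all is worth a look.
        return f"coverage fell below its steady {mu:.1f}%" if latest < mu else None
    z = (latest - mu) / sigma  # standard score of the latest reading
    if z < -threshold:
        return (f"coverage {latest:.1f}% is {abs(z):.1f} standard deviations "
                f"below the recent mean of {mu:.1f}%")
    return None


# Hypothetical coverage percentages from the last five builds.
recent = [81.0, 82.5, 80.8, 81.7, 82.0]
print(coverage_alert(recent, 81.2))  # None: within normal variation
print(coverage_alert(recent, 74.0))  # alert: a drop of roughly ten standard deviations
```

Using a statistical baseline instead of a fixed floor means a normally jittery metric does not page anyone, while a sudden drop does.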

Preparing Teams for the Evolving AI Landscape

Adopting these cutting-edge tools means more than just plugging in software.

Successful teams invest in:

- Ongoing AI training sessions to understand new features and limitations

- Encouraging a culture of experimentation with AI assistants

- Adapting workflows to balance AI automation with human creativity

As AI grows more sophisticated, people remain the crucial link for judgment and context.

Emerging AI features like adversarial critique and predictive analytics are rapidly boosting review accuracy and speeding delivery.

Integrating AI smartly into CI/CD pipelines and analytics platforms is no longer optional—it’s key to staying competitive in 2025.

And while tools evolve, the smartest teams double down on people + AI collaboration to unlock maximum impact. Ready to lead that charge?

Additional Resources

If you’re ready to explore AI coding tools and assistants, there’s a wealth of resources to help you get started and stay ahead:

- Tutorials and Guides: Most leading AI coding tool providers offer tutorials, step-by-step guides, and detailed documentation for integrating their assistants into your development environment.

- Community Forums: Join active forums and discussion groups to connect with other developers, share experiences, and get tips on maximizing the value of AI coding assistants.

- Webinars and Workshops: Attend live or recorded sessions hosted by industry experts and tool vendors, covering everything from basic setup to advanced AI capabilities and best practices.

- Blogs and Research Papers: Stay informed about the latest trends, breakthroughs, and case studies in AI coding tools by following reputable blogs and reading up-to-date research papers.

- Trial Versions and Free Tiers: Experiment with different AI coding assistants using free versions or trial tiers, so you can find the best fit for your team’s workflow before making a commitment.

By tapping into these resources, you can confidently navigate the evolving landscape of AI coding tools, enhance your development process, and unlock new levels of productivity and code quality.

Conclusion

Harnessing AI assistants for code review and debugging in 2025 isn’t just about speeding up tasks—it’s about transforming your development workflow into a smarter, more reliable engine for innovation. These tools carry the grunt work, freeing you and your team to focus on the creative, strategic challenges that truly move your product forward.

To unlock this potential, embrace AI as a collaborative partner rather than a silver bullet. The right assistant fits your tech stack, respects your workflow, and elevates your code quality without overwhelming your team.

Here are key moves to make today:

- Start small with targeted AI automation—focus on repetitive tasks like syntax checks and test generation first

- Choose AI assistants that best align with your language needs and IDE preferences to ensure smooth adoption

- Train your team to critically evaluate AI suggestions, balancing trust with human judgment

- Integrate AI feedback into your existing review and deployment pipelines for seamless collaboration

- Monitor AI impact closely and iterate on configurations to reduce noise and maximize value

Taking these steps sets you up for faster releases, more reliable codebases, and sharper developer focus.

Remember, AI doesn’t replace your expertise—it amplifies it. When AI handles the routine, you get to lead the innovation.

“The future of coding isn’t AI versus human—it’s AI alongside human, unlocking potential neither could achieve alone.”

The next breakthrough in your development cycle is just a smart assistant away. Ready to level up?