The New Rules for AI Aggregators in 2025: What You Must Know

Key Takeaways: The New Rules for AI Aggregators

Navigating AI aggregator regulations in 2025 means embracing compliance as a strategic advantage, not just a hurdle. These actionable insights help startups, SMBs, and enterprises build trusted, scalable AI platforms that stay ahead of evolving rules while accelerating innovation.

- Prioritize risk-based compliance by classifying each AI system by risk tier (the EU AI Act distinguishes prohibited, high-, limited-, and minimal-risk uses) so you can tailor governance and resource allocation under frameworks like the EU AI Act and the UK’s principles.

- Embed privacy-by-design and transparency throughout your platform by minimizing data, anonymizing information, and clearly disclosing AI data sources to boost user trust and regulatory readiness.

- Leverage AI-driven low-code platforms and federated learning to rapidly develop and update compliant aggregation workflows without sacrificing speed or data privacy.

- Implement human oversight protocols to monitor AI outputs and escalate critical issues, fulfilling key regulatory requirements and maintaining ethical accountability.

- Prepare early for mandatory certifications and registrations, including CE marking and registration in the EU database for high-risk AI systems (required for most of them by August 2, 2026), to avoid market access delays and fines.

- Train teams continuously on AI regulations and ethics, ensuring all stakeholders understand compliance roles, latest legal shifts, and the importance of fairness and non-discrimination.

- Build agile compliance playbooks and reporting workflows that adapt to patchwork US state laws and dynamic UK policies, keeping your aggregator platform resilient amid regulatory change.

- Use strategic aggregation techniques—balancing breadth and depth of AI data and applying real-time analytics—to deliver personalized, scalable AI services that differentiate your product in competitive markets.

Master these rules now to turn compliance into your platform’s new competitive edge and build AI solutions that customers and regulators trust alike. Ready to dive deeper? The future of AI aggregation is here, and it’s smart, ethical, and fast.

Introduction

Imagine your AI platform facing a regulatory maze where every wrong turn could cost you millions or freeze your product launch.

By 2025, AI aggregators—those systems blending outputs from multiple AI tools—are navigating strict new rules that aren’t just bureaucratic roadblocks. They’re reshaping how startups, SMBs, and enterprises build, deploy, and scale AI-powered solutions.

If you rely on AI to power smarter marketing, risk analysis, or customer service, understanding these changes isn’t optional—it’s mission-critical.

You’ll discover how evolving regulations from the EU, UK, US, and global bodies emphasize:

- Risk-based compliance tied to your AI’s impact

- The growing role of transparency, data governance, and human oversight

- How to turn compliance into a competitive advantage rather than a hurdle

Plus, we’ll unpack pivotal technologies and strategies helping you stay agile and compliant without slowing innovation. Whether you’re a startup aiming to launch fast or an enterprise managing complex AI workflows, these insights help you act confidently in a shifting landscape.

The countdown to full enforcement is on, and early movers will not only avoid fines but also build trusted, resilient platforms your users and regulators will respect.

Next up, we’ll break down the core rules shaping AI aggregation—and what they mean for your business’s future-proofing and growth.

What is Artificial Intelligence?

Artificial Intelligence (AI) is the science of creating computer systems that can perform tasks traditionally requiring human intelligence: learning, reasoning, problem-solving, and even understanding language or recognizing images. Since John McCarthy coined the term in his proposal for the 1956 Dartmouth workshop, AI has rapidly evolved, reshaping how we live, work, and interact with technology.

At the heart of AI are data collection and data aggregation. AI models depend on massive volumes of raw data—from web pages, social media, sensors, and more—to learn patterns, make predictions, and deliver actionable insights. The data aggregation process involves gathering, cleaning, and combining data from multiple sources to extract specific data points that fuel smarter, more accurate AI systems.

Data quality is critical. Issues like missing values, duplicate entries, and inconsistent formats can undermine data accuracy and the effectiveness of AI models. That’s why enhancing data quality—by cleaning, validating, and standardizing data—is a top priority for any data scientist or AI developer. Automated tools and platforms like Google Cloud help streamline these processes, reducing operational costs and enabling real-time processing of large datasets.
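To make those cleaning steps concrete, here is a minimal Python sketch that handles the three issues named above: missing values, duplicate entries, and inconsistent formats. The field names, the accepted date formats, and the keep-first deduplication rule are illustrative assumptions, not a prescribed pipeline.

```python
from datetime import datetime

# Hypothetical schema: every record must carry these fields.
REQUIRED = ("email", "country", "signup_date")
# Date formats we accept from upstream sources (assumed, not exhaustive).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(value):
    """Return an ISO-8601 date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean(records):
    seen = set()
    cleaned = []
    for rec in records:
        # Missing values: drop records lacking any required field.
        if any(not rec.get(name) for name in REQUIRED):
            continue
        row = {
            "email": rec["email"].strip().lower(),      # standardize case/whitespace
            "country": rec["country"].strip().upper(),  # "de" -> "DE"
            "signup_date": parse_date(rec["signup_date"]),
        }
        # Inconsistent formats: reject dates we cannot parse.
        if row["signup_date"] is None:
            continue
        # Duplicates: keep only the first record per normalized email.
        if row["email"] in seen:
            continue
        seen.add(row["email"])
        cleaned.append(row)
    return cleaned


raw = [
    {"email": "Ana@Example.com ", "country": "de", "signup_date": "2024-05-01"},
    {"email": "ana@example.com", "country": "DE", "signup_date": "01/05/2024"},
    {"email": "bo@example.com", "country": "", "signup_date": "2024-06-01"},
    {"email": "cy@example.com", "country": "fr", "signup_date": "June 1"},
]
print(clean(raw))
```

Records that fail validation are simply dropped here; a production pipeline would usually quarantine and log them instead, so data quality problems stay visible.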

A key area of AI is Natural Language Processing (NLP), which allows machines to understand and generate human language. NLP powers everything from chatbots and virtual assistants to advanced search engines, meeting rising user expectations for seamless, intuitive digital experiences.

Web scraping is another essential technique, enabling AI aggregators and agents to collect up-to-date information from across the web. Organizations should still respect site terms and robots.txt directives, protect user privacy, and avoid collecting sensitive or personal information without a lawful basis.

Predictive analytics uses historical data to forecast future trends, helping businesses make data-driven decisions and gain a competitive advantage. As more data is generated every day, the ability to process and analyze it at large scale—and in real time—has become a defining feature of successful AI-driven organizations.

Application Programming Interfaces (APIs) connect different systems, making it easier to integrate diverse data sources and build robust AI solutions. AI aggregators and AI agents leverage these connections to deliver strategic insights from across the digital landscape, supporting everything from personalized recommendations to risk analysis.
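A minimal sketch of that idea: an aggregator that sends one prompt to several providers through a common function signature, queries them concurrently, and records failures instead of crashing. The provider functions are hypothetical stand-ins for real vendor SDK calls, and the 10-second timeout is an arbitrary choice.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Every provider is wrapped to the same shape: prompt in, text out.
Provider = Callable[[str], str]


def aggregate(prompt: str, providers: Dict[str, Provider]) -> Dict[str, dict]:
    """Query all providers concurrently; one failure does not sink the rest."""
    results: Dict[str, dict] = {}
    with ThreadPoolExecutor(max_workers=max(1, len(providers))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in providers.items()}
        for name, fut in futures.items():
            try:
                results[name] = {"ok": True, "output": fut.result(timeout=10)}
            except Exception as exc:  # keep the error for logs/audits instead of crashing
                results[name] = {"ok": False, "error": repr(exc)}
    return results


# Hypothetical stand-ins for real vendor SDK calls.
def echo_provider(prompt: str) -> str:
    return f"echo: {prompt}"


def upper_provider(prompt: str) -> str:
    return prompt.upper()


def broken_provider(prompt: str) -> str:
    raise RuntimeError("upstream timeout")


out = aggregate("summarize q3 risks", {
    "echo": echo_provider,
    "upper": upper_provider,
    "broken": broken_provider,
})
```

Keeping a per-provider `ok` flag means downstream logic, and auditors, can see exactly which sources contributed to a given result.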

The future of AI is being shaped by rapid advances in machine learning, deep learning, and new technologies that demand ever-greater compute power. As the digital landscape evolves, so do the challenges—protecting sensitive data, ensuring data integrity, and adapting to shifting regulations and industry trends. Organizations that master the building blocks of AI—data, algorithms, and infrastructure—will be best positioned to unlock the full potential of artificial intelligence and stay ahead in a fast-changing world.

In short, AI is not just about smart machines—it’s about harnessing the power of data aggregation and AI models to drive innovation, efficiency, and growth in the digital age.

Understanding the New Regulatory Landscape for AI Aggregators in 2025

AI aggregators act like digital conductors, harmonizing data and outputs from multiple AI sources into one seamless experience.

Their role? Powering everything from personalized marketing to risk scoring for startups, SMBs, and enterprises.

Dominant platforms play an outsized role here: their practices often become de facto industry standards for AI aggregators, shaping both competition and how compliance is done across the sector.

Why You Can’t Ignore the New Rules

Recent regulatory updates affect virtually every AI aggregator, and they hit hardest if you process or distribute high-risk AI outputs.

These changes affect:

- Startups and SMBs relying on third-party AI tech

- Enterprises integrating AI to innovate or stay competitive

- Developers partnering on AI-driven, low-code platforms

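Ignoring compliance now risks hefty fines and stalled product launches. Under the EU AI Act, for example, penalties for prohibited practices can reach €35 million or 7% of worldwide annual turnover, whichever is higher.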

Who’s Shaping These Rules?

The global picture is a patchwork, but four heavyweights lead the charge:

- European Union (EU): Implementing the AI Act with strict risk-based rules

- United Kingdom (UK): Favoring pro-innovation yet principle-driven regulation

- United States (US): Federal deregulation plus increasing state-level laws

- International bodies: for example, the Council of Europe, which is pushing human-rights-focused AI frameworks

Breaking Down Risk-Based Regulation

Regulators are laser-focused on risk categorization, where AI systems are grouped and regulated by hazard level.

For aggregators, this means:

- High-risk AI systems (e.g., credit scoring, AI in medical devices) face tough obligations: data governance, transparency, and human oversight. Regulations may also require specific controls, documentation, or transparency about the underlying AI models that aggregation platforms use.

- Low- and medium-risk AI still requires care, but with lighter obligations, often centered on transparency (for example, telling users they are interacting with an AI system).

This risk stamp largely dictates your compliance path and resource allocation.
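As an illustration, a tier lookup might look like the Python sketch below. The tiers loosely follow the EU AI Act’s categories (prohibited, high, limited, minimal); the use-case names are a hypothetical, incomplete sample rather than the legal Annex III list. Two choices are our own assumptions: an aggregator takes the strictest tier of any component, and unreviewed use cases default to high risk until someone classifies them.

```python
from enum import Enum


class Tier(Enum):
    MINIMAL = 1
    LIMITED = 2      # transparency duties, e.g. disclosing a chatbot
    HIGH = 3         # data governance, documentation, human oversight
    PROHIBITED = 4   # may not be offered at all


# Hypothetical, incomplete mapping; real classification needs legal review.
USE_CASE_TIERS = {
    "spam_filter": Tier.MINIMAL,
    "customer_chatbot": Tier.LIMITED,
    "credit_scoring": Tier.HIGH,
    "recruitment_screening": Tier.HIGH,
    "social_scoring": Tier.PROHIBITED,
}


def classify(component_use_cases):
    """Return the strictest tier across an aggregator's components.

    Unknown use cases are treated as HIGH until someone reviews them.
    """
    tiers = [USE_CASE_TIERS.get(u, Tier.HIGH) for u in component_use_cases]
    return max(tiers, key=lambda t: t.value, default=Tier.MINIMAL)


print(classify(["spam_filter", "customer_chatbot", "credit_scoring"]))
```

Defaulting unknown use cases to HIGH errs on the side of caution: a new integration cannot silently slip into a lighter compliance path.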

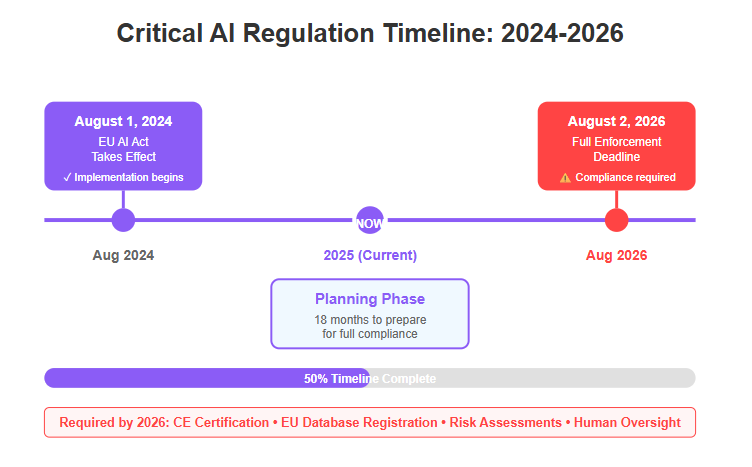

Mark Your Calendar: Critical Enforcement Dates

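- The EU AI Act entered into force on August 1, 2024

- Bans on prohibited practices apply from February 2, 2025, and rules for general-purpose AI models from August 2, 2025

- Most high-risk obligations apply from August 2, 2026 (high-risk AI embedded in products covered by Annex I legislation follows on August 2, 2027): your countdown to strict compliance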

- UK and US frameworks evolve dynamically; staying updated isn’t optional
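One way to keep these dates in front of the team is a small lookup that reports which EU AI Act milestones already apply and how many days remain until the next ones. The dates below reflect the Act’s staged application as we read Article 113 of Regulation (EU) 2024/1689; confirm them against the official text before relying on them.

```python
from datetime import date

# Staged application dates of Regulation (EU) 2024/1689, as we read Article 113.
EU_AI_ACT_MILESTONES = [
    (date(2024, 8, 1), "Regulation enters into force"),
    (date(2025, 2, 2), "Prohibited practices banned; AI literacy duties apply"),
    (date(2025, 8, 2), "Obligations for general-purpose AI models apply"),
    (date(2026, 8, 2), "Most remaining rules apply, incl. Annex III high-risk systems"),
    (date(2027, 8, 2), "High-risk rules for Annex I regulated products apply"),
]


def status(on: date):
    """Split milestones into those already applicable and those still ahead."""
    applied = [label for when, label in EU_AI_ACT_MILESTONES if when <= on]
    upcoming = [(when, label, (when - on).days)
                for when, label in EU_AI_ACT_MILESTONES if when > on]
    return applied, upcoming


applied, upcoming = status(date(2025, 9, 1))
for when, label, days_left in upcoming:
    print(f"{when.isoformat()}  in {days_left:>4} days  {label}")
```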

Business Model Impact and Opportunity

Evolving rules don’t just constrain—they reshape aggregator strategies:

- Shift from raw data hoarding to transparent, ethical aggregation

- Innovation accelerates around low-code, privacy-first AI development tools

- Compliance becomes a competitive edge—not just a checkbox

When platforms are trusted and compliant, they attract more users and partners, amplifying network effects—each new participant increases the value and insights available, creating a self-reinforcing cycle of growth.

Picture this: startups using compliant AI aggregation navigate risk confidently and launch faster, while laggards scramble under legal pressure.

Understanding this new regulatory maze is your first move in turning compliance into a strategic advantage for 2025 and beyond.

As one insider put it: “Regulations aren’t roadblocks; they’re the new map for smarter, safer AI innovation.”

Ready to make your aggregator platform a beacon of trust and compliance? The clock is ticking.

Navigating Regional Regulatory Frameworks: Compliance Essentials for AI Aggregators

European Union: The AI Act and Its Impact

The AI Act (Regulation (EU) 2024/1689) sets a harmonized, risk-based standard across all 27 EU member states, and it reaches AI aggregators that place AI systems on the EU market or whose AI outputs are used in the EU.

Key obligations include:

- Managing risks for high-risk AI systems used within aggregation platforms

- Ensuring data governance, transparency, and human oversight throughout AI lifecycles

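- Completing conformity assessments and affixing CE marking before a high-risk system goes to market

- Registering high-risk AI systems in the EU database to enable traceability and accountability

- Avoiding prohibited practices, such as social scoring and real-time remote biometric identification in publicly accessible spaces for law enforcement (outside narrow exceptions), which have been banned since February 2, 2025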

The EU AI Act also sets specific obligations for providers of general-purpose AI (GPAI) models, including large language models, applying from August 2, 2025. AI aggregators increasingly rely on these models to process and extract insights from unstructured data like press releases and news.

With most high-risk obligations applying from August 2, 2026, aggregation platforms need to start preparing now to meet these strict standards without slowing innovation.

“The EU’s AI Act demands not just compliance, but a proactive approach to transparency and safety.”

United Kingdom: Pro-Innovation and Principle-Based Regulation

The UK opts for a flexible, sector-specific framework built on five guiding principles:

- Safety, security, and robustness

- Appropriate transparency and explainability

- Fairness

- Accountability and governance

- Contestability and redress

AI aggregators should embed these principles by:

- Designing clear user disclosures

- Establishing robust human oversight

- Conducting regular risk assessments

Keep an eye on possible future updates, as the UK may introduce its own AI Act inspired by evolving international rules.

"In the UK, staying agile and principled beats a one-size-fits-all approach."

United States: Federal Deregulation vs. State-Level Intervention

The US landscape is a patchwork:

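- The 2023 federal executive order on AI (EO 14110) was revoked in January 2025, signaling a hands-off, pro-innovation stance

- Several states impose stringent AI requirements, such as:

  - Utah’s Artificial Intelligence Policy Act, which requires disclosure of generative AI use in certain consumer interactions

  - Tennessee’s ELVIS Act, which protects individuals’ voices and likenesses against unauthorized AI imitation, including voice clones and deepfakes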

Navigating this fragmented environment means prioritizing:

- Compliance agility to adjust per state

- Robust risk mitigation and documentation processes
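A hypothetical sketch of compliance agility per state: a rulebook keyed by state that returns only the obligations triggered by the features you actually ship. The entries are simplified paraphrases of the Utah and Tennessee laws mentioned above, the rule and feature names are invented for illustration, and a state missing from the table does not mean it has no AI rules.

```python
# Simplified, hypothetical rulebook: rule names paraphrase the state laws
# mentioned above and are NOT legal advice or a complete list.
STATE_RULES = {
    "UT": {"disclose_generative_ai"},        # Utah AI Policy Act (paraphrased)
    "TN": {"no_unauthorized_voice_clone"},   # Tennessee ELVIS Act (paraphrased)
}

# Which product feature triggers each rule (hypothetical feature flags).
FEATURE_TRIGGERS = {
    "disclose_generative_ai": "genai_chat",
    "no_unauthorized_voice_clone": "voice_synthesis",
}


def obligations(states, features):
    """Map each triggered rule to the set of states that impose it."""
    result = {}
    for state in states:
        for rule in STATE_RULES.get(state, set()):
            if FEATURE_TRIGGERS[rule] in features:
                result.setdefault(rule, set()).add(state)
    return result


print(obligations(["UT", "TN", "CA"], {"genai_chat"}))
```

Because the rules live in data rather than code, adding a new state law means editing the table, which is the kind of agility a patchwork landscape rewards.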

"In the US, compliance means playing by many different rulebooks at once."

Global and Multilateral Frameworks Influencing Aggregators

The Council of Europe’s Framework Convention on Artificial Intelligence and Human Rights, Democracy and the Rule of Law, opened for signature in September 2024, is setting new global norms emphasizing:

- Protection of human rights and democratic values in AI use

- Cross-border cooperation on ethical AI governance

- Legally binding commitments for ratifying states, including protection of privacy and personal data across the AI lifecycle

AI aggregators working internationally must embed these ethical and legal considerations to stay competitive and trustworthy.

"Global AI policy is shifting from optional guidelines to enforceable commitments."

Understanding these evolving regional regulations is crucial. AI aggregators must actively build compliance frameworks that fit diverse markets, blending transparency, accountability, and innovation to thrive in 2025 and beyond.

Unlocking Strategic Advantages: Data Aggregation Techniques and Technologies for AI Aggregators in 2025

Strategic Aggregation Approaches Driving Competitive Edge

At its core, AI aggregation combines data integration, enrichment, and real-time analytics to turn scattered information into actionable insights.

Startups and SMBs often wrestle with either too much data from diverse sources or overly narrow datasets. The sweet spot?

- Balancing breadth vs. depth of AI data to maximize both coverage and relevance.

- Enriching aggregated data through cross-referencing multiple AI outputs for sharper insights.

- Using real-time analytics to personalize services and boost operational efficiency.
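Cross-referencing multiple AI outputs can be as simple as a consensus check. In this minimal sketch, several models label the same item, the majority label wins, and low agreement flags the item for review. The model names and the 0.6 agreement threshold are illustrative assumptions.

```python
from collections import Counter


def cross_reference(answers, min_agreement=0.6):
    """answers: {model_name: label}. Returns the consensus label and agreement."""
    if not answers:
        raise ValueError("need at least one model answer")
    # Normalize labels so "Positive" and "positive " count as the same vote.
    votes = Counter(a.strip().lower() for a in answers.values())
    label, count = votes.most_common(1)[0]
    agreement = count / len(answers)
    return {
        "label": label,
        "agreement": round(agreement, 2),
        "needs_review": agreement < min_agreement,  # weak consensus -> flag it
    }


result = cross_reference({
    "model_a": "Positive",
    "model_b": "positive",
    "model_c": "negative",
})
print(result)
```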

Imagine your platform as a smart assistant that constantly learns from multiple inputs. By analyzing user behavior, it can fine-tune product recommendations and automate customer support without losing the personal touch, keeping each interaction relevant and efficient.

This intelligent aggregation isn’t just about data volume—it’s the backbone of scalability and continuous innovation, letting growing businesses unlock value without ballooning costs.

“Think of aggregation like assembling the ultimate playlist—too few songs and it’s dull; too many and it’s chaos. Get the mix just right, and you’ve got magic.”

Five Transformative Technologies Powering AI Aggregators

AI aggregators in 2025 thrive on a core set of innovative tech pushing boundaries:

- Federated learning: enables aggregators to train AI models across decentralized data without centralizing raw records.

- Advanced natural language processing (NLP): powers sophisticated understanding of unstructured data and user intent.

- Edge AI: moves computation closer to data sources, reducing latency and boosting responsiveness.

- AI-driven low-code platforms: accelerate development cycles by enabling rapid, flexible integration of new AI components.

- Cloud-native infrastructure: supports efficient, scalable, and compliant aggregation at enterprise-grade levels.

Traditional data curation and enrichment is often manual, static, and slow to adapt. These AI-driven technologies replace much of that work with automated, intelligent processes, letting organizations respond quickly to changing data and regulatory needs.

These tools are game-changers because they let companies iterate faster while keeping pace with evolving regulations. For example, federated learning keeps raw records on local servers or devices while still improving model accuracy across distributed sources, which matters in sectors like healthcare and finance.
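The core of federated learning is federated averaging (FedAvg): clients train locally and send only model parameters plus their sample counts, and the server computes a sample-weighted average. The sketch below uses plain Python lists in place of real model tensors and omits local training and networking. Shared weights can still leak information, so production systems typically add secure aggregation or differential privacy on top.

```python
def fed_avg(client_updates):
    """Federated averaging over (weights, num_samples) pairs.

    Each client's parameters count in proportion to its local sample size.
    Plain lists stand in for real model tensors.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two hypothetical hospitals; only weights and counts leave each site.
global_weights = fed_avg([
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
])
print(global_weights)
```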

Think of it as the difference between ordering a custom suit versus buying off the rack—the right tools let your AI aggregation platform be tailor-made, responsive, and ready to grow.

“Low-code AI is the secret sauce for startups wanting full power without the full dev headache.”

“Federated learning is privacy’s best friend—powerful without peeking.”

By mastering both strategic aggregation techniques and the enabling technologies of 2025, AI aggregators position themselves not just to survive—but to thrive in complex markets.

Every aggregator aiming for the next level should prioritize blending smart data strategies with these technologies to unlock maximum value, innovation, and compliance ease.

Mastering Data Privacy and Ethical Standards for AI Aggregators

Best Practices for Data Privacy Compliance

Data privacy isn’t optional—it’s foundational for AI aggregators juggling vast, diverse datasets. Start by embedding privacy-by-design and privacy-by-default—meaning privacy features are baked into every stage of your platform’s architecture, not tacked on later.

Focus on key principles like:

- Data minimization: Collect only what’s strictly necessary.

- Anonymization: Strip identifiers to shield individual identities.

- Consent mechanisms: Transparently get user permission for data usage.

Practical steps include encrypting sensitive info, limiting access through role-based controls, and routinely auditing your data flows. Balancing innovation with tough privacy rules isn’t just compliance; it builds lasting user trust.
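Data minimization and identifier stripping can be sketched in a few lines. This example keeps only allowlisted fields and replaces the user ID with a keyed HMAC-SHA256 token; the field names and key handling are hypothetical. Under GDPR, keyed hashing counts as pseudonymization rather than anonymization: the output remains personal data while the key exists.

```python
import hashlib
import hmac

# Collect only what the feature needs (hypothetical allowlist).
ALLOWED_FIELDS = {"user_id", "country", "plan"}


def minimize(record: dict) -> dict:
    """Drop every field that is not on the allowlist."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def pseudonymize(record: dict, key: bytes) -> dict:
    """Replace the user ID with a stable keyed token (HMAC-SHA256)."""
    out = dict(record)
    token = hmac.new(key, str(record["user_id"]).encode(), hashlib.sha256).hexdigest()
    out["user_id"] = token[:16]
    return out


# Placeholder key: in production, load it from a secrets manager.
key = b"demo-key-do-not-use"
raw = {"user_id": 42, "email": "ana@example.com", "country": "DE",
       "plan": "pro", "ip": "203.0.113.7"}
safe = pseudonymize(minimize(raw), key)
print(safe)
```

A keyed HMAC is used instead of a plain hash because low-entropy IDs can be recovered from an unkeyed hash by brute force.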

"Privacy is less of a checkbox and more of a culture you nurture."

The Role of Transparency in Building Trust and Compliance

Transparency is your secret weapon in a crowded AI landscape. Clearly disclosing where data comes from, how models crunch that data, and the logic behind decisions creates a competitive edge beyond regulation.

Here’s what works best:

- Publish clear documentation for AI data sources and decision pathways.

- Offer user-accessible explanations for how aggregated insights are generated.

- Keep the business information your platform displays accurate and consistent across all online channels, reducing the risk of spreading misinformation.

- Use transparency as a shield against both regulatory scrutiny and public skepticism.

Picture this: a user checks a platform dashboard to see exactly which datasets fuel their AI insights. That visibility sparks confidence and reduces friction if audits hit.

“When you show your work, trust follows naturally.”

“Transparency nails down both compliance and customer loyalty.”

Cutting-Edge AI Ethics Frameworks and Aggregator Practices

Ethics go hand-in-hand with compliance but extend into your platform’s DNA. Leading principles shaping aggregator behavior include:

- Fairness: Avoiding discriminatory outcomes.

- Accountability: Keeping humans in the loop for oversight.

- Non-discrimination: Ensuring equitable treatment across data inputs.

Integrate these principles directly into product development and data governance cycles. Combatting bias isn’t a one-off—it requires continuous monitoring and adjustment.
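Continuous bias monitoring needs a metric to track. One common choice is the demographic parity difference: the gap between the highest and lowest positive-outcome rates across groups. The sketch computes it over a batch of decisions; the group labels and the 0.1 alert threshold are illustrative assumptions, and parity is only one of several fairness definitions, which can conflict with each other.

```python
from collections import defaultdict


def positive_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(decisions):
    """Demographic parity difference: max minus min approval rate."""
    rates = positive_rates(decisions)
    return max(rates.values()) - min(rates.values())


ALERT_THRESHOLD = 0.1  # illustrative; set per use case with legal/ethics input

batch = [("A", True), ("A", True), ("A", False), ("A", True),
         ("B", True), ("B", False), ("B", False), ("B", False)]
gap = parity_gap(batch)
if gap > ALERT_THRESHOLD:
    print(f"Bias alert: approval-rate gap of {gap:.2f} across groups")
```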

By embedding ethics, you’re not just avoiding pitfalls—you create long-term strategic advantages, setting a positive industry standard and attracting clients who prioritize responsible AI.

"Ethics isn’t a speed bump; it’s a fast lane to sustainable innovation."

Data privacy and ethics are no longer back-office concerns for AI aggregators. They’re core strategic assets that empower you to innovate boldly while winning trust in a complex, evolving regulatory world.

Focus on privacy-first design, radical transparency, and proactive ethical stewardship to future-proof your platform and partnerships.

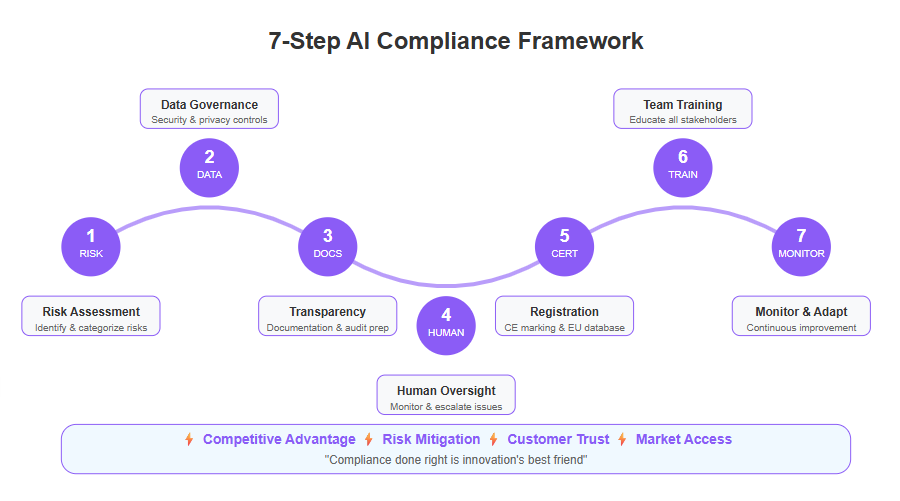

Seven Critical Compliance Steps for AI Aggregators: A Practical Framework

Navigating AI regulations in 2025 means treating compliance as a full-spectrum challenge—not just ticking boxes but embedding real controls into your product lifecycle.

Robust compliance frameworks must include processes for detecting and managing malformed data to maintain data integrity and reliability.

Start by viewing compliance as a roadmap crossing regulatory, ethical, and operational boundaries. Here’s your seven-step framework designed to meet today’s complex demands while keeping your innovation engine humming.

Step 1: Conduct Comprehensive Risk Assessments

Identify and categorize risks aligned with the EU AI Act, UK principles, or state laws like Utah’s Artificial Intelligence Policy Act.

- Map AI components against high-risk classifications.

- Assess impact areas including privacy, bias, and security.

Think of this as auditing your AI ecosystem’s health with clinical precision to avoid surprises later.

Step 2: Implement Robust Data Governance and Security Controls

Protect aggregated data with:

- Encryption, access controls, and anonymization. When onboarding data, start with a well-understood source and add monitoring and error handling before scaling up, so quality and security issues surface early.

- Data minimization aligned with GDPR and similar laws.

Privacy breaches are expensive: serious GDPR violations can draw fines of up to €20 million or 4% of worldwide annual turnover, whichever is higher, on top of lost trust. Locking down sensitive info upfront saves headaches.
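Role-based access control is easiest to reason about as an explicit table of roles and permissions that denies anything unknown by default. The roles, permission strings, and record shape below are hypothetical; in practice this check usually also lives in your data layer or identity and access management service.

```python
# Hypothetical roles and permission strings ("dpo" = data protection officer).
ROLE_PERMISSIONS = {
    "analyst": {"read:aggregates"},
    "support": {"read:aggregates", "read:contact"},
    "dpo": {"read:aggregates", "read:contact", "export:raw"},
}


def can(role: str, permission: str) -> bool:
    """Deny by default: unknown roles or permissions get nothing."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def read_contact(role: str, record: dict) -> dict:
    if not can(role, "read:contact"):
        raise PermissionError(f"{role!r} may not read contact details")
    return {"email": record["email"]}


print(can("analyst", "read:contact"), can("support", "read:contact"))
```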

Step 3: Ensure Transparency and Documentation for Audit Readiness

Create clear records on:

- AI data sources and model logic.

- Decision-making processes.

Transparency is non-negotiable; it’s what regulators and users demand before they trust your platform. Document everything so audits don’t feel like blood sports.
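Audit readiness is easier when every aggregated decision leaves a record of its data sources, model version, and output. This sketch keeps an append-only log where each entry includes the previous entry’s SHA-256 hash, so later edits are detectable. The field names and the hash-chaining design are our assumptions, not a format any regulator prescribes, and a real system would persist entries to durable storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # sort_keys makes the JSON, and therefore the hash, deterministic.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class DecisionLog:
    """Append-only, hash-chained record of aggregated decisions."""

    def __init__(self):
        self.entries = []

    def record(self, sources, model_version, output):
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "sources": sorted(sources),
            "model_version": model_version,
            "output": output,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


log = DecisionLog()
log.record(["crm_export_v3", "news_feed"], "scorer-1.4", {"risk": "medium"})
log.record(["crm_export_v3"], "scorer-1.4", {"risk": "low"})
print(log.verify())
```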

Step 4: Establish Human Oversight and Escalation Protocols

Keep humans in the loop to:

- Monitor AI actions and intervene on flagged results.

- Escalate critical issues quickly.

This combination balances AI speed with responsible control and supports the EU AI Act’s human oversight requirements for high-risk systems (Article 14).
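Escalation rules work best when they are explicit. In this minimal sketch, high-impact outputs always get human review, and high-impact outputs below a confidence threshold are escalated further. The 0.9 threshold and the route names are hypothetical and would be tuned per use case.

```python
from dataclasses import dataclass


@dataclass
class Output:
    item_id: str
    confidence: float   # model's confidence score in [0, 1]
    high_impact: bool   # e.g. affects credit, health, or employment


def route(out: Output, auto_threshold: float = 0.9) -> str:
    """Decide who handles an AI output before it reaches a user."""
    if out.high_impact and out.confidence < auto_threshold:
        return "escalate"        # uncertain AND consequential: senior reviewer
    if out.high_impact or out.confidence < auto_threshold:
        return "human_review"    # either condition alone: standard review queue
    return "auto_approve"


for o in [Output("a", 0.97, False), Output("b", 0.70, False),
          Output("c", 0.95, True), Output("d", 0.50, True)]:
    print(o.item_id, route(o))
```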

Step 5: Prepare and Submit Required Registrations and Certifications

Comply with formalities like:

- EU database registration and CE marking for high-risk systems, which most of them need by August 2, 2026.

- Any UK or US state-specific filings.

Ignoring these deadlines can block market access or trigger penalties—build submissions into your roadmap early.

Step 6: Train Teams on Compliance and Governance Updates

Equip everyone who touches your AI—from engineers to sales—with:

- Clear compliance responsibilities.

- Updates on shifting regulations.

Regular, accessible training prevents costly slip-ups and empowers your crew to "own it."

Step 7: Continuously Monitor, Report, and Adapt

Create workflows for:

- Ongoing regulatory scanning.

- Internal reporting and proactive adjustments.

The regulatory landscape fluctuates fast—staying agile ensures you’re never caught off guard.

Practical tools and low-code workflows help automate many of these steps without killing agility.

Picture this: your team runs a compliance dashboard that flags risk hotspots instantly, freeing you to focus on new product features—not chasing paperwork.

Sharpening your compliance game isn’t just about avoiding fines. It’s a competitive advantage that builds trust and scales confidently.

Remember: “Compliance done right is innovation’s best friend.” Take action now to build a resilient, future-proof AI aggregator platform.

Preparing for the Future: Scaling AI Aggregators with Agility and Responsibility

Scaling AI aggregators in 2025 means mastering rapid change in regulations and technology—and turning those challenges into competitive advantages. As more users join AI aggregator platforms, the system's ability to deliver valuable and accurate insights improves, reinforcing the platform's competitive advantage.

Start by adopting a mindset that values compliance, curiosity, and accountability across your teams. This culture ensures your product and business groups don’t just check boxes, but actively anticipate risks and opportunities.

Embedding Agility through AI-First, Low-Code Solutions

Low-code platforms powered by AI let you accelerate development cycles while baking compliance into each step. Instead of building from scratch, you’re using scalable frameworks that adapt quickly when regulations shift.

Here’s what to focus on:

- Use AI-driven automation to speed up regulatory reporting and documentation

- Design platforms with privacy-by-design and transparency baked in

- Continuously update workflows to reflect new legal requirements without interrupting service

Imagine building your product like a city expanding with modular districts — when a new law kicks in, you swap out just a few blocks instead of rebuilding the entire system.

Balancing Fast Innovation with Ethical, Customer-First Principles

Innovation wins only if your customers trust you. Prioritize ethics by:

- Ensuring clear data use disclosures at every touchpoint

- Maintaining human oversight where AI decisions affect people’s lives

- Avoiding shortcuts that compromise fairness or privacy, even under time pressure

For instance, if you aggregate user data for personalization, always give users a choice and explain how their info improves their experience. AI aggregators must also be able to understand context within unstructured data to ensure recommendations are accurate, fair, and relevant.
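Here is a minimal sketch of that consent-first pattern: user history drives recommendations only when the user has opted in to a "personalization" purpose, and every response carries a plain-language explanation. The purpose name, data shapes, and fallback to popular items are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    user_id: str
    consents: set = field(default_factory=set)    # e.g. {"personalization"}
    history: list = field(default_factory=list)   # (item, category) pairs viewed


def recommend(user: User, catalog: list, k: int = 3) -> dict:
    """catalog: (item, category) pairs, assumed sorted by popularity."""
    if "personalization" in user.consents and user.history:
        liked = {category for _, category in user.history}
        picks = [item for item, category in catalog if category in liked][:k]
        why = "Because you viewed items in: " + ", ".join(sorted(liked))
    else:
        # No consent (or no history): fall back to non-personalized results.
        picks = [item for item, _ in catalog][:k]
        why = "Popular items; personalization is off."
    return {"items": picks, "why": why}


catalog = [("tent", "outdoor"), ("novel", "books"),
           ("stove", "outdoor"), ("atlas", "books")]
opted_in = User("u1", consents={"personalization"}, history=[("lamp", "outdoor")])
opted_out = User("u2", history=[("lamp", "outdoor")])
print(recommend(opted_in, catalog))
print(recommend(opted_out, catalog))
```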

Building Resilience for Tomorrow’s Uncertainties

Regulatory landscapes will keep shifting: think of most EU AI Act high-risk obligations applying from August 2026, or new US state laws popping up.

Stay ahead by:

- Monitoring policies in all your key markets regularly

- Creating a flexible compliance playbook that evolves with legal frameworks

- Training teams on emerging risks and compliance innovations

Open, transparent communication with regulators, partners, and users is your secret weapon. It builds trust and paves the way for smoother audits or policy changes.

Imagine your aggregator as a ship navigating choppy waters—flexibility and a loyal crew make all the difference when storms hit.

Scaling smart means being ready to pivot fast without losing sight of integrity or customer trust.

Quotable takeaways:

- "Embedding compliance into your AI platform isn’t a cost — it’s a growth enabler."

- "Build modular, low-code AI products that flex with new laws—don’t rebuild from the ground up."

- "Trust grows when transparency and ethics lead your innovation roadmap."

Keeping these strategies front and center turns 2025’s regulatory hurdles into stepping stones for long-term success.

Conclusion

Navigating the shifting landscape of AI aggregation regulations in 2025 is more than a compliance exercise—it’s a chance to turn complexity into competitive advantage. By embracing transparency, ethical design, and strategic technology, you position your AI aggregator not just as a rule-follower, but as a trusted innovation leader.

Staying ahead means combining risk-aware strategies with flexible, AI-powered low-code platforms that adapt alongside evolving laws. This approach frees you to accelerate development without sacrificing compliance or customer trust.

Here are the key moves to make now:

- Implement comprehensive risk assessments to classify and manage AI system hazards early.

- Embed privacy-by-design and transparent data governance across every stage of your platform.

- Establish strong human oversight protocols to keep AI outputs accountable and fair.

- Leverage AI-driven low-code tools to streamline compliance workflows and accelerate innovation.

- Stay vigilant on evolving regulations with ongoing monitoring and team training.

The next step is clear: audit your current AI aggregation practices today to pinpoint gaps and prioritize improvements. Build compliance into your product roadmap, not as a chore but as a strategic asset that builds trust and unlocks new market opportunities.

Remember, regulations aren’t roadblocks—they’re a map for smarter, safer AI development. By owning compliance now, you create a foundation for sustainable growth, resilience, and customer confidence in a fast-changing world.

Your AI aggregator can be the trusted beacon that guides your startup or business through 2025 and beyond—start building that future today.